2017 DenselyConnectedConvolutionalNe

- (Huang et al., 2017) ⇒ Gao Huang, Zhuang Liu, Laurens van der Maaten, and Kilian Q. Weinberger. (2017). “Densely Connected Convolutional Networks.” In: Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017). ISBN:978-1-5386-0457-1 doi:10.1109/CVPR.2017.243

Subject Headings: DenseNet; Dense Block; Deep Convolutional Neural Network.

Notes

- Online Resource(s):

- ArXiv: https://arxiv.org/abs/1608.06993

- CVPR 2017 Open Access Article:

- IEEE Computer Society Digital Library:

- Video: https://www.youtube.com/watch?v=-W6y8xnd--U

- Source Code: https://github.com/liuzhuang13/DenseNet.

Cited By

- Google Scholar: ~ 18,345 Citations.

- (Pleiss et al., 2017) ⇒ Geoff Pleiss, Danlu Chen, Gao Huang, Tongcheng Li, Laurens van der Maaten, and Kilian Q. Weinberger. (2017). “Memory-Efficient Implementation of DenseNets.” eprint arXiv:1707.06990.

Quotes

Author Keywords

Abstract

Recent work has shown that convolutional networks can be substantially deeper, more accurate, and efficient to train if they contain shorter connections between layers close to the input and those close to the output. In this paper, we embrace this observation and introduce the Dense Convolutional Network (DenseNet), which connects each layer to every other layer in a feed-forward fashion. Whereas traditional convolutional networks with L layers have L connections, one between each layer and its subsequent layer, our network has L (L + 1) / 2 direct connections. For each layer, the feature-maps of all preceding layers are used as inputs, and its own feature-maps are used as inputs into all subsequent layers. DenseNets have several compelling advantages: they alleviate the vanishing-gradient problem, strengthen feature propagation, encourage feature reuse, and substantially reduce the number of parameters. We evaluate our proposed architecture on four highly competitive object recognition benchmark tasks (CIFAR-10, CIFAR-100, SVHN, and ImageNet). DenseNets obtain significant improvements over the state-of-the-art on most of them, whilst requiring less memory and computation to achieve high performance. Code and pre-trained models are available at https://github.com/liuzhuang13/DenseNet.

1. Introduction

Convolutional neural networks (CNNs) have become the dominant machine learning approach for visual object recognition. Although they were originally introduced over 20 years ago (LeCun et al., 1989), improvements in computer hardware and network structure have enabled the training of truly deep CNNs only recently. The original LeNet5 (LeCun et al., 1998) consisted of 5 layers, VGG featured 19 (Russakovsky et al., 2015), and only last year Highway Networks (Srivastava et al., 2015) and Residual Networks (ResNets) (He et al., 2016) have surpassed the 100-layer barrier.

As CNNs become increasingly deep, a new research problem emerges: as information about the input or gradient passes through many layers, it can vanish and “wash out” by the time it reaches the end (or beginning) of the network. Many recent publications address this or related problems. ResNets (He et al., 2016) and Highway Networks (Srivastava et al., 2015) bypass signal from one layer to the next via identity connections. Stochastic depth (Huang et al., 2016) shortens ResNets by randomly dropping layers during training to allow better information and gradient flow. FractalNets (Larsson et al., 2016) repeatedly combine several parallel layer sequences with different number of convolutional blocks to obtain a large nominal depth, while maintaining many short paths in the network. Although these different approaches vary in network topology and training procedure, they all share a key characteristic: they create short paths from early layers to later layers.

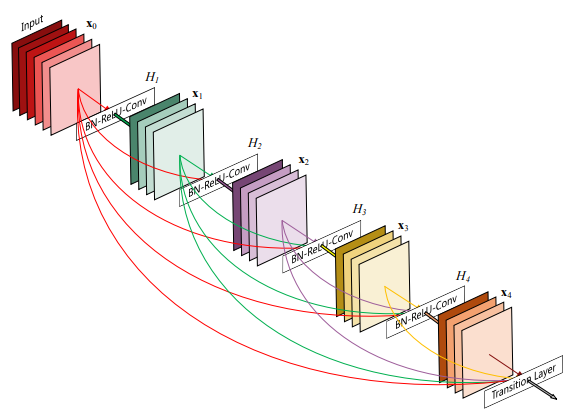

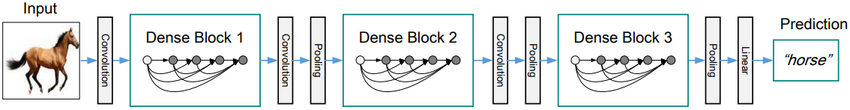

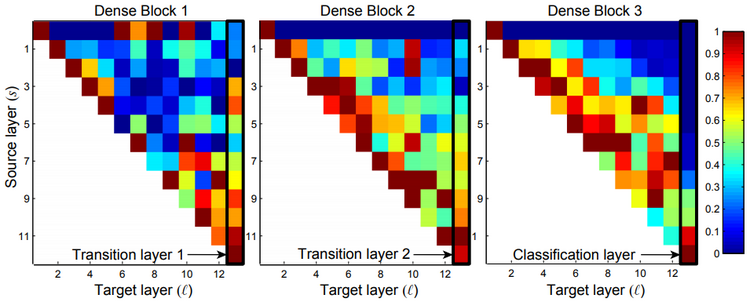

In this paper, we propose an architecture that distills this insight into a simple connectivity pattern: to ensure maximum information flow between layers in the network, we connect all layers (with matching feature-map sizes) directly with each other. To preserve the feed-forward nature, each layer obtains additional inputs from all preceding layers and passes on its own feature-maps to all subsequent layers. Figure 1 illustrates this layout schematically. Crucially, in contrast to ResNets, we never combine features through summation before they are passed into a layer; instead, we combine features by concatenating them. Hence, the $\ell$-th layer has $\ell$ inputs, consisting of the feature-maps of all preceding convolutional blocks. Its own feature-maps are passed on to all $L-\ell$ subsequent layers. This introduces $\frac{L (L + 1)}{2}$ connections in an $L$-layer network, instead of just $L$, as in traditional architectures. Because of its dense connectivity pattern, we refer to our approach as Dense Convolutional Network (DenseNet).
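The connectivity pattern described in this passage can be illustrated with a small toy sketch. It is not the authors' implementation: feature-maps are represented by string labels rather than tensors, and the names `connection_count`, `dense_forward`, and the `growth` parameter (how many new feature-maps each toy layer emits) are hypothetical and chosen only for illustration. The sketch shows that the $\ell$-th layer receives $\ell$ input sources joined by concatenation, and that summing these inputs over all layers gives $\frac{L (L + 1)}{2}$ connections.

```python
def connection_count(num_layers: int) -> int:
    """Count direct connections when layer l (l = 1..L) receives l inputs."""
    return sum(range(1, num_layers + 1))  # same value as L * (L + 1) // 2


def dense_forward(input_maps, num_layers, growth=1):
    """Toy dense block over string labels (hypothetical helper, not the paper's code).

    Returns, for each layer, a pair (number of input sources, number of
    concatenated feature-maps), plus the list of feature-map groups produced.
    """
    features = [list(input_maps)]  # features[0] holds the block input
    inputs_per_layer = []
    for layer in range(1, num_layers + 1):
        # Concatenate (not sum) the outputs of all preceding sources.
        concatenated = [m for group in features for m in group]
        inputs_per_layer.append((len(features), len(concatenated)))
        # Stand-in for a real convolutional layer: emit `growth` new labels.
        new_maps = [f"h{layer}_{i}" for i in range(growth)]
        features.append(new_maps)
    return inputs_per_layer, features


if __name__ == "__main__":
    sizes, feats = dense_forward(["x0_0", "x0_1", "x0_2"], num_layers=3, growth=2)
    print(sizes)                 # per-layer (sources, concatenated maps)
    print(connection_count(3))   # total direct connections for L = 3
```

Because concatenation keeps every earlier feature-map available unchanged, the number of maps a layer sees grows with depth in this sketch, whereas summation (as in ResNets) would keep it fixed.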


(...)

2. Related Work

3. DenseNets


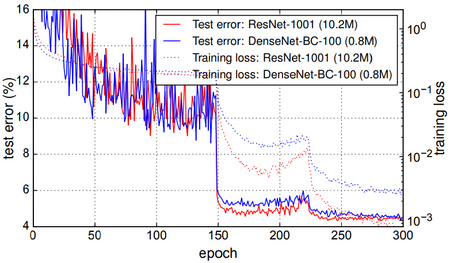

4. Experiments


5. Discussion


6. Conclusion

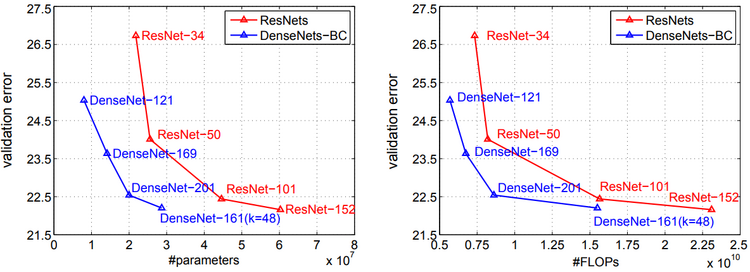

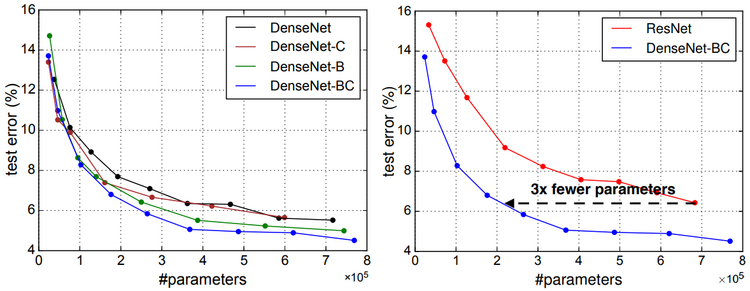

We proposed a new convolutional network architecture, which we refer to as Dense Convolutional Network (DenseNet). It introduces direct connections between any two layers with the same feature-map size. We showed that DenseNets scale naturally to hundreds of layers, while exhibiting no optimization difficulties. In our experiments, DenseNets tend to yield consistent improvement in accuracy with growing number of parameters, without any signs of performance degradation or overfitting. Under multiple settings, it achieved state-of-the-art results across several highly competitive datasets. Moreover, DenseNets require substantially fewer parameters and less computation to achieve state-of-the-art performances. Because we adopted hyperparameter settings optimized for residual networks in our study, we believe that further gains in accuracy of DenseNets may be obtained by more detailed tuning of hyperparameters and learning rate schedules.

Whilst following a simple connectivity rule, DenseNets naturally integrate the properties of identity mappings, deep supervision, and diversified depth. They allow feature reuse throughout the networks and can consequently learn more compact and, according to our experiments, more accurate models. Because of their compact internal representations and reduced feature redundancy, DenseNets may be good feature extractors for various computer vision tasks that build on convolutional features (e.g. Gardner et al., 2015; Gatys et al., 2015). We plan to study such feature transfer with DenseNets in future work.

Acknowledgements

The authors are supported in part by the III-1618134, III-1526012, IIS-1149882 grants from the National Science Foundation, and the Bill and Melinda Gates Foundation. Gao Huang is supported by the International Postdoctoral Exchange Fellowship Program of China Postdoctoral Council (No.20150015). Zhuang Liu is supported by the National Basic Research Program of China Grants 2011CBA00300, 2011CBA00301, the National Natural Science Foundation of China Grant 61361136003. We also thank Daniel Sedra, Geoff Pleiss and Yu Sun for many insightful discussions.

References

BibTeX

@inproceedings{2017_DenselyConnectedConvolutionalNe,
  author    = {Gao Huang and
               Zhuang Liu and
               Laurens van der Maaten and
               Kilian Q. Weinberger},
  title     = {Densely Connected Convolutional Networks},
  booktitle = {Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017)},
  pages     = {2261--2269},
  publisher = {IEEE Computer Society},
  year      = {2017},
  url       = {https://doi.org/10.1109/CVPR.2017.243},
  doi       = {10.1109/CVPR.2017.243},
}

| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2017 DenselyConnectedConvolutionalNe | Gao Huang, Kilian Q. Weinberger, Zhuang Liu, Laurens van der Maaten, Geoff Pleiss, Danlu Chen, Tongcheng Li | | | Densely Connected Convolutional Networks | | | | 10.1109/CVPR.2017.243 | | 2017 |