2019 TransformerXLAttentiveLanguageM

- (Dai et al., 2019) ⇒ Zihang Dai, Zhilin Yang, Yiming Yang, Jaime G. Carbonell, Quoc V. Le, and Ruslan Salakhutdinov. (2019). "Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context".; In: CoRR, arXiv:1901.02860.

Subject Headings: Neural Transformer Network, Transformer-based LM, Transformer-XL.

Notes

- Implemented at

https://github.com/kimiyoung/transformer-xl - Copyright: Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0)

Cited By

- Google Scholar: ~ 1,180 Citations.

2019

- (ICLR, 2019) ⇒ ICLR 2019 Conference Paper717 AnonReviewer2: https://openreview.net/forum?id=HJePno0cYm&noteId=Hkla0-dp27

- QUOTE: This paper proposes a variant of transformer to train language model, it uses two modifications, one is the segment level recurrence with state reuse, the other is relative positional encoding, which significantly enhances the power to model long range dependency. Extensive experiments in terms of perplexity results are reported, specially on WikiText-103 corpus, significant perplexity reduction has been achieved.

Perplexity is not a gold standard for language model, the authors are encouraged to report experimental results on real world applications such as word error rate reduction on ASR or BLEU score improvement on machine translation.


Quotes

Abstract

Transformer networks have a potential of learning longer-term dependency, but are limited by a fixed-length context in the setting of language modeling. As a solution, we propose a novel neural architecture, Transformer-XL, that enables Transformer to learn dependency beyond a fixed length without disrupting temporal coherence. Concretely, it consists of a segment-level recurrence mechanism and a novel positional encoding scheme. Our method not only enables capturing longer-term dependency, but also resolves the problem of context fragmentation. As a result, Transformer-XL learns dependency that is about 80% longer than RNNs and 450% longer than vanilla Transformers, achieves better performance on both short and long sequences, and is up to 1,800+ times faster than vanilla Transformer during evaluation. Additionally, we improve the state-of-the-art (SoTA) results of bpc/perplexity from 1.06 to 0.99 on enwiki8, from 1.13 to 1.08 on text8, from 20.5 to 18.3 on WikiText-103, from 23.7 to 21.8 on One Billion Word, and from 55.3 to 54.5 on Penn Treebank (without finetuning). Our code, pretrained models, and hyperparameters are available in both Tensorflow and PyTorch[1].

1. Introduction

Language modeling is among the important problems that require modeling long-term dependency, with successful applications such as unsupervised pretraining (Dai & Le, 2015; Peters et al., 2018; Radford et al., 2018; Devlin et al., 2018). However, it has been a challenge to equip neural networks with the capability to model long-term dependency in sequential data. Recurrent neural networks (RNNs), in particular Long Short-Term Memory (LSTM) networks (Hochreiter & Schmidhuber, 1997), have been a standard solution to language modeling and obtained strong results on multiple benchmarks. Despite the wide adoption, RNNs are difficult to optimize due to gradient vanishing and explosion (Hochreiter et al., 2001), and the introduction of gating in LSTMs and the gradient clipping technique (Graves, 2013; Pascanu et al., 2012) might not be sufficient to fully address this issue. Empirically, previous work has found that LSTM language models use 200 context words on average (Khandelwal et al., 2018), indicating room for further improvement.

On the other hand, the direct connections between long-distance word pairs baked in attention mechanisms might ease optimization and enable the learning of long-term dependency (Bahdanau et al., 2014; Vaswani et al., 2017). Recently, Al-Rfou et al. (2018) designed a set of auxiliary losses to train deep Transformer networks for character-level language modeling, which outperform LSTMs by a large margin. Despite the success, the LM training in Al-Rfou et al. (2018) is performed on separated fixed-length segments of a few hundred characters, without any information flow across segments. As a consequence of the fixed context length, the model cannot capture any longer-term dependency beyond the predefined context length. In addition, the fixed-length segments are created by selecting a consecutive chunk of symbols without respecting the sentence or any other semantic boundary. Hence, the model lacks necessary contextual information needed to well predict the first few symbols, leading to inefficient optimization and inferior performance. We refer to this problem as context fragmentation.

To address the aforementioned limitations of fixed-length contexts, we propose a new architecture called Transformer-XL (meaning extra long). We introduce the notion of recurrence into our deep self-attention network. In particular, instead of computing the hidden states from scratch for each new segment, we reuse the hidden states obtained in previous segments. The reused hidden states serve as memory for the current segment, which builds up a recurrent connection between the segments.

As a result, modeling very long-term dependency becomes possible because information can be propagated through the recurrent connections. Meanwhile, passing information from the previous segment can also resolve the problem of context fragmentation. More importantly, we show the necessity of using relative positional encodings rather than absolute ones, in order to enable state reuse without causing temporal confusion. Hence, as an additional technical contribution, we introduce a simple but more effective relative positional encoding formulation that generalizes to attention lengths longer than the one observed during training.

Transformer-XL obtained strong results on five datasets, varying from word-level to character-level language modeling. Transformer-XL improves the previous state-of-the-art (SoTA) results from 1.06 to 0.99 in bpc on enwiki8, from 1.13 to 1.08 in bpc on text8, from 20.5 to 18.3 in perplexity on WikiText-103, and from 23.7 to 21.8 in perplexity on One Billion Word. On small data, Transformer-XL also achieves a perplexity of 54.5 on Penn Treebank without finetuning, which is SoTA when comparable settings are considered.

We use two methods to quantitatively study the effective lengths of Transformer-XL and the baselines.

Similar to Khandelwal et al. (2018), we gradually increase the attention length at test time until no further noticeable improvement (0.1% relative gains) can be observed. Our best models in this setting use attention lengths of 1,600 and 3,800 on WikiText-103 and enwiki8 respectively. In addition, we devise a metric called Relative Effective Context Length (RECL) that aims to perform a fair comparison of the gains brought by increasing the context lengths for different models. In this setting, Transformer-XL learns a RECL of 900 words on WikiText-103, while the numbers for recurrent networks and Transformer are only 500 and 128.

2 Related Work

In the last few years, the field of language modeling has witnessed many significant advances, including but not limited to devising novel architectures to better encode the context (Bengio et al., 2003; Mikolov et al., 2010; Zilly et al., 2016; Krause et al., 2016; Grave et al., 2016b; Dauphin et al., 2016; Chung et al., 2016; Merity et al., 2016; Kalchbrenner et al., 2016; Al-Rfou et al., 2018), improving regularization and optimization algorithms (Zaremba et al., 2014; Inan et al., 2016; Press & Wolf, 2016; Merity et al., 2017; Gal & Ghahramani, 2016), speeding up the Softmax computation (Morin & Bengio, 2005; Kuchaiev & Ginsburg, 2017; Grave et al., 2016a; Jozefowicz et al., 2016), and enriching the output distribution family (Yang et al., 2017; Kanai et al., 2018).

To capture the long-range context in language modeling, a line of work directly feeds a representation of the wider context into the network as an additional input. Existing works range from ones where context representations are manually defined (Mikolov & Zweig, 2012; Ji et al., 2015; Wang & Cho, 2015) to others that rely on document-level topics learned from data (Dieng et al., 2016; Wang et al., 2017).

More broadly, in generic sequence modeling, how to capture long-term dependency has been a longstanding research problem. From this perspective, since the ubiquitous adoption of LSTM, many efforts have been spent on relieving the vanishing gradient problem, including better initialization (Le et al., 2015), additional loss signal (Trinh et al., 2018), augmented memory structure (Ke et al., 2018) and others that modify the internal architecture of RNNs to ease the optimization (Mikolov et al., 2014; Koutnik et al., 2014; Wu et al., 2016; Li et al., 2018). Different from them, our work is based on the Transformer architecture and shows that language modeling as a real-world task benefits from the ability to learn longer-term dependency.

3. Model

Given a corpus of tokens [math]\displaystyle{ x = (x_1, \cdots , x_T ), }[/math] the task of language modeling is to estimate the joint probability [math]\displaystyle{ P(x) }[/math], which is often auto-regressively factorized as [math]\displaystyle{ P(x) = \prod_{t} P(x_t | x_{\lt t}) }[/math]. With the factorization, the problem reduces to estimating each conditional factor. In this work, we stick to the standard neural approach to modeling the conditional probability. Specifically, a trainable neural network is used to encode the context $x_{<t}$ into a fixed size hidden state, which is multiplied with the word embeddings to obtain the logits. The logits are then fed into the Softmax function, yielding a categorical probability distribution over the next token.
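The factorization above can be illustrated with a minimal sketch. The callable `next_token_logits` is a hypothetical stand-in for the trained network (it is not part of the paper or its code release); the toy model used here simply returns uniform logits.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sequence_log_prob(tokens, next_token_logits):
    """Sum of log P(x_t | x_<t) over the sequence (chain rule).

    next_token_logits(prefix) is a hypothetical stand-in for the network:
    it maps the context x_<t to one logit per vocabulary entry.
    """
    total = 0.0
    for t, token in enumerate(tokens):
        probs = softmax(next_token_logits(tokens[:t]))
        total += math.log(probs[token])
    return total

# Toy stand-in model: uniform logits over a vocabulary of 4 tokens.
uniform = lambda prefix: [0.0, 0.0, 0.0, 0.0]
log_p = sequence_log_prob([2, 0, 3], uniform)
```

With a uniform model over four tokens, each conditional factor is 1/4, so the joint log-probability of a three-token sequence is three times log(1/4).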

3.1. Vanilla Transformer Language Models

In order to apply Transformer or self-attention to language modeling, the central problem is how to train a Transformer to effectively encode an arbitrarily long context into a fixed size representation. Given infinite memory and computation, a simple solution would be to process the entire context sequence using an unconditional Transformer decoder, similar to a feed-forward neural network. However, this is usually infeasible with the limited resource in practice.

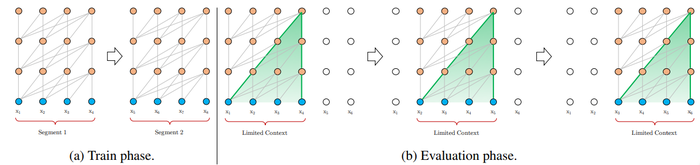

One feasible but crude approximation is to split the entire corpus into shorter segments of manageable sizes, and only train the model within each segment, ignoring all contextual information from previous segments. This is the idea adopted by Al-Rfou et al. (2018). We call it the vanilla model and visualize it in Fig. 1a. Under this training paradigm, information never flows across segments in either the forward or backward pass. There are two critical limitations of using a fixed-length context. First, the largest possible dependency length is upper bounded by the segment length, which is a few hundred on character-level language modeling (Al-Rfou et al., 2018). Therefore, although the self-attention mechanism is less affected by the vanishing gradient problem compared to RNNs, the vanilla model is not able to fully exploit this optimization advantage. Second, though it is possible to use padding to respect the sentence or other semantic boundaries, in practice it has been standard practice to simply chunk long text into fixed-length segments due to improved efficiency (Peters et al., 2018; Devlin et al., 2018; Al-Rfou et al., 2018). However, simply chunking a sequence into fixed-length segments will lead to the context fragmentation problem as discussed in Section 1.
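A small sketch of the chunking scheme described above, under the assumption that the corpus is a flat list of tokens. The helper names `split_into_segments` and `visible_context` are illustrative only.

```python
def split_into_segments(tokens, segment_len):
    """Chunk a token list into consecutive fixed-length segments.

    Boundaries ignore sentence structure, which is what causes
    context fragmentation at the start of each segment.
    """
    return [tokens[i:i + segment_len] for i in range(0, len(tokens), segment_len)]

def visible_context(position, segment_len):
    """Number of earlier tokens a vanilla model can see at a corpus position."""
    return position % segment_len

corpus = "the cat sat on the mat . it purred".split()
segments = split_into_segments(corpus, 4)
```

In this toy corpus the second segment starts in the middle of a sentence, and its first token is predicted with no context at all, even though four earlier tokens exist in the corpus.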

During evaluation, at each step, the vanilla model also consumes a segment of the same length as in training, but only makes one prediction at the last position. Then, at the next step, the segment is shifted to the right by only one position, and the new segment has to be processed all from scratch. As shown in Fig. 1b, this procedure ensures that each prediction utilizes the longest possible context exposed during training, and also relieves context fragmentation issue encountered in training. However, this evaluation procedure is extremely expensive. We will show that our proposed architecture is able to substantially improve the evaluation speed.

3.2. Segment-Level Recurrence With State Reuse

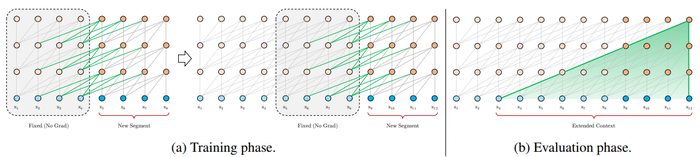

To address the limitations of using a fixed-length context, we propose to introduce a recurrence mechanism to the Transformer architecture. During training, the hidden state sequence computed for the previous segment is fixed and cached to be reused as an extended context when the model processes the next new segment, as shown in Fig. 2a. Although the gradient still remains within a segment, this additional input allows the network to exploit information in the history, leading to an ability of modeling longer-term dependency and avoiding context fragmentation. Formally, let the two consecutive segments of length [math]\displaystyle{ L }[/math] be [math]\displaystyle{ s_{\tau} = [x_{\tau,1}, \cdots , x_{\tau,L}] }[/math] and [math]\displaystyle{ s_{\tau+1} = [x_{\tau+1,1},\cdots, x_{\tau+1,L}] }[/math] respectively. Denote the [math]\displaystyle{ n }[/math]-th layer hidden state sequence produced for the [math]\displaystyle{ \tau }[/math]-th segment [math]\displaystyle{ s_{\tau} }[/math] by [math]\displaystyle{ h^{n}_{\tau} \in R^{L\times d} }[/math], where [math]\displaystyle{ d }[/math] is the hidden dimension. Then, the [math]\displaystyle{ n }[/math]-th layer hidden state for segment [math]\displaystyle{ s_{\tau+1} }[/math] is produced (schematically) as follows,

[math]\displaystyle{ \begin{aligned} \tilde{h}^{n-1}_{\tau+1} &= \left[ SG(h^{n-1}_{\tau}) \circ h^{n-1}_{\tau+1} \right], \\ q^{n}_{\tau+1},\; k^{n}_{\tau+1},\; v^{n}_{\tau+1} &= h^{n-1}_{\tau+1} W_q^{\top},\; \tilde{h}^{n-1}_{\tau+1} W_k^{\top},\; \tilde{h}^{n-1}_{\tau+1} W_v^{\top}, \\ h^{n}_{\tau+1} &= \text{Transformer-Layer}\left(q^{n}_{\tau+1}, k^{n}_{\tau+1}, v^{n}_{\tau+1}\right) \end{aligned} }[/math]

where the function [math]\displaystyle{ SG(\cdot) }[/math] stands for stop-gradient, the notation [math]\displaystyle{ [h_u\circ h_v] }[/math] indicates the concatenation of two hidden sequences along the length dimension, and [math]\displaystyle{ W }[/math] denotes model parameters. Compared to the standard Transformer, the critical difference lies in that the key [math]\displaystyle{ k^n_{\tau +1} }[/math] and value [math]\displaystyle{ v^n_{\tau+1} }[/math] are conditioned on the extended context [math]\displaystyle{ \tilde{h}^{n-1}_{\tau+1} }[/math] and hence [math]\displaystyle{ h^{n-1}_{\tau} }[/math] cached from the previous segment. We emphasize this particular design by the green paths in Fig. 2a.
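The state-reuse step can be sketched in NumPy as a single-head attention over the extended context. This is an illustration under stated assumptions, not the released implementation: the function name `attend_with_memory` is hypothetical, the scores use the usual scaled dot product, positional terms are omitted, and because NumPy has no automatic differentiation the stop-gradient is only indicated by treating the cached states as a constant.

```python
import numpy as np

def attend_with_memory(h_cur, h_prev, W_q, W_k, W_v):
    """One single-head attention step over [SG(h_prev) ∘ h_cur].

    h_cur: (L, d) current-segment states; h_prev: (M, d) cached states.
    NumPy has no autograd, so the stop-gradient is only indicated by
    treating h_prev as a constant copy.
    """
    M, L = h_prev.shape[0], h_cur.shape[0]
    h_ext = np.concatenate([h_prev.copy(), h_cur], axis=0)  # (M+L, d)
    q = h_cur @ W_q.T  # queries come from the current segment only
    k = h_ext @ W_k.T  # keys are conditioned on the extended context
    v = h_ext @ W_v.T  # values are conditioned on the extended context
    scores = q @ k.T / np.sqrt(q.shape[1])  # (L, M+L)
    # Causal mask: query i sees all cached states and current positions <= i.
    future = np.triu(np.ones((L, M + L), dtype=bool), k=M + 1)
    scores = np.where(future, -np.inf, scores)
    weights = np.exp(scores - scores.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
d, L = 8, 4
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))
h_prev = rng.normal(size=(L, d))  # cached from the previous segment
h_cur = rng.normal(size=(L, d))   # states of the current segment
out, weights = attend_with_memory(h_cur, h_prev, W_q, W_k, W_v)
```

Each query row of `weights` spans `M + L` key positions, so the first token of the current segment already attends to the whole cached segment rather than to nothing.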

With this recurrence mechanism applied to every two consecutive segments of a corpus, it essentially creates a segment-level recurrence in the hidden states. As a result, the effective context being utilized can go way beyond just two segments. However, notice that the recurrent dependency between [math]\displaystyle{ h^n_{\tau + 1} }[/math] and [math]\displaystyle{ h^{n-1}_{\tau} }[/math] shifts one layer downwards per-segment, which differs from the same-layer recurrence in conventional RNN-LMs. Consequently, the largest possible dependency length grows linearly w.r.t. the number of layers as well as the segment length, i.e., [math]\displaystyle{ O (N \times L) }[/math], as visualized by the shaded area in Fig. 2b. This is analogous to truncated BPTT (Mikolov et al., 2010), a technique developed for training RNN-LMs. However, different from truncated BPTT, our method caches a sequence of hidden states instead of the last one, and should be applied together with the relative positional encoding technique described in Section 3.3.

Besides achieving extra long context and resolving fragmentation, another benefit that comes with the recurrence scheme is significantly faster evaluation. Specifically, during evaluation, the representations from the previous segments can be reused instead of being computed from scratch as in the case of the vanilla model. In our experiments on enwiki8, Transformer-XL is up to 1,800+ times faster than the vanilla model during evaluation (see Section 4).
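A rough way to see where the saving comes from is to count how many token positions must be encoded during evaluation. The sketch below is a back-of-the-envelope count under simplifying assumptions (it ignores the cost of attention itself and the shorter windows at the start of the corpus), so it does not reproduce the measured speedup; the function names are illustrative.

```python
def vanilla_eval_positions(num_tokens, segment_len):
    """Positions encoded when the window shifts by one and is recomputed."""
    return num_tokens * segment_len

def cached_eval_positions(num_tokens):
    """Positions encoded when earlier hidden states are reused."""
    return num_tokens

# Ratio of encoded positions for a window of 3,800 tokens.
ratio = vanilla_eval_positions(1000, 3800) / cached_eval_positions(1000)
```

Under this count the vanilla procedure re-encodes every position once per prediction, so the amount of recomputation grows with the window length.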

Finally, notice that the recurrence scheme does not need to be restricted to only the previous segment. In theory, we can cache as many previous segments as the GPU memory allows, and reuse all of them as the extra context when processing the current segment. Thus, we can cache a predefined length-[math]\displaystyle{ M }[/math] old hidden states spanning (possibly) multiple segments, and refer to them as the memory [math]\displaystyle{ m^n_{\tau} \in R^{M\times d} }[/math], due to a clear connection to the memory augmented neural networks (Graves et al., 2014; Weston et al., 2014). In our experiments, we set [math]\displaystyle{ M }[/math] equal to the segment length during training, and increase it by multiple times during evaluation.
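Keeping a fixed-length memory that may span several segments can be sketched as follows for a single layer. The helper `update_memory` is a hypothetical name, and the toy hidden states are constant-valued so that the retained rows are easy to identify.

```python
import numpy as np

def update_memory(memory, hidden, mem_len):
    """Append new hidden states and keep only the most recent mem_len rows."""
    if mem_len == 0:
        return hidden[:0]  # empty memory; avoids the [-0:] slicing pitfall
    return np.concatenate([memory, hidden], axis=0)[-mem_len:]

d = 2
memory = np.zeros((0, d))  # nothing cached before the first segment
for s in range(3):
    hidden = np.full((4, d), float(s))  # toy states for segment s
    memory = update_memory(memory, hidden, mem_len=6)
```

With segments of length 4 and a memory of length 6, the memory after the third segment holds the last two states of the second segment followed by all four states of the third.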

3.3. Relative Positional Encodings

While we found the idea presented in the previous subsection very appealing, there is a crucial technical challenge we haven’t solved in order to reuse the hidden states. That is, how can we keep the positional information coherent when we reuse the states? Recall that, in the standard Transformer, the information of sequence order is provided by a set of positional encodings, denoted as $\mathbf{U} \in \R^{L_{max}\times d}$, where the $i$-th row $\mathbf{U}_i$ corresponds to the $i$-th absolute position within a segment and $L_{max}$ prescribes the maximum possible length to be modeled. Then, the actual input to the Transformer is the element-wise addition of the word embeddings and the positional encodings. If we simply adapt this positional encoding to our recurrence mechanism, the hidden state sequence would be computed schematically by

$\begin{aligned} \mathbf{h}_{\tau+1} &=f\left(\mathbf{h}_{\tau}, \mathbf{E}_{\mathbf{s}_{\tau+1}}+\mathbf{U}_{1: L}\right) \\ \mathbf{h}_{\tau} &=f\left(\mathbf{h}_{\tau-1}, \mathbf{E}_{\mathbf{s}_{\tau}}+\mathbf{U}_{1: L}\right) \end{aligned}$

where $\mathbf{E}_{\mathbf{s}_{\tau}} \in \R^{L\times d}$ is the word embedding sequence of $\mathbf{s}_{\tau}$, and $f$ represents a transformation function. Notice that both $\mathbf{E}_{\mathbf{s}_{\tau}}$ and $\mathbf{E}_{\mathbf{s}_{\tau+1}}$ are associated with the same positional encoding $\mathbf{U}_{1:L}$. As a result, the model has no information to distinguish the positional difference between $x_{\tau,j}$ and $x_{{\tau+1},j}$ for any $j = 1, \ldots, L$, resulting in a sheer performance loss.

In order to avoid this failure mode, the fundamental idea is to only encode the relative positional information in the hidden states. Conceptually, the positional encoding gives the model a temporal clue or “bias” about how information should be gathered, i.e., where to attend. For the same purpose, instead of incorporating bias statically into the initial embedding, one can inject the same information into the attention score of each layer. More importantly, it is more intuitive and generalizable to define the temporal bias in a relative manner. For instance, when a query vector $q_{\tau, i}$ attends on the key vectors $k_{\tau, \leq i}$, it does not need to know the absolute position of each key vector to identify the temporal order of the segment. Instead, it suffices to know the relative distance between each key vector $k_{\tau, j}$ and itself $q_{\tau, i}$, i.e. $i-j$. Practically, one can create a set of relative positional encodings $\mathbf{R}\in \R^{L_{max}\times d}$, where the $i$-th row $\mathbf{R}_i$ indicates a relative distance of $i$ between two positions. By injecting the relative distance dynamically into the attention score, the query vector can easily distinguish the representations of $x_{\tau, j}$ and $x_{\tau + 1, j}$ from their different distances, making the state reuse mechanism feasible. Meanwhile, we won’t lose any temporal information, as the absolute position can be recovered recursively from relative distances.
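To make the distinction concrete, the following sketch builds the matrix of relative distances between the queries of the current segment and all keys in the extended context (cached states followed by current states). The function name and the 0-based indexing are illustrative choices.

```python
import numpy as np

def relative_distances(query_len, mem_len):
    """D[i, j] = (mem_len + i) - j, the distance from query i back to key j."""
    q_pos = np.arange(mem_len, mem_len + query_len)[:, None]
    k_pos = np.arange(mem_len + query_len)[None, :]
    return q_pos - k_pos

# Segment length 3 with one cached segment of length 3.
D = relative_distances(query_len=3, mem_len=3)
```

Column `j` (a cached token) and column `j + 3` (the token at the same within-segment index of the current segment) always differ by 3 in distance, which is the information that absolute encodings fail to provide. Negative entries correspond to future positions, which are masked out.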

Previously, the idea of relative positional encodings has been explored in the context of machine translation (Shaw et al., 2018) and music generation (Huang et al., 2018). Here, we offer a different derivation, arriving at a new form of relative positional encodings, which not only has a one-to-one correspondence to its absolute counterpart but also enjoys much better generalization empirically (see Section 4). Firstly, in the standard Transformer (Vaswani et al., 2017), the attention score between query $q_i$ and key vector $k_j$ within the same segment can be decomposed as

$\mathbf{A}_{i, j}^{\mathrm{abs}} =\underbrace{\mathbf{E}_{x_{i}}^{\top} \mathbf{W}_{q}^{\top} \mathbf{W}_{k} \mathbf{E}_{x_{j}}}_{(a)}+\underbrace{\mathbf{E}_{x_{i}}^{\top} \mathbf{W}_{q}^{\top} \mathbf{W}_{k} \mathbf{U}_{j}}_{(b)} +\underbrace{\mathbf{U}_{i}^{\top} \mathbf{W}_{q}^{\top} \mathbf{W}_{k} \mathbf{E}_{x_{j}}}_{(c)}+\underbrace{\mathbf{U}_{i}^{\top} \mathbf{W}_{q}^{\top} \mathbf{W}_{k} \mathbf{U}_{j}}_{(d)}$

Following the idea of only relying on relative positional information, we propose to reparameterize the four terms as follows

$\mathbf{A}_{i, j}^{\mathrm{rel}} =\underbrace{\mathbf{E}_{x_{i}}^{\top} \mathbf{W}_{q}^{\top} \mathbf{W}_{k, E} \mathbf{E}_{x_{j}}}_{(a)}+\underbrace{\mathbf{E}_{x_{i}}^{\top} \mathbf{W}_{q}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{i-j}}_{(b)} +\underbrace{u^{\top} \mathbf{W}_{k, E} \mathbf{E}_{x_{j}}}_{(c)}+\underbrace{v^{\top} \mathbf{W}_{k, R} \mathbf{R}_{i-j}}_{(d)}$

- The first change we make is to replace all appearances of the absolute positional embedding $\mathbf{U}_j$ for computing key vectors in terms (b) and (d) with its relative counterpart $\mathbf{R}_{i-j}$. This essentially reflects the prior that only the relative distance matters for where to attend. Note that $\mathbf{R}$ is a sinusoid encoding matrix (Vaswani et al., 2017) without learnable parameters.

- Secondly, we introduce a trainable parameter $u \in \R^d$ to replace the query $\mathbf{U}^{\top}_i \mathbf{W}^{\top}_q$ in term (c). In this case, since the query vector is the same for all query positions, it suggests that the attentive bias towards different words should remain the same regardless of the query position. With a similar reasoning, a trainable parameter $v \in \R^d$ is added to substitute $\mathbf{U}^{\top}_i \mathbf{W}^{\top}_q$ in term (d).

- Finally, we deliberately separate the two weight matrices $\mathbf{W}_{k,E}$ and $\mathbf{W}_{k,R}$ for producing the content-based key vectors and location-based key vectors respectively.

Under the new parameterization, each term has an intuitive meaning: term (a) represents content-based addressing, term (b) captures a content-dependent positional bias, term (c) governs a global content bias, and (d) encodes a global positional bias.
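The four terms can be computed directly for a single segment as a check on the decomposition. This is a minimal sketch under stated assumptions: the function names are hypothetical, all weights are random, the hidden size is even, and the sinusoid layout (sine half concatenated with cosine half) is one common choice rather than something specified in the text above.

```python
import numpy as np

def sinusoid(distances, d):
    """Sinusoid encodings (Vaswani et al., 2017) evaluated at given distances.

    Assumes an even d; sine and cosine halves are concatenated.
    """
    inv_freq = 1.0 / (10000 ** (np.arange(0, d, 2) / d))
    angles = np.outer(distances, inv_freq)
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=1)

def rel_attention_terms(E, W_q, W_kE, W_kR, u, v):
    """Return terms (a)-(d) of A^rel as (L, L) matrices for one segment."""
    L, d = E.shape
    q = E @ W_q.T            # row i is (W_q E_i)^T
    k_content = E @ W_kE.T   # row j is (W_kE E_j)^T
    idx = np.arange(L)
    dist = idx[:, None] - idx[None, :]  # i - j
    R = sinusoid(np.arange(L), d)       # one row per distance 0..L-1
    # Negative distances (future keys) are clipped; they are masked later anyway.
    k_pos = (R @ W_kR.T)[np.clip(dist, 0, None)]  # (L, L, d): W_kR R_{i-j}
    a = q @ k_content.T                           # content-based addressing
    b = np.einsum("id,ijd->ij", q, k_pos)         # content-dependent positional bias
    c = np.broadcast_to(k_content @ u, (L, L))    # global content bias
    d_term = k_pos @ v                            # global positional bias
    return a, b, c, d_term

rng = np.random.default_rng(0)
L, d = 5, 8
E = rng.normal(size=(L, d))
W_q, W_kE, W_kR = (rng.normal(size=(d, d)) for _ in range(3))
u, v = rng.normal(size=d), rng.normal(size=d)
a, b, c, d_term = rel_attention_terms(E, W_q, W_kE, W_kR, u, v)
```

Term (c) is identical across query rows, and term (d) depends only on the distance `i - j`, which matches the interpretation of the two as global biases.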

In comparison, the formulation in Shaw et al. (2018) only has terms (a) and (b), dropping the two bias terms (c) and (d). Moreover, Shaw et al. (2018) merge the multiplication $\mathbf{W}_{k}\mathbf{R}$ into a single trainable matrix $\mathbf{\hat{R}}$, which abandons the inductive bias built into the original sinusoid positional encoding (Vaswani et al., 2017). In contrast, our relative positional embedding $\mathbf{R}$ adapts the sinusoid formulation. As a benefit of the inductive bias, a model trained on a memory of some certain length can automatically generalize to a memory several times longer during evaluation.

Equipping the recurrence mechanism with our proposed relative positional embedding, we finally arrive at the Transformer-XL architecture. For completeness, we summarize the computational procedure for an $N$-layer Transformer-XL with a single attention head here. For $n = 1,\ldots , N$:

$ \begin{aligned} \widetilde{\mathbf{h}}_{\tau}^{n-1} &= \left[\mathrm{SG}\left(\mathbf{m}_{\tau}^{n-1}\right) \circ \mathbf{h}_{\tau}^{n-1}\right] \\ \mathbf{q}_{\tau}^{n}, \mathbf{k}_{\tau}^{n}, \mathbf{v}_{\tau}^{n} &= \mathbf{h}_{\tau}^{n-1} \mathbf{W}_{q}^{n \top}, \widetilde{\mathbf{h}}_{\tau}^{n-1} \mathbf{W}_{k, E}^{n \top}, \widetilde{\mathbf{h}}_{\tau}^{n-1} \mathbf{W}_{v}^{n \top} \\ \mathbf{A}_{\tau, i, j}^{n} &= \mathbf{q}_{\tau, i}^{n}{ }^{\top} \mathbf{k}_{\tau, j}^{n}+\mathbf{q}_{\tau, i}^{n}{ }^{\top} \mathbf{W}_{k, R}^{n} \mathbf{R}_{i-j} +u^{\top} \mathbf{k}_{\tau, j}^{n}+v^{\top} \mathbf{W}_{k, R}^{n} \mathbf{R}_{i-j} \\ \mathbf{a}_{\tau}^{n} &= \text{Masked-Softmax}\left(\mathbf{A}_{\tau}^{n}\right) \mathbf{v}_{\tau}^{n} \\ \mathbf{o}_{\tau}^{n} &= \operatorname{LayerNorm}\left(\operatorname{Linear}\left(\mathbf{a}_{\tau}^{n}\right)+\mathbf{h}_{\tau}^{n-1}\right) \\ \mathbf{h}_{\tau}^{n} &= \text{Positionwise-Feed-Forward}\left(\mathbf{o}_{\tau}^{n}\right) \end{aligned} $

with $\mathbf{h}^0_{\tau} := \mathbf{E}_{s_{\tau}}$ defined as the word embedding sequence. In addition, it is worth mentioning that a naive way to compute $\mathbf{A}$ requires computing $\mathbf{W}^n_{k, R}\mathbf{R}_{i-j}$ for all pairs $(i, j)$, whose cost is quadratic w.r.t. the sequence length. However, noticing that the value of $i - j$ only ranges from zero to the sequence length, we show a simple computation procedure in Appendix B, which reduces the cost to be linear w.r.t. the sequence length.
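The observation that only a linear number of distinct distances occurs can be illustrated with the following sketch. It is not the procedure from Appendix B (which is not reproduced here); it is a simpler gather-based variant, with hypothetical function names, that projects each distinct distance once and then indexes the result by `i - j`.

```python
import numpy as np

def term_b_naive(q, R, W_kR):
    """Project R_{i-j} separately for every (i, j) pair with j <= i."""
    L = q.shape[0]
    out = np.zeros((L, L))
    for i in range(L):
        for j in range(i + 1):
            out[i, j] = q[i] @ (W_kR @ R[i - j])
    return out

def term_b_shared(q, R, W_kR):
    """Project each distinct distance once, then index by i - j."""
    L = q.shape[0]
    projected = R @ W_kR.T         # L projections instead of one per pair
    by_distance = q @ projected.T  # [i, r] = q_i . (W_kR R_r)
    idx = np.arange(L)
    dist = idx[:, None] - idx[None, :]
    gathered = by_distance[idx[:, None], np.clip(dist, 0, None)]
    return np.where(dist >= 0, gathered, 0.0)  # zero out future positions

rng = np.random.default_rng(1)
L, d = 6, 4
q = rng.normal(size=(L, d))
R = rng.normal(size=(L, d))  # stand-in for the relative encodings
W_kR = rng.normal(size=(d, d))
same = np.allclose(term_b_naive(q, R, W_kR), term_b_shared(q, R, W_kR))
```

Both functions produce the same lower-triangular matrix, but the second performs the projection by the positional key matrix only once per distance.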

4. Experiments

4.1. Main Results

We apply Transformer-XL to a variety of datasets on both word-level and character-level language modeling to have a comparison with state-of-the-art systems, including WikiText-103 (Merity et al., 2016), enwiki8 (LLC, 2009), text8 (LLC, 2009), One Billion Word (Chelba et al., 2013), and Penn Treebank (Mikolov & Zweig, 2012).
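The results that follow are reported as perplexity (PPL) on word-level datasets and bits per character (bpc) on character-level datasets. As a reminder of how both relate to the average negative log-likelihood per token, here is a short sketch using the standard definitions; the function names are illustrative and the loss is assumed to be measured in nats.

```python
import math

def perplexity(mean_nll_nats):
    """Perplexity is the exponential of the mean negative log-likelihood."""
    return math.exp(mean_nll_nats)

def bits_per_character(mean_nll_nats):
    """Convert a mean per-character loss from nats to bits."""
    return mean_nll_nats / math.log(2)

ppl = perplexity(math.log(18.3))
bpc = bits_per_character(math.log(2))
```

A mean loss of ln(18.3) nats per word corresponds to a perplexity of 18.3, and a mean loss of ln(2) nats per character corresponds to exactly 1 bpc.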

WikiText-103 is the largest available word-level language modeling benchmark with long-term dependency. It contains 103M training tokens from 28K articles, with an average length of 3.6K tokens per article, which allows testing the ability of long-term dependency modeling. We set the attention length to 384 during training and 1600 during evaluation. We adopted adaptive softmax and input representations (Baevski and Auli, 2018; Grave et al., 2016a). As shown in Table 1, Transformer-XL reduces the previous state-of-the-art (SoTA) perplexity from 20.5 to 18.3, which demonstrates the superiority of the Transformer-XL architecture.

| Model | #Param | PPL |
|---|---|---|
| Grave et al. (2016b) - LSTM | − | 48.7 |
| Bai et al. (2018) - TCN | − | 45.2 |
| Dauphin et al. (2016) - GCNN-8 | − | 44.9 |
| Grave et al. (2016b) - LSTM + Neural cache | − | 40.8 |
| Dauphin et al. (2016) - GCNN-14 | − | 37.2 |
| Merity et al. (2018) - QRNN | 151M | 33.0 |
| Rae et al. (2018) - Hebbian + Cache | − | 29.9 |
| Ours - Transformer-XL Standard | 151M | 24.0 |
| Baevski and Auli (2018) - Adaptive Input | 247M | 20.5 |
| Ours - Transformer-XL Large | 257M | 18.3 |

The dataset enwik8 contains 100M bytes of unprocessed Wikipedia text. We compare our architecture with the previous results in Table 2. Under the model size constraint, the 12-layer Transformer-XL achieves a new SoTA result, outperforming the 12-layer vanilla Transformer from Al-Rfou et al. (2018) by 0.05, while both Transformer variants have a large margin over conventional RNN-based models. Notably, our 12-layer architecture achieves the same result as the 64-layer network from Al-Rfou et al. (2018), using only 17% of the parameter budget. In order to see whether better performances can be obtained by increasing the model size, we train 18-layer and 24-layer Transformer-XLs with increased model sizes. With the attention length 784 during training and 3,800 during evaluation, we obtained a new SoTA result and our method is the first to break through 1.0 on widely-studied character-level benchmarks. Different from Al-Rfou et al. (2018), Transformer-XL does not need any auxiliary losses, and thus all benefits are credited to a better architecture.

| Model | #Param | bpc |
|---|---|---|
| Ha et al. (2016) - LN HyperNetworks | 27M | 1.34 |
| Chung et al. (2016) - LN HM-LSTM | 35M | 1.32 |
| Zilly et al. (2016) - RHN | 46M | 1.27 |
| Mujika et al. (2017) - FS-LSTM-4 | 47M | 1.25 |
| Krause et al. (2016) - Large mLSTM | 46M | 1.24 |
| Knol (2017) - cmix v13 | − | 1.23 |
| Al-Rfou et al. (2018) - 12L Transformer | 44M | 1.11 |
| Ours - 12L Transformer-XL | 41M | 1.06 |
| Al-Rfou et al. (2018) - 64L Transformer | 235M | 1.06 |
| Ours - 18L Transformer-XL | 88M | 1.03 |
| Ours - 24L Transformer-XL | 277M | 0.99 |

Similar to but different from enwik8, text8 contains 100M processed Wikipedia characters created by lowercasing the text and removing any character other than the 26 letters a through z and space. Due to the similarity, we simply adapt the best model and the same hyper-parameters on enwik8 to text8 without further tuning. The comparison with previous methods is summarized in Table 3. Again, Transformer-XL achieves the new SoTA result with a clear margin.

| Model | #Param | bpc |
|---|---|---|
| Cooijmans et al. (2016) - BN-LSTM | − | 1.36 |
| Chung et al. (2016) - LN HM-LSTM | 35M | 1.29 |
| Zilly et al. (2016) - RHN | 45M | 1.27 |
| Krause et al. (2016) - Large mLSTM | 45M | 1.27 |
| Al-Rfou et al. (2018) - 12L Transformer | 44M | 1.18 |
| Al-Rfou et al. (2018) - 64L Transformer | 235M | 1.13 |
| Ours - 24L Transformer-XL | 277M | 1.08 |

One Billion Word does not preserve any long-term dependency because sentences have been shuffled. Consequently, this dataset mainly tests the ability of modeling only short-term dependency. The comparison between Transformer-XL and the other methods is shown in Table 4. Although Transformer-XL is mainly designed to better capture longer-term dependency, it dramatically improves the single-model SoTA from 23.7 to 21.8. Specifically, Transformer-XL significantly outperforms a contemporary method using vanilla Transformers (Baevski and Auli, 2018), suggesting the advantage of Transformer-XL is generalizable to modeling short sequences.

| Model | #Param | PPL |
|---|---|---|
| Shazeer et al. (2014) - Sparse Non-Negative | 33B | 52.9 |
| Chelba et al. (2013) - RNN-1024 + 9 Gram | 20B | 51.3 |
| Kuchaiev and Ginsburg (2017) - G-LSTM-2 | − | 36.0 |
| Dauphin et al. (2016) - GCNN-14 bottleneck | − | 31.9 |
| Jozefowicz et al. (2016) - LSTM | 1.8B | 30.6 |
| Jozefowicz et al. (2016) - LSTM + CNN Input | 1.04B | 30.0 |
| Shazeer et al. (2017) - Low-Budget MoE | ∼5B | 34.1 |
| Shazeer et al. (2017) - High-Budget MoE | ∼5B | 28.0 |
| Shazeer et al. (2018) - Mesh Tensorflow | 4.9B | 24.0 |
| Baevski and Auli (2018) - Adaptive Input | 0.46B | 24.1 |
| Baevski and Auli (2018) - Adaptive Input | 1.0B | 23.7 |
| Ours - Transformer-XL Base | 0.46B | 23.5 |
| Ours - Transformer-XL Large | 0.8B | 21.8 |

We also report the results on word-level Penn Treebank in Table 5. Similar to AWD-LSTM (Merity et al., 2017), we apply variational dropout and weight average to Transformer-XL. With proper regularization, Transformer-XL achieves a new SoTA result among models without two-step finetuning. Penn Treebank has only 1M training tokens, which implies that Transformer-XL also generalizes well even on small datasets.

| Model | #Param | PPL |
|---|---|---|
| Inan et al. (2016) - Tied Variational LSTM | 24M | 73.2 |
| Zilly et al. (2016) - Variational RHN | 23M | 65.4 |
| Zoph and Le (2016) - NAS Cell | 25M | 64.0 |
| Merity et al. (2017) - AWD-LSTM | 24M | 58.8 |
| Pham et al. (2018) - Efficient NAS | 24M | 58.6 |
| Liu et al. (2018) - Differentiable NAS | 23M | 56.1 |
| Yang et al. (2017) - AWD-LSTM-MoS | 22M | 55.97 |
| Melis et al. (2018) - Dropout tuning | 24M | 55.3 |
| Ours - Transformer-XL | 24M | 54.52 |
| Merity et al. (2017) - AWD-LSTM+Finetune† | 24M | 57.3 |
| Yang et al. (2017) - MoS+Finetune† | 22M | 54.44 |

4.2. Ablation Study

We conduct two sets of ablation studies to examine the effects of two proposed techniques used in Transformer-XL: the recurrence mechanism and the new positional encoding scheme.

The first study is performed on WikiText-103, which requires modeling long-term dependency. The results are reported in Table 6. Among the compared encoding schemes, Shaw et al. (2018) is relative, while Vaswani et al. (2017) and Al-Rfou et al. (2018) are absolute. “Full” and “half” losses refer to applying a cross entropy loss to all or the recent half positions in the segment. We found that absolute encodings only work well with half losses because half losses exclude positions with very short attention lengths during training for better generalization. Table 6 shows that both the recurrence mechanism and our encoding scheme are necessary to achieve the best performance, as well as generalizing to longer attention sequences during evaluation time. Although the backpropagation length during training is only 128, with the two techniques the attention length can be increased to 640 at test time. In the standard setting with 151M parameters, the perplexity decreases as the attention length increases.

| Remark | Recurrence | Encoding | Loss | PPL init | PPL best | Attn Len |
|---|---|---|---|---|---|---|
| Transformer-XL (128M) | ✓ | Ours | Full | 27.02 | 26.77 | 500 |
| − | ✓ | Shaw et al. (2018) | Full | 27.94 | 27.94 | 256 |
| − | ✓ | Ours | Half | 28.69 | 28.33 | 460 |
| − | ✗ | Ours | Full | 29.59 | 29.02 | 260 |
| − | ✗ | Ours | Half | 30.10 | 30.10 | 120 |
| − | ✗ | Shaw et al. (2018) | Full | 29.75 | 29.75 | 120 |
| − | ✗ | Shaw et al. (2018) | Half | 30.50 | 30.50 | 120 |
| − | ✗ | Vaswani et al. (2017) | Half | 30.97 | 30.97 | 120 |
| Transformer (128M)† | ✗ | Al-Rfou et al. (2018) | Half | 31.16 | 31.16 | 120 |
| Transformer-XL (151M) | ✓ | Ours | Full | 23.43 | 23.09 | 640 |
| | | | | | 23.16 | 450 |
| | | | | | 23.35 | 300 |
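The difference between the "full" and "half" losses described above can be illustrated with a minimal Python sketch. It assumes that the per-position cross-entropy values of one segment are already available as a list ordered from oldest to most recent position; the function name `segment_loss` and the toy numbers are hypothetical and are not taken from the authors' code.

```python
def segment_loss(token_losses, mode="full"):
    """Average per-position cross entropy over a segment.

    mode="full": every position in the segment contributes.
    mode="half": only the most recent half contributes, so the early
    positions, which see very little context, are excluded.
    """
    n = len(token_losses)
    if mode == "full":
        kept = token_losses
    elif mode == "half":
        kept = token_losses[n // 2:]
    else:
        raise ValueError("mode must be 'full' or 'half'")
    return sum(kept) / len(kept)


# Hypothetical per-position losses: early positions have less context
# available and are therefore assumed to be harder to predict.
toy_losses = [4.0, 3.0, 2.0, 1.0]
print(segment_loss(toy_losses, "full"))
print(segment_loss(toy_losses, "half"))
```

In this toy setting the half loss ignores the first two positions, which mirrors the explanation that half losses exclude positions with very short attention lengths during training.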

Since the recurrence mechanism costs additional memory, we also compare Transformer-XL with baselines under the same GPU memory constraints. As shown in Table 10 in Appendix A, despite using a shorter backpropagation length, Transformer-XL remains superior to the baselines.

The second study aims to isolate the effect of resolving the context fragmentation problem from the benefit of capturing a longer context. To achieve this, we deliberately choose a dataset that does not require long-term dependency, so that any improvement from establishing the recurrence can be attributed to solving the context fragmentation. Specifically, we perform this controlled experiment on the One Billion Word dataset, which can only benefit from removing the context fragmentation. We train a 20-layer Transformer-XL with ∼0.3B parameters for 400K steps. As shown in Table 7, using segment-level recurrence substantially improves performance even when long-term dependency is not needed, which is consistent with our previous discussion that the recurrence mechanism resolves the context fragmentation problem. Moreover, our relative positional encodings are also superior to those of Shaw et al. (2018) on short sequences.

| Method | PPL |
|---|---|
| Ours | 25.2 |
| With Shaw et al. (2018) encodings | 25.7 |
| Without recurrence | 27.1 |

4.3 Relative Effective Context Length

Khandelwal et al. (2018) proposed a method to evaluate the Effective Context Length (ECL) of a sequence model. ECL is the longest length to which increasing the context span would lead to a gain of more than a threshold. However, ECL ignores the fact that it is harder to get improvement when a model already achieves a lower perplexity using only a shorter context, and thus it is not suitable for fair comparison among multiple models. We instead propose a new metric called Relative Effective Context Length (RECL). RECL is defined on a model group instead of a single model, and the gain of a long context is measured by the relative improvement over the best short-context model. As such, the model group shares the same baseline to enable fair comparison. RECL also has a parameter $r$, which constrains the comparison to the top-$r$ hard examples. See Appendix C for more details about RECL. As shown in Table 8, Transformer-XL manages to model dependency of 900 words long on average with $r = 0.1$. The RECL of Transformer-XL is 80% and 450% longer than that of recurrent networks and Transformer, respectively. Both the recurrence mechanism and our positional encodings contribute to a longer RECL. This further substantiates our argument that Transformer-XL is able to model longer-term dependency.

| Model | r = 0.1 | r = 0.5 | r = 1.0 |
|---|---|---|---|
| Transformer-XL 151M | 900 | 800 | 700 |
| QRNN | 500 | 400 | 300 |
| LSTM | 400 | 300 | 200 |
| Transformer-XL 128M | 700 | 600 | 500 |
| − use Shaw et al. (2018) encoding | 400 | 400 | 300 |
| − remove recurrence | 300 | 300 | 300 |
| Transformer | 128 | 128 | 128 |
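The idea of a shared baseline can be made concrete with a simplified Python sketch. It is not the Appendix C definition: it ignores the top-$r$ restriction, assumes each model's average loss has already been measured at a few context lengths, and stops at the first context extension whose relative gain falls below the threshold. The function names, the model labels `A` and `B`, and all loss values are hypothetical.

```python
def relative_gain(group_losses, model, c_short, c_long):
    """Gain of `model` at context c_long relative to the best loss
    that any model in the group reaches at the shorter context c_short."""
    baseline = min(losses[c_short] for losses in group_losses.values())
    gain = max(0.0, baseline - group_losses[model][c_long])
    return gain / baseline


def simplified_recl(group_losses, model, lengths, threshold):
    """Longest context length that still yields a relative gain above
    `threshold` when extending from the previous length in `lengths`."""
    best = lengths[0]
    for c_short, c_long in zip(lengths, lengths[1:]):
        if relative_gain(group_losses, model, c_short, c_long) > threshold:
            best = c_long
        else:
            break
    return best


# Hypothetical average losses, indexed by context length.
group = {
    "A": {100: 4.0, 200: 3.6, 400: 3.5, 800: 3.49},
    "B": {100: 3.8, 200: 3.7, 400: 3.69, 800: 3.69},
}
lengths = [100, 200, 400, 800]
print(simplified_recl(group, "A", lengths, threshold=0.01))
print(simplified_recl(group, "B", lengths, threshold=0.01))
```

Because both models are compared against the same short-context baseline, a model that is already strong with little context is not penalized for having less room to improve, which is the motivation given for RECL over ECL.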

4.4. Generated Text

Trained only on WikiText-103 which is medium-sized, Transformer-XL is already able to generate relatively coherent articles with thousands of tokens without manual cherry picking, despite minor flaws. Please refer to Appendix E for samples.

4.5 Evaluation Speed

Finally, we compare the evaluation speed of our model with the vanilla Transformer model (Al-Rfou et al., 2018). As shown in Table 9, due to the state reuse scheme, Transformer-XL achieves up to a 1,874-fold speedup during evaluation.

| Attn Len | How much Al-Rfou et al. (2018) is slower |
|---|---|
| 3,800 | 1,874x |
| 2,800 | 1,409x |
| 1,800 | 773x |
| 800 | 363x |

5 CONCLUSIONS

We propose a novel architecture, Transformer-XL, for language modeling with self-attention architectures beyond a fixed-length context. Our main technical contributions include introducing the notion of recurrence in a purely self-attentive model and deriving a novel positional encoding scheme. These two techniques form a complete set of solutions, as any one of them alone does not address the issue of fixed-length contexts. Transformer-XL is the first self-attention model that achieves substantially better results than RNNs on both character-level and word-level language modeling. Transformer-XL is also able to model longer-term dependency than RNNs and Transformer, and achieves substantial speedup during evaluation compared to vanilla Transformers.

A Ablation Study with Memory Constraints

Table 10 compares Transformer-XL with the baseline under the same memory budget. Transformer-XL still outperforms the baseline even with a shorter backpropagation length.

| Backprop Len | Recurrence | Encoding | Loss | pplx best | pplx init | Attn Len |
|---|---|---|---|---|---|---|
| 128 | ✓ | Ours | Full | 26.77 | 27.02 | 500 |
| 128 | ✓ | Ours | Partial | 28.33 | 28.69 | 460 |
| 176 | ✗ | Ours | Full | 27.98 | 28.43 | 400 |
| 172 | ✗ | Ours | Partial | 28.83 | 28.83 | 120 |

B Efficient Computation of the Attention with Relative Positional Embedding

As we discussed in Section 3.3, the naive way of computing $\mathbf{W}_{k,R}\mathbf{R}_{i-j}$ for all pairs $(i, j)$ is subject to a quadratic cost. Here, we present a simple method with only a linear cost. Firstly, notice that the relative distance $i - j$ can only be an integer from $0$ to $M + L - 1$, where $M$ and $L$ are the memory length and segment length respectively. Hence, the rows of the matrix

$ \mathbf{Q}:=\left[\begin{array}{c} \mathbf{R}_{M+L-1}^{\top} \\ \mathbf{R}_{M+L-2}^{\top} \\ \vdots \\ \mathbf{R}_{1}^{\top} \\ \mathbf{R}_{0}^{\top} \end{array}\right] \mathbf{W}_{k, R}^{\top}=\left[\begin{array}{c} {\left[\mathbf{W}_{k, R} \mathbf{R}_{M+L-1}\right]^{\top}} \\ {\left[\mathbf{W}_{k, R} \mathbf{R}_{M+L-2}\right]^{\top}} \\ \vdots \\ {\left[\mathbf{W}_{k, R} \mathbf{R}_{1}\right]^{\top}} \\ {\left[\mathbf{W}_{k, R} \mathbf{R}_{0}\right]^{\top}} \end{array}\right] \in \mathbb{R}^{(M+L) \times d} $

consist of all possible vector outputs of $\mathbf{W}_{k,R}\mathbf{R}_{i-j}$ for any $(i, j)$. Note that we have defined $\mathbf{Q}$ in a reversed order, i.e., $\mathbf{Q}_k = \mathbf{W}_{k,R}\mathbf{R}_{M+L-1-k}$, to make further discussion easier.

Next, we collect the term (b) for all possible $i, j$ into the following $L \times (M + L)$ matrix

$ \begin{aligned} \mathbf{B} &=\left[\begin{array}{cccccc} q_{0}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{M} & \cdots & q_{0}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{0} & 0 & \cdots & 0 \\ q_{1}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{M+1} & \cdots & q_{1}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{1} & q_{1}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{0} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\ q_{L-1}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{M+L-1} & \cdots & q_{L-1}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{L-1} & q_{L-1}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{L-2} & \cdots & q_{L-1}^{\top} \mathbf{W}_{k, R} \mathbf{R}_{0} \end{array}\right] \\ &=\left[\begin{array}{cccccc} q_{0}^{\top} \mathbf{Q}_{L-1} & \cdots & q_{0}^{\top} \mathbf{Q}_{M+L-1} & 0 & \cdots & 0 \\ q_{1}^{\top} \mathbf{Q}_{L-2} & \cdots & q_{1}^{\top} \mathbf{Q}_{M+L-2} & q_{1}^{\top} \mathbf{Q}_{M+L-1} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\ q_{L-1}^{\top} \mathbf{Q}_{0} & \cdots & q_{L-1}^{\top} \mathbf{Q}_{M} & q_{L-1}^{\top} \mathbf{Q}_{M+1} & \cdots & q_{L-1}^{\top} \mathbf{Q}_{M+L-1} \end{array}\right] \end{aligned} $

Then, we further define

$ \widetilde{\mathbf{B}}=\mathbf{q} \mathbf{Q}^{\top}=\left[\begin{array}{cccccc} q_{0}^{\top} \mathbf{Q}_{0} & \cdots & q_{0}^{\top} \mathbf{Q}_{M} & q_{0}^{\top} \mathbf{Q}_{M+1} & \cdots & q_{0}^{\top} \mathbf{Q}_{M+L-1} \\ q_{1}^{\top} \mathbf{Q}_{0} & \cdots & q_{1}^{\top} \mathbf{Q}_{M} & q_{1}^{\top} \mathbf{Q}_{M+1} & \cdots & q_{1}^{\top} \mathbf{Q}_{M+L-1} \\ \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\ q_{L-1}^{\top} \mathbf{Q}_{0} & \cdots & q_{L-1}^{\top} \mathbf{Q}_{M} & q_{L-1}^{\top} \mathbf{Q}_{M+1} & \cdots & q_{L-1}^{\top} \mathbf{Q}_{M+L-1} \end{array}\right] $

Now, it is easy to see an immediate relationship between $\mathbf{B}$ and $\widetilde{\mathbf{B}}$, where the $i$-th row of $\mathbf{B}$ is simply a left-shifted version of the $i$-th row of $\widetilde{\mathbf{B}}$. Hence, the computation of $\mathbf{B}$ only requires a matrix multiplication $\mathbf{q}\mathbf{Q}^{\top}$ to compute $\widetilde{\mathbf{B}}$ and then a set of left-shifts.

Similarly, we can collect all term (d) for all possible $i$, $j$ into another $L \times (M + L)$ matrix $\mathbf{D}$,

$ \mathbf{D}=\left[\begin{array}{cccccc} v^{\top} \mathbf{Q}_{L-1} & \cdots & v^{\top} \mathbf{Q}_{M+L-1} & 0 & \cdots & 0 \\ v^{\top} \mathbf{Q}_{L-2} & \cdots & v^{\top} \mathbf{Q}_{M+L-2} & v^{\top} \mathbf{Q}_{M+L-1} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\ v^{\top} \mathbf{Q}_{0} & \cdots & v^{\top} \mathbf{Q}_{M} & v^{\top} \mathbf{Q}_{M+1} & \cdots & v^{\top} \mathbf{Q}_{M+L-1} \end{array}\right] $

Then, we can follow the same procedure to define

$ \tilde{\mathbf{d}}=[\mathbf{Q} v]^{\top}=\left[\begin{array}{llllll} v^{\top} \mathbf{Q}_{0} & \cdots & v^{\top} \mathbf{Q}_{M} & v^{\top} \mathbf{Q}_{M+1} & \cdots & v^{\top} \mathbf{Q}_{M+L-1} \end{array}\right] $

Again, each row of $\mathbf{D}$ is simply a left-shifted version of $\tilde{\mathbf{d}}$. Hence, the main computation cost comes from the matrix-vector multiplication $\tilde{\mathbf{d}}=[\mathbf{Q} v]^{\top}$, which is no longer expensive.
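The shift trick for terms (b) and (d) can be checked numerically with a small pure-Python sketch. It is an illustration under stated assumptions rather than the authors' implementation: vectors are plain lists, `R[k]` holds the embedding for relative distance $k$, `W` stands for $\mathbf{W}_{k,R}$, and every function name is hypothetical. Reading the matrices above, row $i$ is shifted left by $L-1-i$ entries and padded with zeros on the right.

```python
import random


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def matvec(W, x):
    """Matrix-vector product W x for a list-of-rows matrix."""
    return [dot(row, x) for row in W]


def project_distances(R, W):
    """Build Q in reversed order: Q[k] = W R[M+L-1-k]."""
    n = len(R)
    return [matvec(W, R[n - 1 - k]) for k in range(n)]


def left_shift_rows(rows, L):
    """Shift row i left by L-1-i entries, padding zeros on the right."""
    shifted = []
    for i, row in enumerate(rows):
        s = L - 1 - i
        shifted.append(row[s:] + [0.0] * s)
    return shifted


def term_b_shifted(q, R, W):
    """Term (b): one product q Q^T followed by row-wise left shifts."""
    Q = project_distances(R, W)
    B_tilde = [[dot(q_i, Q_k) for Q_k in Q] for q_i in q]
    return left_shift_rows(B_tilde, len(q))


def term_d_shifted(v, R, W, L):
    """Term (d): one product Q v, reused (shifted) for every row."""
    Q = project_distances(R, W)
    d_tilde = [dot(v, Q_k) for Q_k in Q]
    return left_shift_rows([d_tilde] * L, L)


def term_b_naive(q, R, W):
    """Reference: recompute W R_{i-j} for every visible (i, j) pair."""
    L, M = len(q), len(R) - len(q)
    B = [[0.0] * (M + L) for _ in range(L)]
    for i in range(L):
        for j in range(M + i + 1):  # query i sees the memory and keys 0..i
            B[i][j] = dot(q[i], matvec(W, R[M + i - j]))
    return B


# Toy sizes and random values, chosen only for the check.
random.seed(0)
L, M, d = 3, 2, 4
rand_vec = lambda: [random.uniform(-1.0, 1.0) for _ in range(d)]
q = [rand_vec() for _ in range(L)]
R = [rand_vec() for _ in range(M + L)]
W = [rand_vec() for _ in range(d)]
v = rand_vec()

B_fast, B_ref = term_b_shifted(q, R, W), term_b_naive(q, R, W)
max_diff = max(abs(a - b) for ra, rb in zip(B_fast, B_ref) for a, b in zip(ra, rb))
print("max |B_fast - B_ref| =", max_diff)
```

The shifted version applies `W` to only $M + L$ embeddings, whereas the naive loop applies it once per visible $(i, j)$ pair, which is the saving the appendix describes.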

C Details About RECL

D Attention Visualization

E Generated Text

Footnotes

References

- (Al-Rfou et al., 2018) ⇒ Rami Al-Rfou, Dokook Choe, Noah Constant, Mandy Guo, and Llion Jones. (2019). “Character-level Language Modeling with Deeper Self-attention.” In: Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI2019), The Thirty-First Innovative Applications of Artificial Intelligence Conference (IAAI 2019), The Ninth AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI 2019).

- Rami Al-Rfou, Dokook Choe, Noah Constant, Mandy Guo, and Llion Jones. Character-level language modeling with deeper self-attention. arXiv preprint arXiv:1808.04444, 2018.
- Alexei Baevski and Michael Auli. Adaptive input representations for neural language modeling. arXiv preprint arXiv:1809.10853, 2018.
- Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473, 2014.
- Shaojie Bai, J Zico Kolter, and Vladlen Koltun. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv preprint arXiv:1803.01271, 2018.
- Yoshua Bengio, Réjean Ducharme, Pascal Vincent, and Christian Jauvin. A neural probabilistic language model. Journal of machine learning research, 3(Feb):1137–1155, 2003.
- Ciprian Chelba, Tomas Mikolov, Mike Schuster, Qi Ge, Thorsten Brants, Phillipp Koehn, and Tony Robinson. One billion word benchmark for measuring progress in statistical language modeling. arXiv preprint arXiv:1312.3005, 2013.
- Junyoung Chung, Sungjin Ahn, and Yoshua Bengio. Hierarchical multiscale recurrent neural networks. arXiv preprint arXiv:1609.01704, 2016.
- Tim Cooijmans, Nicolas Ballas, César Laurent, Çağlar Gülçehre, and Aaron Courville. Recurrent batch normalization. arXiv preprint arXiv:1603.09025, 2016.
- Andrew M Dai and Quoc V Le. Semi-supervised sequence learning. In Advances in neural information processing systems, pp. 3079–3087, 2015.
- Yann N Dauphin, Angela Fan, Michael Auli, and David Grangier. Language modeling with gated convolutional networks. arXiv preprint arXiv:1612.08083, 2016.
- Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.
- Adji B Dieng, Chong Wang, Jianfeng Gao, and John Paisley. Topicrnn: A recurrent neural network with long-range semantic dependency. arXiv preprint arXiv:1611.01702, 2016.
- Yarin Gal and Zoubin Ghahramani. A theoretically grounded application of dropout in recurrent neural networks. In Advances in Neural Information Processing Systems, pp. 1019–1027, 2016.
- Edouard Grave, Armand Joulin, Moustapha Cissé, David Grangier, and Hervé Jégou. Efficient softmax approximation for gpus. arXiv preprint arXiv:1609.04309, 2016a.
- Edouard Grave, Armand Joulin, and Nicolas Usunier. Improving neural language models with a continuous cache. arXiv preprint arXiv:1612.04426, 2016b.
- Alex Graves. Generating sequences with recurrent neural networks. arXiv preprint arXiv:1308.0850, 2013.
- Alex Graves, Greg Wayne, and Ivo Danihelka. Neural turing machines. arXiv preprint arXiv:1410.5401, 2014.
- David Ha, Andrew Dai, and Quoc V Le. Hypernetworks. arXiv preprint arXiv:1609.09106, 2016.
- Sepp Hochreiter and Jürgen Schmidhuber. Long short-term memory. Neural computation, 9(8):1735–1780, 1997.
- Sepp Hochreiter, Yoshua Bengio, Paolo Frasconi, Jürgen Schmidhuber, et al. Gradient flow in recurrent nets: the difficulty of learning long-term dependencies, 2001.
- Cheng-Zhi Anna Huang, Ashish Vaswani, Jakob Uszkoreit, Noam Shazeer, Curtis Hawthorne, Andrew M Dai, Matthew D Hoffman, and Douglas Eck. An improved relative self-attention mechanism for transformer with application to music generation. arXiv preprint arXiv:1809.04281, 2018.
- Hakan Inan, Khashayar Khosravi, and Richard Socher. Tying word vectors and word classifiers: A loss framework for language modeling. arXiv preprint arXiv:1611.01462, 2016.
- Yangfeng Ji, Trevor Cohn, Lingpeng Kong, Chris Dyer, and Jacob Eisenstein. Document context language models. arXiv preprint arXiv:1511.03962, 2015.
- Rafal Jozefowicz, Oriol Vinyals, Mike Schuster, Noam Shazeer, and Yonghui Wu. Exploring the limits of language modeling. arXiv preprint arXiv:1602.02410, 2016.
- Nal Kalchbrenner, Lasse Espeholt, Karen Simonyan, Aaron van den Oord, Alex Graves, and Koray Kavukcuoglu. Neural machine translation in linear time. arXiv preprint arXiv:1610.10099, 2016.
- Sekitoshi Kanai, Yasuhiro Fujiwara, Yuki Yamanaka, and Shuichi Adachi. Sigsoftmax: Reanalysis of the softmax bottleneck. arXiv preprint arXiv:1805.10829, 2018.
- Nan Rosemary Ke, Anirudh Goyal ALIAS PARTH GOYAL, Olexa Bilaniuk, Jonathan Binas, Michael C Mozer, Chris Pal, and Yoshua Bengio. Sparse attentive backtracking: Temporal credit assignment through reminding. In Advances in Neural Information Processing Systems, pp. 7650–7661, 2018.
- Urvashi Khandelwal, He He, Peng Qi, and Dan Jurafsky. Sharp nearby, fuzzy far away: How neural language models use context. arXiv preprint arXiv:1805.04623, 2018.
- Byron Knoll. cmix v13. http://www.byronknoll.com/cmix.html, 2017.
- Jan Koutnik, Klaus Greff, Faustino Gomez, and Juergen Schmidhuber. A clockwork rnn. arXiv preprint arXiv:1402.3511, 2014.
- Ben Krause, Liang Lu, Iain Murray, and Steve Renals. Multiplicative lstm for sequence modelling. arXiv preprint arXiv:1609.07959, 2016.
- Oleksii Kuchaiev and Boris Ginsburg. Factorization tricks for lstm networks. arXiv preprint arXiv:1703.10722, 2017.
- Quoc V Le, Navdeep Jaitly, and Geoffrey E Hinton. A simple way to initialize recurrent networks of rectified linear units. arXiv preprint arXiv:1504.00941, 2015.
- Shuai Li, Wanqing Li, Chris Cook, Ce Zhu, and Yanbo Gao. Independently recurrent neural network (indrnn): Building a longer and deeper rnn. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5457–5466, 2018.
- Hanxiao Liu, Karen Simonyan, and Yiming Yang. Darts: Differentiable architecture search. arXiv preprint arXiv:1806.09055, 2018.
- MultiMedia LLC. Large text compression benchmark. 2009.
- Gábor Melis, Charles Blundell, Tomáš Kočiský, Karl Moritz Hermann, Chris Dyer, and Phil Blunsom. Pushing the bounds of dropout. arXiv preprint arXiv:1805.09208, 2018.
- Stephen Merity, Caiming Xiong, James Bradbury, and Richard Socher. Pointer sentinel mixture models. arXiv preprint arXiv:1609.07843, 2016.
- Stephen Merity, Nitish Shirish Keskar, and Richard Socher. Regularizing and optimizing lstm language models. arXiv preprint arXiv:1708.02182, 2017.
- Stephen Merity, Nitish Shirish Keskar, and Richard Socher. An analysis of neural language modeling at multiple scales. arXiv preprint arXiv:1803.08240, 2018.
- Tomas Mikolov and Geoffrey Zweig. Context dependent recurrent neural network language model. SLT, 12(234-239):8, 2012.
- Tomáš Mikolov, Martin Karafiát, Lukáš Burget, Jan Černocký, and Sanjeev Khudanpur. Recurrent neural network based language model. In Eleventh Annual Conference of the International Speech Communication Association, 2010.
- Tomas Mikolov, Armand Joulin, Sumit Chopra, Michael Mathieu, and Marc'Aurelio Ranzato. Learning longer memory in recurrent neural networks. arXiv preprint arXiv:1412.7753, 2014.
- Frederic Morin and Yoshua Bengio. Hierarchical probabilistic neural network language model. In Aistats, volume 5, pp. 246–252. Citeseer, 2005.
- Asier Mujika, Florian Meier, and Angelika Steger. Fast-slow recurrent neural networks. In Advances in Neural Information Processing Systems, pp. 5915–5924, 2017.
- Razvan Pascanu, Tomas Mikolov, and Yoshua Bengio. Understanding the exploding gradient problem. CoRR, abs/1211.5063, 2012.
- Matthew E Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, and Luke Zettlemoyer. Deep contextualized word representations. arXiv preprint arXiv:1802.05365, 2018.
- Hieu Pham, Melody Y Guan, Barret Zoph, Quoc V Le, and Jeff Dean. Efficient neural architecture search via parameter sharing. arXiv preprint arXiv:1802.03268, 2018.
- Ofir Press and Lior Wolf. Using the output embedding to improve language models. arXiv preprint arXiv:1608.05859, 2016.
- Alec Radford, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever. Improving language understanding by generative pre-training. URL https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf, 2018.
- Jack W Rae, Chris Dyer, Peter Dayan, and Timothy P Lillicrap. Fast parametric learning with activation memorization. arXiv preprint arXiv:1803.10049, 2018.
- Peter Shaw, Jakob Uszkoreit, and Ashish Vaswani. Self-attention with relative position representations. arXiv preprint arXiv:1803.02155, 2018.
- Noam Shazeer, Joris Pelemans, and Ciprian Chelba. Skip-gram language modeling using sparse non-negative matrix probability estimation. arXiv preprint arXiv:1412.1454, 2014.
- Noam Shazeer, Azalia Mirhoseini, Krzysztof Maziarz, Andy Davis, Quoc Le, Geoffrey Hinton, and Jeff Dean. Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. arXiv preprint arXiv:1701.06538, 2017.
- Noam Shazeer, Youlong Cheng, Niki Parmar, Dustin Tran, Ashish Vaswani, Penporn Koanantakool, Peter Hawkins, HyoukJoong Lee, Mingsheng Hong, Cliff Young, et al. Mesh-tensorflow: Deep learning for supercomputers. In Advances in Neural Information Processing Systems, pp. 10434–10443, 2018.
- Trieu H Trinh, Andrew M Dai, Thang Luong, and Quoc V Le. Learning longer-term dependencies in rnns with auxiliary losses. arXiv preprint arXiv:1803.00144, 2018.
- Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in Neural Information Processing Systems, pp. 5998–6008, 2017.
- Tian Wang and Kyunghyun Cho. Larger-context language modelling. arXiv preprint arXiv:1511.03729, 2015.
- Wenlin Wang, Zhe Gan, Wenqi Wang, Dinghan Shen, Jiaji Huang, Wei Ping, Sanjeev Satheesh, and Lawrence Carin. Topic compositional neural language model. arXiv preprint arXiv:1712.09783, 2017.
- Jason Weston, Sumit Chopra, and Antoine Bordes. Memory networks. arXiv preprint arXiv:1410.3916, 2014.
- Yuhuai Wu, Saizheng Zhang, Ying Zhang, Yoshua Bengio, and Ruslan R Salakhutdinov. On multiplicative integration with recurrent neural networks. In Advances in neural information processing systems, pp. 2856–2864, 2016.
- Zhilin Yang, Zihang Dai, Ruslan Salakhutdinov, and William W Cohen. Breaking the softmax bottleneck: A high-rank rnn language model. arXiv preprint arXiv:1711.03953, 2017.
- Wojciech Zaremba, Ilya Sutskever, and Oriol Vinyals. Recurrent neural network regularization. arXiv preprint arXiv:1409.2329, 2014.
- Julian Georg Zilly, Rupesh Kumar Srivastava, Jan Koutník, and Jürgen Schmidhuber. Recurrent highway networks. arXiv preprint arXiv:1607.03474, 2016.
- Barret Zoph and Quoc V Le. Neural architecture search with reinforcement learning. arXiv preprint arXiv:1611.01578, 2016.


| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2019 TransformerXLAttentiveLanguageM | Yiming Yang, Jaime G. Carbonell (1953 – 2020), Ruslan Salakhutdinov, Quoc V. Le, Zhilin Yang, Zihang Dai | | | Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context | | | | | | 2019 |