2005 RepairingConcavitiesinROCCurves

- (Flach & Wu, 2005) ⇒ Peter A. Flach, and Shaomin Wu. (2005). “Repairing Concavities in ROC Curves.” In: Proceedings of the 19th International Joint Conference on Artificial Intelligence (IJCAI, 2005).

Subject Headings: ROC Convex Hull, Convex Hull Algorithm.

Notes

- Other Version(s) and URL(s):

- Resource(s):

Cited By

- Google Scholar: ~ 121 Citations.

- ACM DL: ~ 8 Citations.

- Semantic Scholar: ~ 72 Citations.

- MS Academic: ~ 115 Citations.

Quotes

Abstract

In this paper we investigate methods to detect and repair concavities in ROC curves by manipulating model predictions. The basic idea is that, if a point or a set of points lies below the line spanned by two other points in ROC space, we can use this information to repair the concavity. This effectively builds a hybrid model combining the two better models with an inversion of the poorer models; in the case of ranking classifiers, it means that certain intervals of the scores are identified as unreliable and candidates for inversion. We report very encouraging results on 23 UCI data sets, particularly for naive Bayes where the use of two validation folds yielded significant improvements on more than half of them, with only one loss.

1 Introduction

There is an increasing amount of work on model selection and model combination in the machine learning and data mining literature: for instance, model selection based on ROC space (Provost and Fawcett, 2001), model combination by means of bagging (Breiman, 1996), boosting (Freund and Schapire, 1996), arcing (Breiman, 1998), the mixture of experts method (Jacobs et al., 1991), to name just a few. A review on ensembles of learning machines can be found in (Valentini and Masulli, 2002).

Typically, these methods assume a set of given models with fixed performance, and the issue is how best to combine these models to obtain a better ensemble model. There is no attempt to analyse the performance of the given models to determine a region where performance is sub-standard. This paper investigates methods to improve given models using ROC analysis.

ROC (Receiver Operating Characteristic) analysis is usually associated with classifier selection when both class and misclassification cost distribution are unknown at training time. However, ROC analysis has a much broader scope that is not limited to cost-sensitive classification. A categorical classifier is mapped to a point in ROC space by means of its false positive rate on the X-axis and its true positive rate on the Y-axis. A probabilistic classifier results in a ROC curve, which aggregates its behaviour for all possible decision thresholds. The quality of a probabilistic classifier can be measured by the Area Under the ROC Curve (AUC), which measures how well the classifier separates the two classes without reference to a decision threshold. A good classifier should have a large AUC, and AUC=1 means that there is a decision threshold such that the corresponding categorical classifier has 100% accuracy.

We use the term model repair to denote approaches that modify given models in order to obtain better models. In contrast, ensemble methods produce hybrid models that leave the original models intact. An approach to model construction using ROC space is given in [ Blockeel and Struyf, 2002], where the authors identify and assemble parts of a decision tree that perform well in different areas of ROC space. Our approach in this paper is to identify ‘bad’ areas, or concavities, in a ROC curve and repair them by manipulating the corresponding low-quality predictions. The approach is experimentally validated using both naive Bayes and decision tree, but the approach has much wider scope as it can be applied to any classifier that computes class scores.

To illustrate the approach, we describe in Section 2 the RepairPoint algorithm that combines three models based on different thresholds of the same probabilistic classifier, and creates a new model which theoretically should improve upon the worst of the three models. In Section 3 we introduce the main algorithm RepairSection, that mirrors an entire concave region (a region of the curve that is below its convex hull). In Section 4 we present experimental results on 23 data sets from the UCI repository. Section 5 reviews some related work on model ensembles, gives the main conclusion and suggests further work.

2 Basics of repairing classifiers in ROC space

Assume that the confusion matrix of a classifier evaluated on a test set is as in Table 1. Then the true positive rate of the classifier is [math]\displaystyle{ a / (a + b) }[/math] and the false positive rate of the classifier is [math]\displaystyle{ c / (c + d) }[/math]. The point [math]\displaystyle{ (c / (c +]] d),a / (a + b)) }[/math] in the XY plane (i.e., ROC space) will be used to represent the performance of this classifier.

predicted positive predicted negative actual positive a b actual negative d Table 1: A confusion matrix.

If a model is under the ascending diagonal in ROC space, this means that it performs worse than random. Models A and B in Figure 1 are such worse-than-random models.

However, there is a very useful trick to obtain better-than random models: simply invert all predictions of the original model. This corresponds to exchanging the columns in the contingency table, leading to a new true positive rate of b / (a + b) = 1-a / (a + b), i.e. one minus the original true positive rate; similarly we obtain a new false positive rate of d / (c + d) = 1-c / (c + d). Geometrically, this corresponds to mirroring the original ROC point through the midpoint on the ascending diagonal.

Figure 1: By inverting their predictions, worse-than-random models A and B below the diagonal can be transformed into better-than-random models - A and - B above the diagonal.

Notice that the ascending diagonal really connects two classifiers: the classifier which always predicts negative in (0, 0), and the classifier which always predicts positive in (1, 1). This suggests that the above repair procedure can be generalised to line segments connecting arbitrary classifiers. For instance, consider Figure 2. Denote the sets of true and false positives of Model i by TPi and FPi, then we can construct Model 4 under the condition that TP1 � TP3 � TP2 and FP1 � FP3 � FP2. In particular, these inclusion constraints are satisfied if Models 1, 2 and 3 are obtained by setting thresholds on the same probabilistic model, which is what we assume throughout the paper.

…

3 Identifying and Repairing Concavities in a ROC Curve

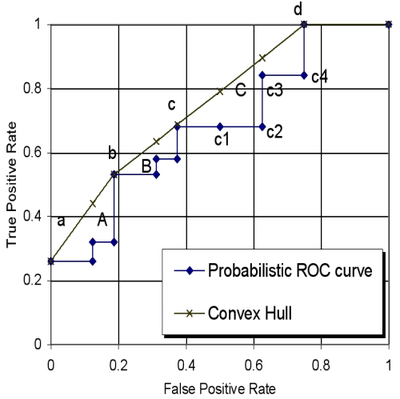

(...) Figure 3 shows both the ROC curve for a probabilistic model evaluated on a small test set[1] and its convex hull (Provost & Fawcett, 2001).

|

Four points of the ROC curve (point a, b, c and d) are located on the convex hull. Each of these points corresponds to a probability threshold, and thus each segment of the convex hull corresponds to a probability interval. For instance, the convex hull in Figure 3 has three segments corresponding to three disjoint probability intervals. (...) It is interesting to note that if this procedure is followed for all concavities, this corresponds to constructing the convex hull by discretising the probability scores. (...) by applying this algorithm to the model corresponding to threshold $c_2$, this point would be point-mirrored through the midpoint on line segment $c_d$ to the other side of the convex hull.

(...) The AUC of the repaired curve is therefore larger than both the AUC of the original curve and the AUC of its convex hull.

4 Experimental Evaluation

5 Discussion and Conclusions

Acknowledgments

Footnotes

- ↑ For illustration purposes, the test set contains only a few examples and the resolution of the ROC curve is low. In practical circumstances a step curve with much higher resolution is obtained.

References

;

| Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year | |

|---|---|---|---|---|---|---|---|---|---|---|

| 2005 RepairingConcavitiesinROCCurves | Peter A. Flach Shaomin Wu | Repairing Concavities in ROC Curves | 2005 |