2001 AMachLearnApprToCorefResOfNounPhrases

- (Soon et al., 2001) ⇒ Wee Meng Soon, Hwee Tou Ng, Daniel Chung Yong Lim. (2001). “A Machine Learning Approach to Coreference Resolution of Noun Phrases.” In: Computational Linguistics, 27(4). doi:10.1162/089120101753342653

Subject Headings: Supervised Entity Mention Coreference Resolution Algorithm, Decision Tree Algorithm, Coreference Resolution Algorithm; Coreference Resolution Task; Coreference Resolution System.

Notes

- It proposes a Pairwise Classification Coreference Resolution Algorithm.

- It claims to be the “first learning-based system that offers performance comparable to that of state-of-the-art nonlearning systems” on the MUC-6 and MUC-7 data sets (provided as part of the DARPA Message Understanding Conferences).

- It provides a comprehensive feature set, with explanations, which should prove useful.

- It tests performance with the C5 Algorithm.

- It refers to a Coreferent Entity Mention as a Markable.

Cited By

~345 http://scholar.google.com/scholar?cites=3195495080781519937

2008

- Xiaofeng Yang, Jian Su, and Chew Lim Tan. (2008). “A Twin-Candidate Model for Learning-based Anaphora Resolution.” Computational Linguistics 34:3, 327-356

- Regina Barzilay, and Mirella Lapata. (2008). “Modeling Local Coherence: An Entity-based Approach.” Computational Linguistics 34:1, 1-34

- http://scholar.google.com/scholar?q=%222001%22+A+Machine+Learning+Approach+to+Coreference+Resolution+of+Noun+Phrases

- http://dl.acm.org/citation.cfm?id=972597.972602&preflayout=flat#citedby

Quotes

Abstract

In this paper, we present a learning approach to coreference resolution of noun phrases in unrestricted text. The approach learns from a small, annotated corpus and the task includes resolving not just a certain type of noun phrase (e.g., pronouns) but rather general noun phrases. It also does not restrict the entity types of the noun phrases; that is, coreference is assigned whether they are of “organization,” “person,” or other types. We evaluate our approach on common data sets (namely, the MUC-6 and MUC-7 coreference corpora) and obtain encouraging results, indicating that on the general noun phrase coreference task, the learning approach holds promise and achieves accuracy comparable to that of nonlearning approaches. Our system is the first learning-based system that offers performance comparable to that of state-of-the-art nonlearning systems on these data sets.

1. Introduction

Coreference resolution is the process of determining whether two expressions in natural language refer to the same entity in the world. It is an important subtask in natural language processing systems. In particular, information extraction (IE) systems like those built in the DARPA Message Understanding Conferences (Chinchor 1998; Sundheim 1995) have revealed that coreference resolution is such a critical component of IE systems that a separate coreference subtask has been defined and evaluated since MUC-6 (MUC-6 1995).

In this paper, we focus on the task of determining coreference relations as defined in MUC-6 (MUC-6 1995) and MUC-7 (MUC-7 1997). Specifically, a coreference relation denotes an identity of reference and holds between two textual elements known as markables, which can be definite noun phrases, demonstrative noun phrases, proper names, appositives, sub-noun phrases that act as modifiers, pronouns, and so on. Thus, our coreference task resolves general noun phrases and is not restricted to a certain type of noun phrase such as pronouns. Also, we do not place any restriction on the possible candidate markables; that is, all markables, whether they are “organization,” “person,” or other entity types, are considered. The ability to link coreferring noun phrases both within and across sentences is critical to discourse analysis and language understanding in general.

2. A Machine Learning Approach to Coreference Resolution

We adopt a corpus-based, machine learning approach to noun phrase coreference resolution. This approach requires a relatively small corpus of training documents that have been annotated with coreference chains of noun phrases. All possible markables in a training document are determined by a pipeline of language-processing modules, and training examples in the form of feature vectors are generated for appropriate pairs of markables. These training examples are then given to a learning algorithm to build a classifier. To determine the coreference chains in a new document, all markables are determined and potential pairs of coreferring markables are presented to the classifier, which decides whether the two markables actually corefer. We give the details of these steps in the following subsections.
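The pairwise classification idea in this paragraph can be sketched roughly as follows. This is only an illustration, not the authors' implementation: the `Markable` representation, the three features in `pair_features`, the rule-based `toy_classifier` (standing in for a learned decision tree), and the choice to link each markable to the nearest preceding markable the classifier accepts are all assumptions made for the example.

```python
from typing import Callable, Dict, List

# Hypothetical markable representation: surface text plus head word.
Markable = Dict[str, str]


def pair_features(antecedent: Markable, anaphor: Markable, distance: int) -> dict:
    """Build an illustrative feature vector for one pair of markables."""
    return {
        "string_match": antecedent["text"].lower() == anaphor["text"].lower(),
        "head_match": antecedent["head"].lower() == anaphor["head"].lower(),
        "distance": distance,
    }


def toy_classifier(features: dict) -> bool:
    """Stand-in for a trained classifier (assumption: simple matching rule)."""
    return features["string_match"] or features["head_match"]


def resolve(markables: List[Markable],
            classify: Callable[[dict], bool]) -> List[List[int]]:
    """Group markable indices into coreference chains via pairwise decisions."""
    chain_of = list(range(len(markables)))  # each markable starts in its own chain
    for j in range(1, len(markables)):
        # Assumed search order: nearest preceding markable first.
        for i in range(j - 1, -1, -1):
            if classify(pair_features(markables[i], markables[j], j - i)):
                chain_of[j] = chain_of[i]  # join the antecedent's chain
                break
    chains: Dict[int, List[int]] = {}
    for index, chain_id in enumerate(chain_of):
        chains.setdefault(chain_id, []).append(index)
    # Keep only chains that actually link two or more markables.
    return [members for members in chains.values() if len(members) > 1]


example = [
    {"text": "IBM", "head": "IBM"},
    {"text": "the company", "head": "company"},
    {"text": "ibm", "head": "ibm"},
    {"text": "a company", "head": "company"},
]
chains = resolve(example, toy_classifier)
```

In a real system the rule in `toy_classifier` would be replaced by a classifier trained on feature vectors generated from the annotated corpus.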

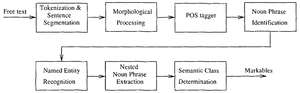

2.1 Determination of Markables

A prerequisite for coreference resolution is to obtain most, if not all, of the possible markables in a raw input text. To determine the markables, a pipeline of natural language processing (NLP) modules is used, as shown in Figure 1. They consist of tokenization, sentence segmentation, morphological processing, part-of-speech tagging, noun phrase identification, named entity recognition, nested noun phrase extraction, and semantic class determination. As far as coreference resolution is concerned, the goal of these NLP modules is to determine the boundary of the markables, and to provide the necessary information about each markable for subsequent generation of features in the training examples.

Our part-of-speech tagger is a standard statistical tagger based on the Hidden Markov Model (HMM) (Church 1988). Similarly, we built a statistical HMM-based noun phrase identification module that determines the noun phrase boundaries solely based on the part-of-speech tags assigned to the words in a sentence. We also implemented a module that recognizes MUC-style named entities, that is, organization, person, location, date, time, money, and percent. Our named entity recognition module uses the HMM approach of Bikel, Schwartz, and Weischedel (1999), which learns from a tagged corpus of named entities. That is, our part-of-speech tagger, noun phrase identification module, and named entity recognition module are all based on HMMs and learn from corpora tagged with parts of speech, noun phrases, and named entities, respectively. Next, both the noun phrases determined by the noun phrase identification module and the named entities are merged in such a way that if the noun phrase overlaps with a named entity, the noun phrase boundaries will be adjusted to subsume the named entity.
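The merging step described at the end of this paragraph might look something like the sketch below. It assumes spans are half-open token offsets `(start, end)`, and it assumes that named entities overlapping no noun phrase are kept as markables in their own right; neither detail is stated in the excerpt, and the function names are hypothetical.

```python
from typing import List, Tuple

# Assumed representation: half-open token offsets [start, end).
Span = Tuple[int, int]


def overlaps(a: Span, b: Span) -> bool:
    """True when the two half-open spans share at least one token."""
    return a[0] < b[1] and b[0] < a[1]


def merge_spans(noun_phrases: List[Span], named_entities: List[Span]) -> List[Span]:
    """Widen each noun phrase so that it subsumes any overlapping named entity."""
    merged = set()
    absorbed = set()
    for start, end in noun_phrases:
        for entity in named_entities:
            if overlaps((start, end), entity):
                # Adjust the noun phrase boundaries to cover the entity.
                start, end = min(start, entity[0]), max(end, entity[1])
                absorbed.add(entity)
        merged.add((start, end))
    # Assumption: entities that overlap no noun phrase remain as markables.
    merged.update(e for e in named_entities if e not in absorbed)
    return sorted(merged)


markable_spans = merge_spans([(0, 2), (5, 7)], [(1, 4), (9, 10)])
```

Here the noun phrase `(0, 2)` is widened to `(0, 4)` because it overlaps the named entity `(1, 4)`, while `(5, 7)` is left unchanged.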

6. Related Work

There is a long tradition of work on coreference resolution within computational linguistics, but most of it was not subject to empirical evaluation until recently. Among the papers that have reported quantitative evaluation results, most are not based on learning from an annotated corpus (Baldwin 1997; Kameyama 1997; Lappin and Leass 1994; Mitkov 1997).

To our knowledge, the research efforts of Aone and Bennett (1995), Ge, Hale, and Charniak (1998), Kehler (1997), McCarthy and Lehnert (1995), Fisher et al. (1995), and McCarthy (1996) are the only ones that are based on learning from an annotated corpus.

Ge, Hale, and Charniak (1998) used a statistical model for resolving pronouns, whereas we used a decision tree learning algorithm and resolved general noun phrases, not just pronouns. Similarly, Kehler (1997) used maximum entropy modeling to assign a probability distribution to alternative sets of coreference relationships among noun phrase entity templates, whereas we used decision tree learning.

The work of Aone and Bennett (1995), McCarthy and Lehnert (1995), Fisher et al. (1995), and McCarthy (1996) employed decision tree learning. The RESOLVE system is presented in McCarthy and Lehnert (1995), Fisher et al. (1995), and McCarthy (1996). McCarthy and Lehnert (1995) describe how RESOLVE was tested on the MUC-5 English Joint Ventures (EJV) corpus. It used a total of 8 features, 3 of which were specific to the EJV domain. For example, the feature JV-CHILD-i determined whether i referred to a joint venture formed as the result of a tie-up. McCarthy (1996) describes how the original RESOLVE for MUC-5 EJV was improved to include more features, 8 of which were domain specific, and 30 of which were domain independent. Fisher et al. (1995) adapted RESOLVE to work in MUC-6. The features used were slightly changed for this domain. Of the original 30 domain-independent features, 27 were used. The 8 domain-specific features were completely changed for the MUC-6 task. For example, JV-CHILD-i was changed to CHILD-i to decide whether i is a “unit” or a “subsidiary” of a certain parent company. In contrast to RESOLVE, our system makes use of a smaller set of 12 features and, as in Aone and Bennett’s (1995) system, the features used are generic and applicable across domains. This makes our coreference engine a domain-independent module.

Although Aone and Bennett’s (1995) system also made use of decision tree learning for coreference resolution, they dealt with Japanese texts, and their evaluation focused only on noun phrases denoting organizations, whereas our evaluation, which dealt with English texts, encompassed noun phrases of all types, not just those denoting organizations. In addition, Aone and Bennett evaluated their system on noun phrases that had been correctly identified, whereas we evaluated our coreference resolution engine as part of a total system that first has to identify all the candidate noun phrases and has to deal with the inevitable noisy data when mistakes occur in noun phrase identification and semantic class determination.

The contribution of our work lies in showing that a learning approach, when evaluated on common coreference data sets, is able to achieve accuracy competitive with that of state-of-the-art systems using nonlearning approaches. It is also the first machine learning-based system to offer performance comparable to that of nonlearning approaches.

Finally, the work of Cardie and Wagstaff (1999) also falls under the machine learning approach. However, they used unsupervised learning and their method did not require any annotated training data. Their clustering method achieved a balanced F-measure of only 53.6% on MUC-6 test data. This is to be expected: supervised learning in general outperforms unsupervised learning since a supervised learning algorithm has access to a richer set of annotated data to learn from. Since our supervised learning approach requires only a modest number of annotated training documents to achieve good performance (as can be seen from the learning curves), we argue that the better accuracy achieved more than justifies the annotation effort incurred.
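For readers unfamiliar with the metric, a balanced F-measure is conventionally the harmonic mean of precision and recall, F = 2PR / (P + R). The small helper below illustrates that standard definition; the function name is hypothetical and the numbers in the usage line are arbitrary, not results from any of the cited systems.

```python
def balanced_f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (returns 0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Arbitrary illustrative values, not taken from the paper.
score = balanced_f_measure(0.6, 0.4)
```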

7. Conclusion

In this paper, we presented a learning approach to coreference resolution of noun phrases in unrestricted text. The approach learns from a small, annotated corpus and the task includes resolving not just pronouns but general noun phrases. We evaluated our approach on common data sets, namely, the MUC-6 and MUC-7 coreference corpora. We obtained encouraging results, indicating that on the general noun phrase coreference task, the learning approach achieves accuracy comparable to that of nonlearning approaches.


Figures

References

- Aone, Chinatsu and Scott William Bennett. (1995). Evaluating Automated and Manual Acquisition of Anaphora Resolution Strategies. In: Proceedings of the 33rd Annual Meeting of the Association for Computational Linguistics, 122–129.

- Baldwin, Breck. (1997). CogNIAC: High precision coreference with limited knowledge and linguistic resources. In: Proceedings of the ACL Workshop on Operational Factors in Practical, Robust Anaphora Resolution for Unrestricted Texts, pages 38–45.

- Bikel, Daniel M., Richard Schwartz, and Ralph M. Weischedel. (1999). An algorithm that learns what’s in a name. Machine Learning, 34(1–3):211–231, February.

- Cardie, Claire and Kiri Wagstaff. (1999). Noun phrase coreference as clustering. In: Proceedings of the 1999 Joint SIGDAT Conference on Empirical Methods in Natural Language Processing and Very Large Corpora, pages 82–89.

- Chinchor, Nancy A. (1998). Overview of MUC-7/MET-2. In: Proceedings of the Seventh Message Understanding Conference (MUC-7). http://www.itl.nist.gov/iad/894.02/related_projects/muc/proceedings/muc_7_toc.html.

- Church, Kenneth W. (1988). A stochastic parts program and noun phrase parser for unrestricted text. In: Proceedings of the Second Conference on Applied Natural Language Processing, pages 136–143.

- Dietterich, Thomas G. (1998). Approximate statistical tests for comparing supervised classification learning algorithms. Neural Computation, 10(7):1895–1924, October.

- Fisher, David, Stephen Soderland, Joseph McCarthy, Fangfang Feng, and Wendy Lehnert. (1995). Description of the UMass system as used for MUC-6. In: Proceedings of the Sixth Message Understanding Conference (MUC-6), pages 127–140.

- (Ge et al., 1998) ⇒ Niyu Ge, John Hale, and Eugene Charniak. (1998). “A Statistical Approach to Anaphora Resolution.” In: Proceedings of the Sixth Workshop on Very Large Corpora.

- Kameyama, Megumi. (1997). Recognizing referential links: An information extraction perspective. In: Proceedings of the ACL Workshop on Operational Factors in Practical, Robust Anaphora Resolution for Unrestricted Texts, pages 46–53.

- Kehler, Andrew. (1997). Probabilistic coreference in information extraction. In: Proceedings of the Second Conference on Empirical Methods in Natural Language Processing, pages 163–173.

- Lappin, Shalom and Herbert J. Leass. (1994). An algorithm for pronominal anaphora resolution. Computational Linguistics, 20(4):535–561, December.

- McCarthy, Joseph F. (1996). A Trainable Approach to Coreference Resolution for Information Extraction. Ph.D. thesis, University of Massachusetts Amherst, Department of Computer Science, September.

- McCarthy, Joseph F. and Wendy Lehnert. (1995). Using decision trees for coreference resolution. In: Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence, pages 1050–1055.

- McEnery, A., I. Tanaka, and S. Botley. (1997). Corpus annotation and reference resolution. In: Proceedings of the ACL Workshop on Operational Factors in Practical, Robust Anaphora Resolution for Unrestricted Texts, pages 67–74.

- Miller, George A. (1990). WordNet: An on-line lexical database. International Journal of Lexicography, 3(4):235–312.

- Mitkov, Ruslan. (1997). Factors in anaphora resolution: They are not the only things that matter. A case study based on two different approaches. In: Proceedings of the ACL Workshop on Operational Factors in Practical, Robust Anaphora Resolution for Unrestricted Texts, pages 14–21.

- MUC-6. (1995). Coreference task definition (v2.3, 8 Sep 95). In: Proceedings of the Sixth Message Understanding Conference (MUC-6), pages 335–344.

- MUC-7. (1997). Coreference task definition (v3.0, 13 Jul 97). In: Proceedings of the Seventh Message Understanding Conference (MUC-7).

- Quinlan, John Ross. (1993). C4.5: Programs for Machine Learning. Morgan Kaufmann, San Francisco, CA.

- Sundheim, Beth M. (1995). Overview of results of the MUC-6 evaluation. In: Proceedings of the Sixth Message Understanding Conference (MUC-6), pages 13–31.


| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2001 AMachLearnApprToCorefResOfNounPhrases | Hwee Tou Ng, Wee Meng Soon, Daniel Chung Yong Lim | | | A Machine Learning Approach to Coreference Resolution of Noun Phrases | | Computational Linguistics (CL) Research Area | http://aclweb.org/anthology/J/J01/J01-4004.pdf | 10.1162/089120101753342653 | | 2001 |