Deep Contextual Word Representation System

A Deep Contextual Word Representation System is a Deep Learning System and a Word Embedding System that can produce contextual word vectors.

- Example(s):

- Counter-Example(s):

- See: NLP System, Subword Embedding System, OOV Word, Deep Bidirectional Language Model, Bidirectional LSTM, CRF Training Task, Bidirectional RNN, Word Embedding, 1 Billion Word Benchmark.

References

2021

- (Allen Inst. for AI, 2021) ⇒ https://allennlp.org/elmo Retrieved: 2021-05-08.

- QUOTE: ELMo is a deep contextualized word representation that models both (1) complex characteristics of word use (e.g., syntax and semantics), and (2) how these uses vary across linguistic contexts (i.e., to model polysemy). These word vectors are learned functions of the internal states of a deep bidirectional language model (biLM), which is pre-trained on a large text corpus. They can be easily added to existing models and significantly improve the state of the art across a broad range of challenging NLP problems, including question answering, textual entailment and sentiment analysis.

(...)

ELMo representations are:

- Contextual: The representation for each word depends on the entire context in which it is used.

- Deep: The word representations combine all layers of a deep pre-trained neural network.

- Character based: ELMo representations are purely character based, allowing the network to use morphological clues to form robust representations for out-of-vocabulary tokens unseen in training.
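The "Deep" property above (combining all layers of the pre-trained network) can be illustrated with a small sketch. It assumes the weighting scheme described in the ELMo paper: one learned score per layer, normalized with a softmax, plus a scalar scale. The function names `softmax` and `mix_layers`, the parameter `gamma`, and the toy vectors are hypothetical and chosen for illustration; this is not the AllenNLP implementation.

```python
import math


def softmax(scores):
    """Turn arbitrary per-layer scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mix_layers(layer_vectors, layer_scores, gamma=1.0):
    """Collapse the per-layer vectors of ONE token into a single vector.

    layer_vectors: one vector per biLM layer (all of the same length).
    layer_scores:  one score per layer (learned by the downstream task
                   in the real model; fixed by hand in this sketch).
    gamma:         scalar scale applied to the mixed vector (assumed name).
    """
    if len(layer_vectors) != len(layer_scores):
        raise ValueError("need exactly one score per layer")
    weights = softmax(layer_scores)
    mixed = [0.0] * len(layer_vectors[0])
    for weight, vector in zip(weights, layer_vectors):
        for i, value in enumerate(vector):
            mixed[i] += weight * value
    return [gamma * value for value in mixed]


# Toy input: three layers, two dimensions, equal scores (so equal weights).
layers = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
vector = mix_layers(layers, [0.0, 0.0, 0.0])
```

Because the layer vectors themselves depend on the whole input sentence, the mixed vector for the same word differs from context to context; the scores only decide how much each layer contributes for a given downstream task.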

2019

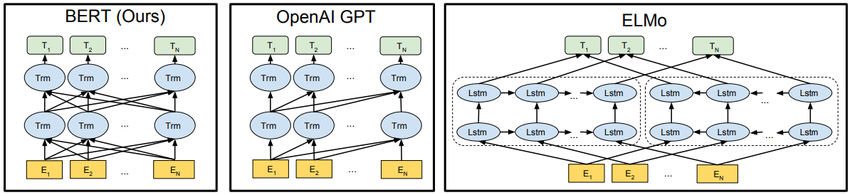

- (Devlin et al., 2019) ⇒ Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. (2019). “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2019), Volume 1 (Long and Short Papers). DOI:10.18653/v1/N19-1423. arXiv:1810.04805

- QUOTE: We introduce a new language representation model called BERT, which stands for Bidirectional Encoder Representations from Transformers. Unlike recent language representation models, BERT is designed to pre-train deep bidirectional representations by jointly conditioning on both left and right context in all layers (...)
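The phrase "jointly conditioning on both left and right context" can be pictured as a difference in which positions each token is allowed to see. The following is a minimal sketch of that visibility pattern only, not BERT's actual implementation; the function name `attention_mask` and its parameters are hypothetical.

```python
def attention_mask(seq_len, bidirectional):
    """Return a seq_len x seq_len grid of booleans.

    mask[i][j] is True when position i may use information from position j.
    A left-to-right model only sees positions j <= i; a bidirectional
    encoder sees every position.
    """
    return [
        [bidirectional or j <= i for j in range(seq_len)]
        for i in range(seq_len)
    ]


bidirectional_mask = attention_mask(3, bidirectional=True)
left_to_right_mask = attention_mask(3, bidirectional=False)
```

In the bidirectional case every entry is True, so each token's representation can draw on the full sentence; in the left-to-right case the grid is lower triangular.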
