2016 TheGoldilocksPrincipleReadingCh

- (Hill et al., 2016) ⇒ Felix Hill, Antoine Bordes, Sumit Chopra, and Jason Weston. (2016). “The Goldilocks Principle: Reading Children's Books with Explicit Memory Representations.” In: Proceedings of the 4th International Conference on Learning Representations (ICLR 2016) Conference Track.

Subject Headings: Children's Book Test (CBT) Dataset; Reading Comprehension Task.

Notes

Cited By

- Google Scholar: ~ 454 Citations, Retrieved: 2020-12-13.

Quotes

Abstract

We introduce a new test of how well language models capture meaning in children's books. Unlike standard language modelling benchmarks, it distinguishes the task of predicting syntactic function words from that of predicting lower-frequency words, which carry greater semantic content. We compare a range of state-of-the-art models, each with a different way of encoding what has been previously read. We show that models which store explicit representations of long-term contexts outperform state-of-the-art neural language models at predicting semantic content words, although this advantage is not observed for syntactic function words. Interestingly, we find that the amount of text encoded in a single memory representation is highly influential to the performance: there is a sweet-spot, not too big and not too small, between single words and full sentences that allows the most meaningful information in a text to be effectively retained and recalled. Further, the attention over such window-based memories can be trained effectively through self-supervision. We then assess the generality of this principle by applying it to the CNN QA benchmark, which involves identifying named entities in paraphrased summaries of news articles, and achieve state-of-the-art performance.

1. Introduction

2. The Children's Book Test

The experiments in this paper are based on a new resource, the Children's Book Test, designed to measure directly how well language models can exploit wider linguistic context. The CBT is built from books that are freely available thanks to Project Gutenberg [1]. Using children's books guarantees a clear narrative structure, which can make the role of context more salient. After allocating books to either training, validation or test sets, we formed example 'questions' (denoted $x$) from chapters in the book by enumerating 21 consecutive sentences.

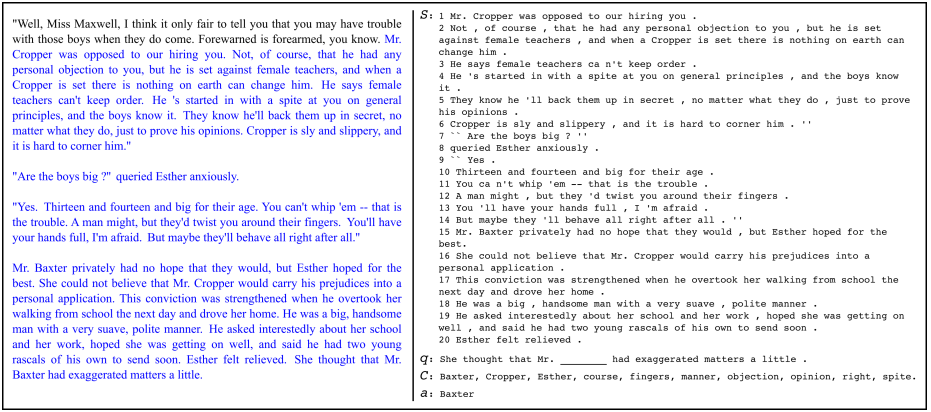

In each question, the first 20 sentences form the context (denoted $S$), and a word (denoted $a$) is removed from the 21st sentence, which becomes the query (denoted $q$). Models must identify the answer word $a$ among a selection of 10 candidate answers (denoted $C$) appearing in the context sentences and the query. Thus, for a question-answer pair $(x, a)$: $x = (q, S, C)$; $S$ is an ordered list of sentences; $q$ is a sentence (an ordered list $q = q_1, \cdots, q_l$ of words) containing a missing word symbol; $C$ is a bag of unique words such that $a \in C$, its cardinality $\vert C\vert$ is 10 and every candidate word $w \in C$ is such that $w \in q \cup S$. An example question is given in Figure 1.
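The construction described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: the names `CBTQuestion`, `make_question`, and `PLACEHOLDER` are hypothetical, and distractor candidates are drawn uniformly at random from $q \cup S$ because the excerpt does not say how candidates are selected.

```python
import random
from dataclasses import dataclass
from typing import List

# Hypothetical missing-word symbol; the excerpt only says that q contains one.
PLACEHOLDER = "XXXXX"


@dataclass
class CBTQuestion:
    context: List[List[str]]  # S: ordered list of 20 tokenized sentences
    query: List[str]          # q: the 21st sentence with one word replaced
    candidates: List[str]     # C: 10 unique words, one of which is the answer
    answer: str               # a: the removed word


def make_question(sentences, answer_index, rng, n_context=20, n_candidates=10):
    """Build one question x = (q, S, C) with answer a from 21 tokenized sentences."""
    if len(sentences) != n_context + 1:
        raise ValueError("expected exactly n_context + 1 sentences")
    context = sentences[:n_context]
    last = sentences[n_context]
    answer = last[answer_index]
    query = last[:answer_index] + [PLACEHOLDER] + last[answer_index + 1:]

    # Distractors come from the words of q and S, excluding the answer itself.
    # Uniform sampling is an assumption made only for this sketch.
    vocab = {w for s in context for w in s} | set(query)
    pool = sorted(vocab - {answer, PLACEHOLDER})
    if len(pool) < n_candidates - 1:
        raise ValueError("not enough distinct words to form the candidate set")
    candidates = rng.sample(pool, n_candidates - 1) + [answer]
    rng.shuffle(candidates)
    return CBTQuestion(context, query, candidates, answer)
```

Passing a seeded `random.Random` instance as `rng` keeps the candidate sets reproducible across runs.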


(...)

3. Studying Memory Representation With Memory Networks

4. Baseline And Comparison Models

5. Results

6. Conclusion

We have presented the Children's Book Test, a new semantic language modelling benchmark. The CBT measures how well models can use both local and wider contextual information to make predictions about different types of words in children's stories. By separating the prediction of syntactic function words from more semantically informative terms, the CBT provides a robust proxy for how much language models can impact applications requiring a focus on semantic coherence.

We tested a wide range of models on the CBT, each with different ways of representing and retaining previously seen content. This enabled us to draw novel insights into the optimal strategies for representing and accessing semantic information in memory. One consistent finding was that memories that encode sub-sentential chunks (windows) of informative text seem to be most useful to neural nets when interpreting and modelling language. However, our results indicate that the most useful text chunk size depends on the modeling task (e.g. semantic content vs. syntactic function words). We showed that Memory Networks that adhere to this principle can be efficiently trained using a simple self-supervision to surpass all other methods for predicting named entities on both the CBT and the CNN QA benchmark, an independent test of machine reading.
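To make the notion of sub-sentential window memories concrete, here is a small Python sketch. It assumes that each memory is a fixed-width window of words centred on an occurrence of a candidate word in the context; the function name `window_memories` and the default width are hypothetical choices for illustration, not values taken from the excerpt.

```python
from typing import List, Sequence, Tuple


def window_memories(
    context_words: Sequence[str],
    candidates: Sequence[str],
    width: int = 5,
) -> List[Tuple[str, List[str]]]:
    """Return (centre word, window) pairs for every candidate occurrence.

    Windows are truncated at the start and end of the context rather than
    padded, which is a simplification made for this sketch.
    """
    half = width // 2
    candidate_set = set(candidates)
    memories = []
    for i, word in enumerate(context_words):
        if word in candidate_set:
            start = max(0, i - half)
            end = min(len(context_words), i + half + 1)
            memories.append((word, list(context_words[start:end])))
    return memories
```

Varying `width` moves the memory representation between single words (`width=1`) and spans approaching full sentences, which is the axis along which the "sweet spot" in the abstract is described.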

Acknowledgments

The authors would like to thank Harsha Pentapelli and Manohar Paluri for helping to collect the human annotations and Gabriel Synnaeve for processing the QA CNN data.

Footnotes

A. Experimental Details

B. Results On CBT Validation Set

C. Ablation Study On CNN QA

D. Effects Of Anonymising Entities In CBT

E. Candidates And Window Memories In CBT

References

BibTeX

@inproceedings{2016_TheGoldilocksPrincipleReadingCh,
  author = {Felix Hill and Antoine Bordes and Sumit Chopra and Jason Weston},
  editor = {Yoshua Bengio and Yann LeCun},
  title = {The Goldilocks Principle: Reading Children's Books with Explicit Memory Representations},
  booktitle = {Proceedings of the 4th International Conference on Learning Representations (ICLR 2016) Conference Track},
  address = {San Juan, Puerto Rico},
  date = {May 2-4, 2016},
  year = {2016},
  url = {http://arxiv.org/abs/1511.02301}
}

| | Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2016 TheGoldilocksPrincipleReadingCh | Jason Weston, Antoine Bordes, Sumit Chopra, Felix Hill | | | The Goldilocks Principle: Reading Children's Books with Explicit Memory Representations | | | | | | 2016 |