2015 ShowAttendandTellNeuralImageCap

- (Xu et al., 2015) ⇒ Kelvin Xu, Jimmy Lei Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard S. Zemel, and Yoshua Bengio. (2015). “Show, Attend and Tell: Neural Image Caption Generation with Visual Attention.” In: Proceedings of the 32nd International Conference on Machine Learning (ICML 2015), Volume 37.

Subject Headings: Automatic Image Description Generation, Neural Machine Translation, Attention Mechanism, Image Captions Generation via Neural Machine Translation and Attention Mechanism, Natural Language Generation.

Notes

Pre-print(s) and Other Link(s)

- PMLR: http://proceedings.mlr.press/v37/xuc15.html

- ArXiv: https://arxiv.org/abs/1502.03044

- DBLP: https://dblp.org/rec/html/conf/icml/XuBKCCSZB15

- ACM DL: https://dl.acm.org/doi/10.5555/3045118.3045336

Cited By

- Google Scholar: ~ 4,989 Citations.

- Semantic Scholar: ~ 4,204 Citations.

- MS Academic: ~ 5,003 Citations.

- ACM DL: ~ 363 Citations.

2015a

- (Luong et al., 2015) ⇒ Minh-Thang Luong, Hieu Pham, and Christopher D. Manning. (2015). “Effective Approaches to Attention-based Neural Machine Translation.” arXiv preprint arXiv:1508.04025

2015b

- (Sukhbaatar et al., 2015) ⇒ Sainbayar Sukhbaatar, Jason Weston, and Rob Fergus. (2015). “End-to-end Memory Networks.” In: Advances in Neural Information Processing Systems, pp. 2440-2448.

- QUOTE: … A similar attention model is also used in Xu et al. (24) for generating image captions. Our “memory” is analogous to their attention mechanism, although [2] is only over a single sentence rather than many, as in our case. Furthermore, our model makes several hops on the memory before making an output; we will see below that this is important for good performance. …

Quotes

Abstract

Inspired by recent work in machine translation and object detection, we introduce an attention based model that automatically learns to describe the content of images. We describe how we can train this model in a deterministic manner using standard backpropagation techniques and stochastically by maximizing a variational lower bound. We also show through visualization how the model is able to automatically learn to fix its gaze on salient objects while generating the corresponding words in the output sequence. We validate the use of attention with state-of-the-art performance on three benchmark datasets: Flickr8k, Flickr30k and MS COCO.

1. Introduction

Automatically generating captions for an image is a task close to the heart of scene understanding — one of the primary goals of computer vision. Not only must caption generation models be able to solve the computer vision challenges of determining what objects are in an image, but they must also be powerful enough to capture and express their relationships in natural language. For this reason, caption generation has long been seen as a difficult problem. It amounts to mimicking the remarkable human ability to compress huge amounts of salient visual information into descriptive language and is thus an important challenge for machine learning and AI research.

Yet despite the difficult nature of this task, there has been a recent surge of research interest in attacking the image caption generation problem. Aided by advances in training deep neural networks (Krizhevsky et al., 2012) and the availability of large classification datasets (Russakovsky et al., 2014), recent work has significantly improved the quality of caption generation using a combination of convolutional neural networks (convnets) to obtain vectorial representation of images and recurrent neural networks to decode those representations into natural language sentences (see Sec. 2).

One of the most curious facets of the human visual system is the presence of attention (Rensink, 2000; Corbetta & Shulman, 2002). Rather than compress an entire image into a static representation, attention allows for salient features to dynamically come to the forefront as needed. This is especially important when there is a lot of clutter in an image. Using representations (such as those from the very top layer of a convnet) that distill information in an image down to the most salient objects is one effective solution that has been widely adopted in previous work. Unfortunately, this has one potential drawback of losing information which could be useful for richer, more descriptive captions. Using lower-level representation can help preserve this information. However, working with these features necessitates a powerful mechanism to steer the model to information important to the task at hand, and we show how learning to attend at different locations in order to generate a caption can achieve that. We present two variants: a “hard” stochastic attention mechanism and a “soft” deterministic attention mechanism. We also show how one advantage of including attention is the insight gained by approximately visualizing what the model “sees”.

Encouraged by recent advances in caption generation and inspired by recent successes in employing attention in machine translation (Bahdanau et al., 2014) and object recognition (Ba et al., 2014; Mnih et al., 2014), we investigate models that can attend to salient parts of an image while generating its caption. The contributions of this paper are the following:

- We introduce two attention-based image caption generators under a common framework (Sec. 3.1): 1) a “soft” deterministic attention mechanism trainable by standard back-propagation methods and 2) a “hard” stochastic attention mechanism trainable by maximizing an approximate variational lower bound or equivalently by REINFORCE (Williams, 1992).

- We show how we can gain insight and interpret the results of this framework by visualizing “where” and “what” the attention focused on (see Sec. 5.4).

- Finally, we quantitatively validate the usefulness of attention in caption generation with state-of-the-art performance (Sec. 5.3) on three benchmark datasets: Flickr8k (Hodosh et al., 2013), Flickr30k (Young et al., 2014) and the MS COCO dataset (Lin et al., 2014).

2. Related Work

In this section we provide relevant background on previous work on image caption generation and attention. Recently, several methods have been proposed for generating image descriptions. Many of these methods are based on recurrent neural networks and inspired by the successful use of sequence-to-sequence training with neural networks for machine translation (Cho et al., 2014; Bahdanau et al., 2014; Sutskever et al., 2014; Kalchbrenner & Blunsom, 2013). The encoder-decoder framework (Cho et al., 2014) of machine translation is well suited, because it is analogous to “translating” an image to a sentence.

The first approach to using neural networks for caption generation was proposed by Kiros et al. (2014a) who used a multimodal log-bilinear model that was biased by features from the image. This work was later followed by Kiros et al. (2014b) whose method was designed to explicitly allow for a natural way of doing both ranking and generation. Mao et al. (2014) used a similar approach to generation but replaced a feedforward neural language model with a recurrent one. Both Vinyals et al. (2014) and Donahue et al. (2014) used recurrent neural networks (RNN) based on long short-term memory (LSTM) units (Hochreiter & Schmidhuber, 1997) for their models. Unlike Kiros et al. (2014a) and Mao et al. (2014) whose models see the image at each time step of the output word sequence, Vinyals et al. (2014) only showed the image to the RNN at the beginning. Along with images, Donahue et al. (2014) and Yao et al. (2015) also applied LSTMs to videos, allowing their model to generate video descriptions.

Most of these works represent images as a single feature vector from the top layer of a pre-trained convolutional network. Karpathy & Li (2014) instead proposed to learn a joint embedding space for ranking and generation whose model learns to score sentence and image similarity as a function of R-CNN object detections with outputs of a bidirectional RNN. Fang et al. (2014) proposed a three-step pipeline for generation by incorporating object detections. Their models first learn detectors for several visual concepts based on a multi-instance learning framework. A language model trained on captions was then applied to the detector outputs, followed by rescoring from a joint image-text embedding space. Unlike these models, our proposed attention framework does not explicitly use object detectors but instead learns latent alignments from scratch. This allows our model to go beyond “objectness” and learn to attend to abstract concepts.

Prior to the use of neural networks for generating captions, two main approaches were dominant. The first involved generating caption templates which were filled in based on the results of object detections and attribute discovery (Kulkarni et al. (2013), Li et al. (2011), Yang et al. (2011), Mitchell et al. (2012), Elliott & Keller (2013)). The second approach was based on first retrieving similar captioned images from a large database then modifying these retrieved captions to fit the query (Kuznetsova et al., 2012; 2014). These approaches typically involved an intermediate “generalization” step to remove the specifics of a caption that are only relevant to the retrieved image, such as the name of a city. Both of these approaches have since fallen out of favour to the now dominant neural network methods.

There has been a long line of previous work incorporating the idea of attention into neural networks. Some that share the same spirit as our work include Larochelle & Hinton (2010); Denil et al. (2012); Tang et al. (2014) and more recently Gregor et al. (2015). In particular however, our work directly extends the work of Bahdanau et al. (2014); Mnih et al. (2014); Ba et al. (2014); Graves (2013).

3. Image Caption Generation with Attention Mechanism

3.1. Model Details

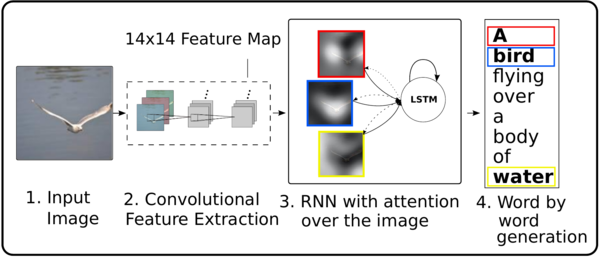

In this section, we describe the two variants of our attention-based model by first describing their common framework. The key difference is the definition of the $\phi$ function which we describe in detail in Sec. 4. See Fig. 1 for the graphical illustration of the proposed model.

We denote vectors with bolded font and matrices with capital letters. In our description below, we suppress bias terms for readability.

3.1.1. Encoder: Convolutional Features

Our model takes a single raw image and generates a caption $y$ encoded as a sequence of 1-of-K encoded words.

[math]\displaystyle{ y =\{\mathbf{y}_1,\cdots, \mathbf{y}_C\}, \mathbf{y}_i \in \R^K }[/math]

where $K$ is the size of the vocabulary and $C$ is the length of the caption.

We use a convolutional neural network in order to extract a set of feature vectors which we refer to as annotation vectors. The extractor produces $L$ vectors, each of which is a D-dimensional representation corresponding to a part of the image.

[math]\displaystyle{ a= \{\mathbf{a}_1,\cdots,\mathbf{a}_L\}, \mathbf{a}_i \in \R^D }[/math]

In order to obtain a correspondence between the feature vectors and portions of the 2-D image, we extract features from a lower convolutional layer unlike previous work which instead used a fully connected layer. This allows the decoder to selectively focus on certain parts of an image by weighting a subset of all the feature vectors.

3.1.2. Decoder: Long Short-Term Memory Network

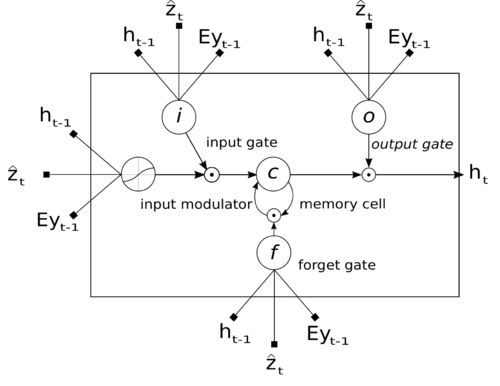

We use a long short-term memory (LSTM) network (Hochreiter & Schmidhuber, 1997) that produces a caption by generating one word at every time step conditioned on a context vector, the previous hidden state and the previously generated words. Our implementation of LSTMs, shown in Fig. 2, closely follows the one used in Zaremba et al. (2014):

[math]\displaystyle{ \begin{align} \mathbf{i}_t & = \sigma \left(W_i E \mathbf{y}_{t-1} + U_i \mathbf{h}_{t-1} + Z_i\mathbf{\hat{z}}_t + \mathbf{b}_i\right),\\ \mathbf{f}_t & = \sigma \left(W_f E \mathbf{y}_{t-1} + U_f \mathbf{h}_{t-1} + Z_f\mathbf{\hat{z}}_t + \mathbf{b}_f\right),\\ \mathbf{c}_t & =\mathbf{f}_t\mathbf{c}_{t-1} + \mathbf{i}_t \tanh\left(W_c E \mathbf{y}_{t-1} + U_c \mathbf{h}_{t-1} + Z_c\mathbf{\hat{z}}_t + \mathbf{b}_c\right),\\ \mathbf{o}_t & = \sigma \left(W_o E \mathbf{y}_{t-1} + U_o \mathbf{h}_{t-1} + Z_o\mathbf{\hat{z}}_t + \mathbf{b}_o\right),\\ \mathbf{h}_t &= \mathbf{o}_t \tanh\left(\mathbf{c}_t\right). \end{align} }[/math]

Here, $\mathbf{i}_t$, $\mathbf{f}_t$, $\mathbf{c}_t$, $\mathbf{o}_t$, $\mathbf{h}_t$, are the input, forget, memory, output and hidden state of the LSTM, respectively. $W_{\bullet}$, $U_{\bullet}$, $Z_{\bullet}$, and $b_{\bullet}$, are learned weight matrices and biases. $\mathbf{E} \in \R^{m\times K}$ is an embedding matrix. Let $m$ and $n$ denote the embedding and LSTM dimensionality respectively and $\sigma$ be the logistic sigmoid activation.
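The recurrence above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the helper names (`matvec`, `lstm_step`, `zero_params`) are hypothetical, products between gates and states are taken element-wise, and the candidate memory is assumed to pass through a tanh as in a standard LSTM.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lstm_step(params, emb_prev, h_prev, c_prev, z_hat):
    """One decoder step.

    params[g] = (W, U, Z, b) for g in "i", "f", "c", "o".
    emb_prev stands for the embedded previous word E y_{t-1}.
    """
    def affine(g):
        W, U, Z, b = params[g]
        terms = zip(matvec(W, emb_prev), matvec(U, h_prev), matvec(Z, z_hat), b)
        return [sum(t) for t in terms]

    i = [sigmoid(x) for x in affine("i")]      # input gate
    f = [sigmoid(x) for x in affine("f")]      # forget gate
    o = [sigmoid(x) for x in affine("o")]      # output gate
    g = [math.tanh(x) for x in affine("c")]    # candidate memory (assumed tanh)
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def zero_params(n, m, D):
    """All-zero toy parameters (hypothetical helper for the demo)."""
    def gate():
        return ([[0.0] * m for _ in range(n)],   # W: n x m
                [[0.0] * n for _ in range(n)],   # U: n x n
                [[0.0] * D for _ in range(n)],   # Z: n x D
                [0.0] * n)                       # b: n
    return {g: gate() for g in "ifco"}

# Toy call with n = 2 hidden units, m = 3 embedding dims, D = 4 feature dims.
h, c = lstm_step(zero_params(n=2, m=3, D=4),
                 [1.0, 0.0, 0.0], [0.0, 0.0], [1.0, -2.0], [0.5] * 4)
```

With all-zero parameters every gate equals 0.5 and the candidate is 0, so the new memory is simply half of the previous memory, which makes the sketch easy to check by hand.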

In simple terms, the context vector $\mathbf{\hat{z}}_t$ is a dynamic representation of the relevant part of the image input at time $t$. We define a mechanism $\phi$ that computes $\mathbf{\hat{z}}_t$, from the annotation vectors $\mathbf{a}_i,i = 1,\cdots,L$ corresponding to the features extracted at different image locations. For each location $i$, the mechanism generates a positive weight $\alpha_i$ which can be interpreted either as the probability that location $i$ is the right place to focus for producing the next word (stochastic attention mechanism), or as the relative importance to give to location $i$ in blending the $\mathbf{a}_i$’s together (deterministic attention mechanism). The weight $\alpha_i$ of each annotation vector $a_i$ is computed by an attention model $f_{att}$ for which we use a multilayer perceptron conditioned on the previous hidden state $h_{t-1}$. To emphasize, we note that the hidden state varies as the output RNN advances in its output sequence: “where” the network looks next depends on the sequence of words that has already been generated.

[math]\displaystyle{ e_{ti} = f_{att}\left(\mathbf{a}_i, \mathbf{h}_{t-1}\right) }[/math]

[math]\displaystyle{ \alpha_{ti} = \dfrac{ \exp\left(e_{ti}\right)}{\displaystyle \sum_{k=1}^L \exp\left(e_{tk}\right)} }[/math]

Once the weights (which sum to one) are computed, the context vector $\hat{z}_t$ is computed by

| [math]\displaystyle{ \mathbf{\hat{z}}_t=\phi\left(\{\mathbf{a}_i\},\{\alpha_i\}\right) }[/math] | (1) |

where $\phi$ is a function that returns a single vector given the set of annotation vectors and their corresponding weights. The details of the $\phi$ function are discussed in Sec. 4.
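As a rough illustration of how the weights $\alpha_{ti}$ might be produced, the sketch below scores each annotation vector with a small perceptron conditioned on the previous hidden state and normalizes the scores with a softmax. The one-hidden-layer form of `f_att` and the parameter names `W_a`, `W_h` and `w` are assumptions made for this example; the text only states that $f_{att}$ is a multilayer perceptron.

```python
import math

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def f_att(a_i, h_prev, W_a, W_h, w):
    """Hypothetical score: w . tanh(W_a a_i + W_h h_prev)."""
    hidden = [math.tanh(dot(row_a, a_i) + dot(row_h, h_prev))
              for row_a, row_h in zip(W_a, W_h)]
    return dot(w, hidden)

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(annotations, h_prev, W_a, W_h, w):
    """Return one positive weight per image location; the weights sum to one."""
    scores = [f_att(a_i, h_prev, W_a, W_h, w) for a_i in annotations]
    return softmax(scores)

# Toy setting: L = 3 locations, D = 2 feature dims, n = 3 hidden units.
annotations = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]]
h_prev = [0.1, -0.2, 0.3]
W_a = [[0.5, -0.5], [0.2, 0.1]]
W_h = [[0.1, 0.0, 0.2], [0.3, -0.1, 0.0]]
w = [1.0, -1.0]
alpha = attention_weights(annotations, h_prev, W_a, W_h, w)
```

Because `h_prev` enters every score, the same image yields different weights at different decoding steps, which is the dependence on previously generated words described above.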

The initial memory state and hidden state of the LSTM are predicted by an average of the annotation vectors fed through two separate MLPs ($f_{init,c}$ and $f_{init,h}$):

[math]\displaystyle{ \mathbf{c}_0 = f_{init,c}\left(\dfrac{1}{L}\sum_{i=1}^L\mathbf{a}_i\right) }[/math], [math]\displaystyle{ \mathbf{h}_0 = f_{init,h} \left(\dfrac{1}{L}\sum_{i=1}^L\mathbf{a}_i\right) }[/math]

In this work, we use a deep output layer (Pascanu et al., 2014) to compute the output word probability. Its inputs are cues from the image (the context vector), the previously generated word, and the decoder state ($\mathbf{h}_t$).

| [math]\displaystyle{ p\left(\mathbf{y}_t\vert\mathbf{a},\mathbf{y}^{t-1}_1\right) \propto \exp\left(\mathbf{L}_o\left(\mathbf{Ey}_{t-1} + \mathbf{L}_h\mathbf{h}_t + \mathbf{L}_z\mathbf{\hat{z}}_t\right)\right) }[/math] | (2) |

where $\mathbf{L}_o \in \R^{K\times m}$, $\mathbf{L}_h \in \R^{m\times n}$, $\mathbf{L}_z \in \R^{m\times D}$, and $\mathbf{E}$ are learned parameters initialized randomly.

4. Learning Stochastic "Hard" vs Deterministic "Soft" Attention

In this section we discuss two alternative mechanisms for the attention model $f_{att}$: stochastic attention and deterministic attention.

4.1. Stochastic “Hard” Attention

We represent the location variable $s_t$ as where the model decides to focus attention when generating the $t$-th word. $s_{t,i}$ is an indicator one-hot variable which is set to $1$ if the $i$-th location (out of $L$) is the one used to extract visual features. By treating the attention locations as intermediate latent variables, we can assign a multinoulli distribution parametrized by $\{\alpha_i\}$, and view $\mathbf{\hat{z}}_t$ as a random variable:

| [math]\displaystyle{ p\left(s_{t,i} = 1\vert s_{j\lt t},\mathbf{a}\right) = \alpha_{t,i} }[/math] | (3) |

| [math]\displaystyle{ \mathbf{\hat{z}}_t = \displaystyle \sum_is_{t,i} \mathbf{a}_i }[/math] | (4) |
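Equations (3) and (4) can be read as "sample one location, then copy its annotation vector". The sketch below illustrates this with the standard library only; the function names are hypothetical and the inverse-CDF sampler is just one simple way to draw from a categorical distribution.

```python
import random

def sample_location(alpha, rng):
    """Draw one index from the categorical (multinoulli) distribution alpha."""
    u = rng.random()
    cumulative = 0.0
    for i, p in enumerate(alpha):
        cumulative += p
        if u < cumulative:
            return i
    return len(alpha) - 1  # guard against floating-point round-off

def hard_context(annotations, alpha, rng):
    """Return the one-hot indicator s_t and the context vector of Eq. (4)."""
    chosen = sample_location(alpha, rng)
    s = [1 if j == chosen else 0 for j in range(len(alpha))]
    dims = len(annotations[0])
    z_hat = [sum(s_j * a_j[d] for s_j, a_j in zip(s, annotations))
             for d in range(dims)]
    return s, z_hat

annotations = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
rng = random.Random(0)
s, z_hat = hard_context(annotations, [0.2, 0.5, 0.3], rng)
```

Since exactly one entry of `s` is 1, the sum in Eq. (4) selects a single annotation vector rather than blending several.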

We define a new objective function $L_s$ that is a variational lower bound on the marginal log-likelihood $\log p\left(\mathbf{y} \vert\mathbf{a}\right)$ of observing the sequence of words $\mathbf{y}$ given image features $\mathbf{a}$. Similar to work in deep generative modeling (Kingma & Welling, 2014; Rezende et al., 2014), the learning algorithm for the parameters $W$ of the models can be derived by directly optimizing

| [math]\displaystyle{ \begin{align}\displaystyle L_s &= \sum_s p\left(s\vert\mathbf{a}\right)\log\;p\left(\mathbf{y}\vert s, \mathbf{a}\right) \\ &\leq \log \sum_s p\left(s\vert\mathbf{a}\right)p\left(\mathbf{y}\vert s, \mathbf{a}\right)\\ &= \log\;p\left(\mathbf{y}\vert\mathbf{a}\right), \end{align} }[/math] | (5) |

following its gradient

| [math]\displaystyle{ \displaystyle \dfrac{\partial L_s}{\partial W}= \sum_s p\left(s\vert\mathbf{a}\right)\Big[\dfrac{\partial \log\;p\left(\mathbf{y}\vert s,\mathbf{a}\right)}{\partial W}+\log\; p\left(\mathbf{y}\vert s, \mathbf{a}\right)\dfrac{\partial\log\;p\left(s\vert\mathbf{a}\right)}{\partial W}\Big] }[/math] | (6) |

We approximate this gradient of $L_s$ by a Monte Carlo method such that

| [math]\displaystyle{ \displaystyle \dfrac{\partial L_s}{\partial W}\approx \dfrac{1}{N}\sum^N_{n=1}\Big[\dfrac{\partial \log\;p\left(\mathbf{y}\vert\tilde{s}^n,\mathbf{a}\right)}{\partial W}+\log\; p\left(\mathbf{y}\vert\tilde{s}^n, \mathbf{a}\right)\dfrac{\partial\log\;p\left(\tilde{s}^n\vert\mathbf{a}\right)}{\partial W}\Big] }[/math] | (7) |

where $\tilde{s}^n= \left(s_1^n, s_2^n,\cdots\right)$ is a sequence of sampled attention locations. We sample the location $\tilde{s}^n_t$ from a multinoulli distribution defined by Eq. (3):

[math]\displaystyle{ \tilde{s}_t^n \sim \mathrm{Multinoulli}_L \left(\{\alpha_i^n\}\right) }[/math].

We reduce the variance of this estimator with the moving average baseline technique (Weaver & Tao, 2001). Upon seeing the $k$-th mini-batch, the moving average baseline is estimated as an accumulated sum of the previous log likelihoods with exponential decay:

[math]\displaystyle{ b_k = 0.9 \times b_{k-1} +0.1 \times \log p\left(\mathbf{y} \vert\tilde{s}_k, \mathbf{a}\right) }[/math]
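The baseline update is an exponential moving average, which can be sketched as follows. The function name and the `decay` parameter are illustrative; the value 0.9 is the one given in the formula above.

```python
def update_baseline(b_prev, log_likelihood, decay=0.9):
    """Exponential moving average of the log-likelihoods seen so far."""
    return decay * b_prev + (1.0 - decay) * log_likelihood

# Toy run: the baseline drifts toward the recent log-likelihood values.
baseline = 0.0
history = []
for log_lik in [-10.0, -10.0, -8.0]:
    baseline = update_baseline(baseline, log_lik)
    history.append(baseline)
```

Subtracting such a baseline from the log-likelihood leaves the expected gradient unchanged while reducing its variance.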

To further reduce the estimator variance, the gradient of the entropy $H[s]$ of the multinoulli distribution is added to the RHS of Eq. (7).

The final learning rule for the model is then

[math]\displaystyle{ \displaystyle \dfrac{\partial L_s}{\partial W}\approx \dfrac{1}{N}\sum^N_{n=1}\Big[\dfrac{\partial \log \;p\left(\mathbf{y}\vert\tilde{s}^n,\mathbf{a}\right)}{\partial W}+\lambda_r\left(\log\; p\left(\mathbf{y}\vert\tilde{s}^n, \mathbf{a}\right)-b\right)\dfrac{\partial\log\;p\left(\tilde{s}^n\vert\mathbf{a}\right)}{\partial W}+\lambda_e\dfrac{\partial H[\tilde{s}^n]}{\partial W}\Big] }[/math]

where $\lambda_r$ and $\lambda_e$ are two hyper-parameters set by cross-validation. As pointed out and used by Ba et al. (2014) and Mnih et al. (2014), this formulation is equivalent to the REINFORCE learning rule (Williams, 1992), where the reward for the attention choosing a sequence of actions is a real value proportional to the log likelihood of the target sentence under the sampled attention trajectory.
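To make the sampling-based estimator concrete, the toy sketch below considers a single attention step whose parameters are just the attention logits, with a fixed reward `rewards[i]` standing in for $\log p(\mathbf{y}\vert s=i,\mathbf{a})$. Under that simplification the first term of the gradient vanishes (the reward does not depend on the logits), and the score-function term with a constant baseline can be compared against the exact gradient. The setup, names and numbers are assumptions chosen for illustration, and the entropy term is omitted.

```python
import math
import random

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def exact_grad(logits, rewards):
    """Exact gradient of sum_i alpha_i * r_i with respect to the logits."""
    alpha = softmax(logits)
    expected = sum(a * r for a, r in zip(alpha, rewards))
    return [a * (r - expected) for a, r in zip(alpha, rewards)]

def reinforce_grad(logits, rewards, n_samples, baseline, rng):
    """Monte Carlo estimate: mean of (r(s) - b) * d log p(s) / d logits."""
    alpha = softmax(logits)
    L = len(alpha)
    grad = [0.0] * L
    for _ in range(n_samples):
        s = rng.choices(range(L), weights=alpha)[0]
        advantage = rewards[s] - baseline
        for j in range(L):
            # For a softmax, d log p(s) / d logit_j = 1[s == j] - alpha_j.
            grad[j] += advantage * ((1.0 if j == s else 0.0) - alpha[j])
    return [g / n_samples for g in grad]

logits = [0.0, 1.0, -1.0]
rewards = [-1.0, -2.0, -3.0]   # stand-ins for log-likelihoods
exact = exact_grad(logits, rewards)
estimate = reinforce_grad(logits, rewards, 20000, -2.0, random.Random(0))
```

The estimate stays unbiased for any constant baseline because the expected value of `1[s == j] - alpha_j` is zero; the baseline only changes the variance.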

In order to further improve the robustness of this learning rule, with probability 0.5 for a given image, we set the sampled attention location $\tilde{s}$ to its expected value $\alpha$ (equivalent to the deterministic attention in Sec. 4.2).

4.2. Deterministic "Soft" Attention

Learning stochastic attention requires sampling the attention location $s_t$ each time. Instead, we can take the expectation of the context vector $\mathbf{\hat{z}}_t$ directly,

| [math]\displaystyle{ \displaystyle \mathbb{E}_{p\left(s_t\vert a\right)}\big[\mathbf{\hat{z}}_t\big]=\sum_{i=1}^L\alpha_{t,i}\mathbf{a}_i }[/math] | (8) |

and formulate a deterministic attention model by computing a soft attention weighted annotation vector $\phi\left(\{\mathbf{a}_i\},\{\alpha_i\}\right)=\sum_{i=1}^L\alpha_i\mathbf{a}_i$ as proposed by Bahdanau et al. (2014). This corresponds to feeding in a soft $\alpha$ weighted context into the system. The whole model is smooth and differentiable under the deterministic attention, so learning end-to-end is trivial by using standard back-propagation.
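The soft context vector is a weighted average of the annotation vectors, which the following minimal sketch computes for a toy input (the function name and the numbers are illustrative only).

```python
def soft_context(annotations, alpha):
    """Weighted sum of annotation vectors: sum_i alpha_i * a_i."""
    dims = len(annotations[0])
    return [sum(w * a[d] for w, a in zip(alpha, annotations))
            for d in range(dims)]

annotations = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
alpha = [0.5, 0.25, 0.25]
z_hat = soft_context(annotations, alpha)
```

Every operation here is a sum or a product, so gradients flow from the context vector back to the weights without any sampling step.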

Learning the deterministic attention can also be understood as approximately optimizing the marginal likelihood in Eq. (5) under the attention location random variable $s_t$ from Sec. 4.1. The hidden activation of LSTM $\mathbf{h}_t$ is a linear projection of the stochastic context vector $\mathbf{\hat{z}}_t$ followed by tanh non-linearity. To the first-order Taylor approximation, the expected value $\mathbb{E}_{p\left(s_t\vert a\right)}\big[\mathbf{h}_t\big]$ is equivalent to computing $\mathbf{h}_t$ using a single forward computation with the expected context vector $\mathbb{E}_{p\left(s_t\vert a\right)}\big[\mathbf{\hat{z}}_t\big]$.

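Let $\mathbf{n}_t$ denote the argument of the exponential in Eq. (2), and let $\mathbf{n}_{t,i}$ denote $\mathbf{n}_t$ computed with $\mathbf{\hat{z}}_t$ set to $\mathbf{a}_i$, with $k$-th component $n_{t,k,i}$. Then, we can write the normalized weighted geometric mean (NWGM) of the softmax of the $k$-th word prediction as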

[math]\displaystyle{ \begin{align} NWGM\Big[p\left(y_t=k\vert\mathbf{a}\right)\Big] &= \dfrac{\displaystyle\prod_i\exp\left(n_{t,k,i}\right)^{p\left(s_{t,i}=1\vert a\right)}}{\displaystyle\sum_j\prod_i\exp\left(n_{t,j,i}\right)^{p\left(s_{t,i}=1\vert a\right)}} \\ &= \dfrac{\exp\left(\mathbb{E}_{p\left(s_t\vert a\right)}\Big[n_{t,k,i}\Big]\right)}{\displaystyle\sum_j\exp\left( \mathbb{E}_{p\left(s_t\vert a\right)}\Big[n_{t,j,i}\Big]\right)} \end{align} }[/math]

This implies that the NWGM of the word prediction can be well approximated by using the expected context vector $\mathbb{E} \big[\mathbf{\hat{z}}_t\big]$, instead of the sampled context vector $\mathbf{a}_i$.

Furthermore, from the result by Baldi & Sadowski (2014), the NWGM defined above, which can be computed by a single feedforward computation, approximates the expectation $\mathbb{E}\Big[p\left(y_t =k \vert \mathbf{a}\right)\Big]$ of the output over all possible attention locations induced by the random variable $s_t$. This suggests that the proposed deterministic attention model approximately maximizes the marginal likelihood over all possible attention locations.
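The identity behind the NWGM argument, namely that the normalized weighted geometric mean of per-location softmax outputs equals the softmax of the expected pre-softmax activations, can be checked numerically. The sketch below uses made-up activations for two locations and three words; all names and values are illustrative.

```python
import math

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def nwgm(activations, probs):
    """activations[i][k] plays the role of n_{t,k,i}; probs[i] is p(s_{t,i}=1 | a)."""
    n_words = len(activations[0])
    geo = [math.prod(math.exp(n_i[k]) ** p for n_i, p in zip(activations, probs))
           for k in range(n_words)]
    total = sum(geo)
    return [g / total for g in geo]

def softmax_of_expected(activations, probs):
    """Softmax applied to the expected activation E[n_{t,k,i}]."""
    n_words = len(activations[0])
    expected = [sum(p * n_i[k] for n_i, p in zip(activations, probs))
                for k in range(n_words)]
    return softmax(expected)

def expected_softmax(activations, probs):
    """True expectation of the softmax output over attention locations."""
    outputs = [softmax(n_i) for n_i in activations]
    n_words = len(activations[0])
    return [sum(p * out[k] for out, p in zip(outputs, probs))
            for k in range(n_words)]

activations = [[1.0, 0.0, -1.0], [0.5, 2.0, 0.0]]
probs = [0.3, 0.7]
lhs = nwgm(activations, probs)
rhs = softmax_of_expected(activations, probs)
reference = expected_softmax(activations, probs)
```

Here `lhs` and `rhs` agree up to rounding, while `reference` is the quantity that the NWGM only approximates.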

4.2.1. Doubly Stochastic Attention

In training the deterministic version of our model, we introduce a form of doubly stochastic regularization that encourages the model to pay equal attention to every part of the image. Whereas the attention at every point in time sums to $1$ by construction (i.e., $\sum_i\alpha_{ti}=1$), the attention $\sum_t\alpha_{ti}$ is not constrained in any way. This makes it possible for the decoder to ignore some parts of the input image. In order to alleviate this, we encourage $\sum_t\alpha_{ti} \approx \tau$ where $\tau \geq \frac{L}{D}$. In our experiments, we observed that this penalty quantitatively improves overall performance and that this qualitatively leads to more descriptive captions.

Additionally, the soft attention model predicts a gating scalar $\beta_t$ from the previous hidden state $\mathbf{h}_{t-1}$ at each time step $t$, such that $\phi\left(\{\mathbf{a}_i\},\{\alpha_i\}\right) = \beta_t\sum_i^L\alpha_{i}\mathbf{a}_i$, where $\beta_t = \sigma\left(f_\beta\left(\mathbf{h}_{t-1}\right)\right)$. This gating variable lets the decoder decide whether to put more emphasis on language modeling or on the context at each time step. Qualitatively, we observe that the gating variable is larger when the decoder describes an object in the image.

The soft attention model is trained end-to-end by minimizing the following penalized negative log-likelihood:

| [math]\displaystyle{ L_d=-\log\left(p\left(\mathbf{y}\vert \mathbf{a}\right)\right)+\lambda\displaystyle \sum_i^L\left(1-\displaystyle \sum_t^C\alpha_{ti}\right)^2 }[/math] | (9) |

where we simply fixed $\tau$ to $1$.
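The regularizer sums the attention that each location receives over all time steps and penalizes its squared distance from $\tau$. A minimal sketch, with hypothetical function and argument names and `alpha[t][i]` holding the weight on location $i$ at step $t$:

```python
def doubly_stochastic_penalty(alpha, lam=1.0, tau=1.0):
    """lam * sum_i (tau - sum_t alpha[t][i]) ** 2."""
    n_locations = len(alpha[0])
    per_location = [sum(step[i] for step in alpha) for i in range(n_locations)]
    return lam * sum((tau - total) ** 2 for total in per_location)

# Two decoding steps over two locations.
balanced = [[0.5, 0.5], [0.5, 0.5]]    # each location receives total weight 1
collapsed = [[1.0, 0.0], [1.0, 0.0]]   # the second location is never attended
penalty_balanced = doubly_stochastic_penalty(balanced)
penalty_collapsed = doubly_stochastic_penalty(collapsed)
```

The penalty is zero when every location accumulates total weight $\tau$ and grows when the decoder ignores a region or dwells on one.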

4.3. Training Procedure

Both variants of our attention model were trained with stochastic gradient descent using adaptive learning rates. For the Flickr8k dataset, we found that RMSProp (Tieleman & Hinton, 2012) worked best, while for the Flickr30k/MS COCO datasets we found the recently proposed Adam algorithm (Kingma & Ba, 2014) to be quite effective.

To create the annotations $\mathbf{a}_i$ used by our decoder, we used the Oxford VGGnet (Simonyan & Zisserman, 2014) pretrained on ImageNet without fine-tuning. In our experiments we use the 14×14×512 feature map of the fourth convolutional layer before max pooling. This means our decoder operates on the flattened 196 × 512 (i.e., $L \times D$) encoding. In principle however, any encoding function could be used. In addition, with enough data, the encoder could also be trained from scratch (or fine-tuned) with the rest of the model.
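The flattening step is simple bookkeeping: a 14×14 grid of 512-dimensional features becomes $L = 14 \times 14 = 196$ annotation vectors with $D = 512$. The sketch below uses nested lists and a row-major ordering; both the function name and the ordering are assumptions, since the text does not specify how locations are enumerated.

```python
def to_annotation_vectors(feature_map):
    """Flatten feature_map[row][col] -> D-dim vector into a list of L vectors."""
    return [vector for row in feature_map for vector in row]

# Placeholder feature map of zeros with the shape quoted above.
height, width, depth = 14, 14, 512
feature_map = [[[0.0] * depth for _ in range(width)] for _ in range(height)]
annotations = to_annotation_vectors(feature_map)
```

With this ordering, annotation index `i` corresponds to grid position `(i // 14, i % 14)`, which is what allows attention weights to be mapped back onto the image.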

As our implementation requires time proportional to the length of the longest sentence per update, we found training on a random group of captions to be computationally wasteful. To mitigate this problem, in preprocessing we build a dictionary mapping the length of a sentence to the corresponding subset of captions. Then, during training we randomly sample a length and retrieve a mini-batch of size 64 of that length. We found that this greatly improved convergence speed with no noticeable diminishment in performance. On our largest dataset (MS COCO), our soft attention model took less than 3 days to train on an NVIDIA Titan Black GPU.
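The length-bucketing trick can be sketched as a dictionary from caption length to caption indices, followed by sampling a length and then a mini-batch of that length. Whitespace tokenization, uniform sampling over lengths, and the function names are assumptions made for this example.

```python
import random
from collections import defaultdict

def build_length_index(captions):
    """Map caption length (in tokens) to the indices of captions of that length."""
    index = defaultdict(list)
    for idx, caption in enumerate(captions):
        index[len(caption.split())].append(idx)
    return dict(index)

def sample_minibatch(index, batch_size, rng):
    """Pick a length at random, then up to batch_size captions of that length."""
    length = rng.choice(sorted(index))
    pool = index[length]
    return length, rng.sample(pool, min(batch_size, len(pool)))

captions = ["a dog", "a cat", "a dog runs", "two birds fly", "hello"]
index = build_length_index(captions)
length, batch = sample_minibatch(index, 64, random.Random(0))
```

Because every caption in a batch has the same length, no decoding steps are spent on padding.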

In addition to dropout (Srivastava et al., 2014), the only other regularization strategy we used was early stopping on BLEU score. We observed a breakdown in correlation between the validation set log-likelihood and BLEU in the later stages of training during our experiments. Since BLEU is the most commonly reported metric, we used BLEU on our validation set for model selection.

In our experiments with soft attention, we used Whetlab[1] (Snoek et al., 2012; 2014) in our Flickr8k experiments. Some of the intuitions we gained from hyperparameter regions it explored were especially important in our Flickr30k and COCO experiments.

We make our code for these models publicly available to encourage future research in this area[2].

5. Experiments

We describe our experimental methodology and quantitative results which validate the effectiveness of our model for caption generation.

5.1. Data

We report results on the widely-used Flickr8k and Flickr30k datasets as well as the more recently introduced MS COCO dataset. Each image in the Flickr8k / 30k datasets has 5 reference captions. In preprocessing our COCO dataset, we maintained the same number of references between our datasets by discarding captions in excess of 5. We applied only basic tokenization to MS COCO so that it is consistent with the tokenization present in Flickr8k and Flickr30k. For all our experiments, we used a fixed vocabulary size of 10,000.

Results for our attention-based architecture are reported in Table 1. We report results with the frequently used BLEU metric[3] which is the standard in image caption generation research. We report BLEU[4] from 1 to 4 without a brevity penalty. There has been, however, criticism of BLEU, so we report another common metric METEOR (Denkowski & Lavie, 2014) and compare whenever possible.
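For readers unfamiliar with the metric, the sketch below computes a sentence-level BLEU-n without a brevity penalty: clipped n-gram precisions for n = 1..N combined by a uniform geometric mean. It is only an illustration; reported scores are normally computed at corpus level with an established evaluation script, and the function names here are hypothetical.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, references, n):
    """Clipped n-gram precision of a candidate against several references."""
    cand_counts = Counter(ngrams(candidate, n))
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

def bleu_no_brevity_penalty(candidate, references, max_n):
    """Geometric mean of the modified precisions for n = 1..max_n."""
    precisions = [modified_precision(candidate, references, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0
    return math.exp(sum(math.log(p) for p in precisions) / max_n)

references = ["the cat is on the mat".split(), "there is a cat on the mat".split()]
p1 = modified_precision("the the the the the the the".split(), references, 1)
perfect = bleu_no_brevity_penalty("a man rides a horse".split(),
                                  ["a man rides a horse".split()], 4)
```

Clipping is what prevents a degenerate caption that repeats a common word from scoring well on unigram precision.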

5.2. Evaluation Procedures

A few challenges exist for comparison, which we explain here. The first challenge is a difference in choice of convolutional feature extractor. For identical decoder architectures, using more recent architectures such as GoogLeNet (Szegedy et al., 2014) or Oxford VGG (Simonyan & Zisserman, 2014) can give a boost in performance over using the AlexNet (Krizhevsky et al., 2012). In our evaluation, we compare directly only with results which use the comparable GoogLeNet / Oxford VGG features, but for METEOR comparison we include some results that use AlexNet.

The second challenge is a single model versus ensemble comparison. While other methods have reported performance boosts by using ensembling, in our results we report a single model performance.

Finally, there is a challenge due to differences between dataset splits. In our reported results, we use the predefined splits of Flickr8k. However, one challenge for the Flickr30k and COCO datasets is the lack of standardized splits for which results are reported. As a result, we report the results with the publicly available splits[5] used in previous work (Karpathy & Li, 2014). We note, however, that the differences in splits do not make a substantial difference in overall performance.

5.3. Quantitative Analysis

In Table 1, we provide a summary of the experiment validating the quantitative effectiveness of attention. We obtain state-of-the-art performance on Flickr8k, Flickr30k and MS COCO. In addition, we note that in our experiments we are able to significantly improve the state-of-the-art METEOR performance on MS COCO. We speculate that this is connected to some of the regularization techniques we used (see Sec. 4.2.1) and our lower-level representation.

| Dataset | Model | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR |
|---|---|---|---|---|---|---|
| Flickr8k | Google NIC (Vinyals et al., 2014)†Σ | 63 | 41 | 27 | - | - |
| Flickr8k | Log Bilinear (Kiros et al., 2014a)° | 65.6 | 42.4 | 27.7 | 17.7 | 17.31 |
| Flickr8k | Soft-Attention | 67 | 44.8 | 29.9 | 19.5 | 18.93 |
| Flickr8k | Hard-Attention | 67 | 45.7 | 31.4 | 21.3 | 20.30 |
| Flickr30k | Google NIC†°Σ | 66.3 | 42.3 | 27.7 | 18.3 | - |
| Flickr30k | Log Bilinear | 60.0 | 38 | 25.4 | 17.1 | 16.88 |
| Flickr30k | Soft-Attention | 66.7 | 43.4 | 28.8 | 19.1 | 18.49 |
| Flickr30k | Hard-Attention | 66.9 | 43.9 | 29.6 | 19.9 | 18.46 |
| COCO | CMU/MS Research (Chen & Zitnick, 2014)ᵃ | - | - | - | - | 20.41 |
| COCO | MS Research (Fang et al., 2014)†ᵃ | - | - | - | - | 20.71 |
| COCO | BRNN (Karpathy & Li, 2014)° | 64.2 | 45.1 | 30.4 | 20.3 | - |
| COCO | Google NIC†°Σ | 66.6 | 46.1 | 32.9 | 24.6 | - |
| COCO | Log Bilinear° | 70.8 | 48.9 | 34.4 | 24.3 | 20.03 |
| COCO | Soft-Attention | 70.7 | 49.2 | 34.4 | 24.3 | 23.90 |
| COCO | Hard-Attention | 71.8 | 50.4 | 35.7 | 25.0 | 23.04 |

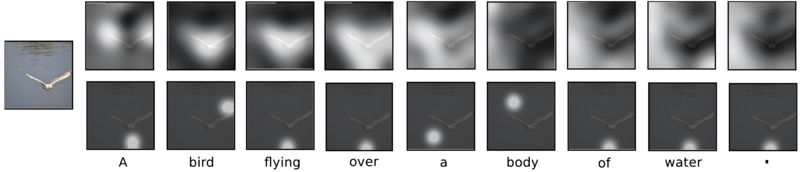

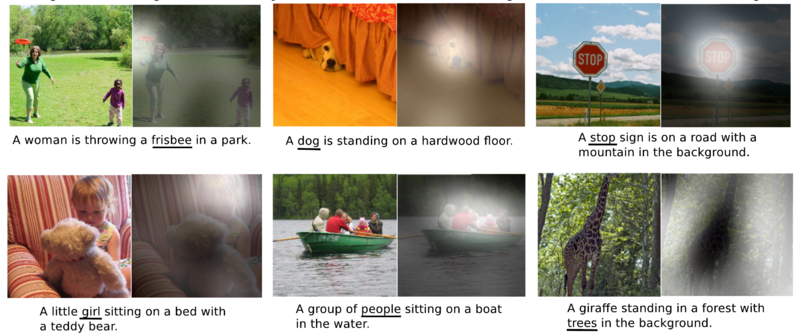

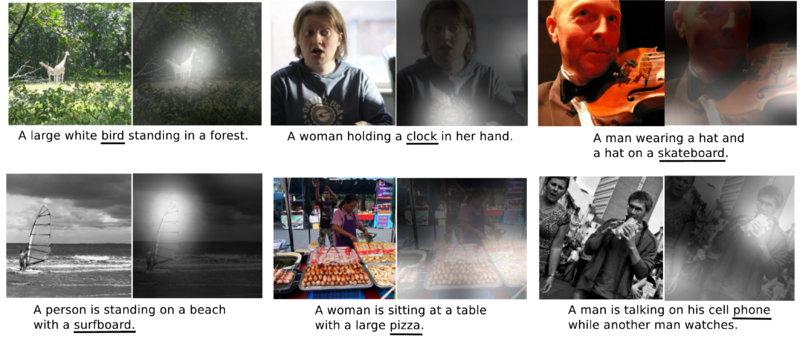

5.4. Qualitative Analysis: Learning to Attend

By visualizing the attention learned by the model, we are able to add an extra layer of interpretability to the output of the model (see Fig. 1). Other systems that have done this rely on object detection systems to produce candidate alignment targets (Karpathy & Li, 2014). Our approach is much more flexible, since the model can attend to “non-object” salient regions.

The 19-layer OxfordNet uses stacks of 3×3 filters, meaning the feature maps decrease in size only at the max pooling layers. The input image is resized so that the shortest side is 256-dimensional with preserved aspect ratio. The input to the convolutional network is the center-cropped 224 × 224 image. Consequently, with four max pooling layers, we get an output dimension of the top convolutional layer of 14×14. Thus in order to visualize the attention weights for the soft model, we upsample the weights by a factor of $2^4 = 16$ and apply a Gaussian filter to emulate the large receptive field size.
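The arithmetic is $224 / 2^4 = 14$, so each attention weight covers a 16×16 patch of the cropped input. The sketch below shows only the upsampling step, using nearest-neighbour replication as an assumed interpolation scheme; the Gaussian smoothing is omitted and the function name is hypothetical.

```python
def upsample_nearest(weights, factor):
    """Repeat every cell of a 2-D grid factor times along both axes."""
    out = []
    for row in weights:
        wide = [w for w in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out

# A 14 x 14 attention map with distinct values, upsampled to 224 x 224.
size = 14
attention = [[float(r * size + c) for c in range(size)] for r in range(size)]
heatmap = upsample_nearest(attention, 2 ** 4)
```

Pixel `(y, x)` of the heat map then shows the weight of grid cell `(y // 16, x // 16)`, which can be overlaid on the 224 × 224 crop.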

As we can see in Figs. 3 and 4, the model learns alignments that agree very strongly with human intuition. Especially from the examples of mistakes in Fig. 5, we see that it is possible to exploit such visualizations to get an intuition as to why those mistakes were made. We provide a more extensive list of visualizations as the supplementary materials for the reader.


6. Conclusion

We propose an attention-based approach that gives state-of-the-art performance on three benchmark datasets using the BLEU and METEOR metrics. We also show how the learned attention can be exploited to give more interpretability into the model's generation process, and demonstrate that the learned alignments correspond very well to human intuition. We hope that the results of this paper will encourage future work in using visual attention. We also expect the modularity of the encoder-decoder approach combined with attention to have useful applications in other domains.

Acknowledgments

The authors would like to thank the developers of Theano (Bergstra et al., 2010; Bastien et al., 2012). We acknowledge the support of the following organizations for research funding and computing support: NSERC, Samsung, NVIDIA, Calcul Québec, Compute Canada, the Canada Research Chairs and CIFAR. The authors would also like to thank Nitish Srivastava for assistance with his ConvNet package as well as preparing the Oxford convolutional network and Relu Patrascu for helping with numerous infrastructure-related problems.

References

2015a

- (Gregor et al., 2015) ⇒ Gregor, Karol, Danihelka, Ivo, Graves, Alex, and Wierstra, Daan. Draw: A Recurrent Neural Network for Image Generation. arXiv Preprint ArXiv:1502.04623, 2015.

2015b

- (Yao et al., 2015) ⇒ Li Yao, Atousa Torabi, Kyunghyun Cho, Nicolas Ballas, Christopher Pal, Hugo Larochelle, Aaron Courville. Describing Videos by Exploiting Temporal Structure. Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), p.4507-4515, December 07-13, 2015.

2014a

- (Ba et al., 2014) ⇒ Ba, Jimmy Lei, Mnih, Volodymyr, and Kavukcuoglu, Koray. Multiple Object Recognition with Visual Attention. arXiv:1412.7755 [cs.LG], December 2014.

2014b

- (Bahdanau et al., 2014) ⇒ Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. (2014). “Neural Machine Translation by Jointly Learning to Align and Translate.” In: Proceedings of the Third International Conference on Learning Representations, (ICLR-2015).

2014c

- (Baldi & Sadowski, 2014) ⇒ Pierre Baldi, Peter Sadowski. The Dropout Learning Algorithm. Artificial Intelligence, 210, p.78-122, May, 2014.

2014d

- (Chen & Zitnick, 2014) ⇒ Chen, Xinlei and Zitnick, C Lawrence. Learning a Recurrent Visual Representation for Image Caption Generation. arXiv Preprint ArXiv:1411.5654, 2014.

2014d

- (Cho et al., 2014a) ⇒ Kyunghyun Cho, Bart van Merrienboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. (2014). “Learning Phrase Representations Using RNN Encoder-Decoder for Statistical Machine Translation”. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP-2014). arXiv:1406.1078

2014e

- (Denkowski & Lavie, 2014) ⇒ Michael J. Denkowski, and Alon Lavie. (2014). “Meteor Universal: Language Specific Translation Evaluation for Any Target Language.” In: Proceedings of the Ninth Workshop on Statistical Machine Translation (WMT@ACL 2014). DOI:10.3115/v1/W14-3348.

2014f

- (Donahue et al., 2014) ⇒ Donahue, Jeff, Hendricks, Lisa Anne, Guadarrama, Sergio, Rohrbach, Marcus, Venugopalan, Subhashini, Saenko, Kate, and Darrell, Trevor. Long-term Recurrent Convolutional Networks for Visual Recognition and Description. arXiv:1411.4389v2 [cs.CV], November 2014.

2014g

- (Fang et al., 2014) ⇒ Fang, Hao, Gupta, Saurabh, Iandola, Forrest, Srivastava, Rupesh, Deng, Li, Dollár, Piotr, Gao, Jianfeng, He, Xiaodong, Mitchell, Margaret, Platt, John, et al. From Captions to Visual Concepts and Back. arXiv:1411.4952 [cs.CV], November 2014.

2014h

- (Karpathy & Li, 2014) ⇒ Karpathy, Andrej and Li, Fei-Fei. Deep Visual-semantic Alignments for Generating Image Descriptions. arXiv:1412.2306 [cs.CV], December 2014.

2014i

- (Kingma & Ba, 2014) ⇒ Kingma, Diederik P. and Ba, Jimmy. Adam: A Method for Stochastic Optimization. arXiv:1412.6980 [cs.LG], December 2014.

2014j

- (Kingma & Welling, 2014) ⇒ Kingma, Durk P. and Welling, Max. Auto-encoding Variational Bayes. In: Proceedings of the International Conference on Learning Representations (ICLR), 2014.

2014k

- (Kiros et al., 2014a) ⇒ Kiros, Ryan, Salakhutdinov, Ruslan, and Zemel, Richard. Multimodal Neural Language Models. In: Proceedings of The International Conference on Machine Learning, pp. 595-603, 2014.

2014l

- (Kiros et al., 2014b) ⇒ Kiros, Ryan, Salakhutdinov, Ruslan, and Zemel, Richard. Unifying Visual-semantic Embeddings with Multimodal Neural Language Models. arXiv:1411.2539 [cs.LG], November 2014.

2014m

- (Kuznetsova et al., 2014) ⇒ Kuznetsova, Polina, Ordonez, Vicente, Berg, Tamara L, and Choi, Yejin. TreeTalk: Composition and Compression of Trees for Image Descriptions. TACL, 2(10):351-362, 2014.

2014n

- (Lin et al., 2014) ⇒ Tsung-Yi Lin, Michael Maire, Serge J. Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollar, and C. Lawrence Zitnick. (2014). “Microsoft COCO: Common Objects in Context.” In: Proceedings of the 13th European Conference on Computer Vision, Part V (ECCV 2014).

2014o

- (Mao et al., 2014) ⇒ Mao, Junhua, Xu, Wei, Yang, Yi, Wang, Jiang, and Yuille, Alan. Deep Captioning with Multimodal Recurrent Neural Networks (m-rnn). arXiv:1412.6632 [cs.CV], December 2014.

2014p

- (Mnih et al., 2014) ⇒ Mnih, Volodymyr, Heess, Nicolas, Graves, Alex, and Kavukcuoglu, Koray. Recurrent Models of Visual Attention. Proceedings of the 27th International Conference on Neural Information Processing Systems, p.2204-2212, December 08-13, Montreal, Canada, 2014.

2014q

- (Pascanu et al., 2014) ⇒ Pascanu, Razvan, Gulcehre, Caglar, Cho, Kyunghyun, and Bengio, Yoshua. How to Construct Deep Recurrent Neural Networks. In ICLR, 2014.

2014r

- (Russakovsky et al., 2014) ⇒ Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. (2014). “ImageNet Large Scale Visual Recognition Challenge.” In: International Journal of Computer Vision (IJCV). DOI:10.1007/s11263-015-0816-y.

2014s

- (Simonyan & Zisserman, 2014) ⇒ Simonyan, K. and Zisserman, A. Very Deep Convolutional Networks for Large-scale Image Recognition. arXiv Preprint ArXiv:1409.1556, 2014.

2014t

- (Snoek et al., 2014) ⇒ Snoek, Jasper, Swersky, Kevin, Zemel, Richard S, and Adams, Ryan P. Input Warping for Bayesian Optimization of Nonstationary Functions. arXiv Preprint ArXiv:1402.0929, 2014.

2014u

- (Srivastava et al., 2014) ⇒ Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. (2014). “Dropout: A Simple Way to Prevent Neural Networks from Overfitting.” In: The Journal of Machine Learning Research, 15(1).

2014v

- (Sutskever et al., 2014) ⇒ Ilya Sutskever, Oriol Vinyals, and Quoc V. Le. (2014). “Sequence to Sequence Learning with Neural Networks.” In: Proceedings of the Advances in Neural Information Processing Systems 27: Annual Conference on Neural Information Processing Systems (NIPS 2014).

2014w

- (Szegedy et al., 2014) ⇒ Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott E. Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. (2014, 2015). “Going Deeper with Convolutions.” In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR). doi:10.1109/CVPR.2015.7298594

2014x

- (Tang et al., 2014) ⇒ Yichuan Tang, Nitish Srivastava, Ruslan Salakhutdinov. Learning Generative Models with Visual Attention. Proceedings of the 27th International Conference on Neural Information Processing Systems, p.1808-1816, December 08-13, Montreal, Canada, 2014.

2014y

- (Vinyals et al., 2014) ⇒ Vinyals, Oriol, Toshev, Alexander, Bengio, Samy, and Erhan, Dumitru. Show and Tell: A Neural Image Caption Generator. arXiv:1411.4555 [cs.CV], November 2014.

2014z

- (Rezende et al., 2014) ⇒ Rezende, Danilo J., Mohamed, Shakir, and Wierstra, Daan. Stochastic Backpropagation and Approximate Inference in Deep Generative Models. Technical Report, ArXiv:1401.4082, 2014.

2014aa

- (Young et al., 2014) ⇒ Young, Peter, Lai, Alice, Hodosh, Micah, and Hockenmaier, Julia. From Image Descriptions to Visual Denotations: New Similarity Metrics for Semantic Inference over Event Descriptions. TACL, 2:67-78, 2014.

2014ab

- (Zaremba et al., 2014) ⇒ Zaremba, Wojciech, Sutskever, Ilya, and Vinyals, Oriol. Recurrent Neural Network Regularization. arXiv Preprint ArXiv:1409.2329, September 2014.

2013a

- (Elliott & Keller, 2013) ⇒ Elliott, Desmond and Keller, Frank. Image Description Using Visual Dependency Representations. In EMNLP, pp. 1292-1302, 2013.

2013b

- (Graves, 2013) ⇒ Graves, Alex. Generating Sequences with Recurrent Neural Networks. Technical Report, ArXiv Preprint ArXiv:1308.0850, 2013.

2013c

- (Hodosh et al., 2013) ⇒ Micah Hodosh, Peter Young, Julia Hockenmaier. Framing Image Description As a Ranking Task: Data, Models and Evaluation Metrics. Journal of Artificial Intelligence Research, v.47 n.1, p.853-899, May 2013.

2013d

- (Kalchbrenner & Blunsom, 2013) ⇒ Kalchbrenner, Nal and Blunsom, Phil. Recurrent Continuous Translation Models. In: Proceedings of the ACL Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 1700-1709. Association for Computational Linguistics, 2013.

2013e

- (Kulkarni et al., 2013) ⇒ Girish Kulkarni, Visruth Premraj, Vicente Ordonez, Sagnik Dhar, Siming Li, Yejin Choi, Alexander C. Berg, Tamara L. Berg. BabyTalk: Understanding and Generating Simple Image Descriptions. IEEE Transactions on Pattern Analysis and Machine Intelligence, v.35 n.12, p.2891-2903, December 2013.

2012a

- (Bastien et al., 2012) ⇒ Bastien, Frederic, Lamblin, Pascal, Pascanu, Razvan, Bergstra, James, Goodfellow, Ian, Bergeron, Arnaud, Bouchard, Nicolas, Warde-Farley, David, and Bengio, Yoshua. Theano: New Features and Speed Improvements. Submitted to the Deep Learning and Unsupervised Feature Learning NIPS 2012 Workshop, 2012.

2012b

- (Denil et al., 2012) ⇒ Misha Denil, Loris Bazzani, Hugo Larochelle, Nando De Freitas. Learning Where to Attend with Deep Architectures for Image Tracking. Neural Computation, v.24 n.8, p.2151-2184, August 2012.

2012c

- (Krizhevsky et al., 2012) ⇒ Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton. (2012). “ImageNet Classification with Deep Convolutional Neural Networks.” In: Proceedings of the 25th International Conference on Neural Information Processing Systems.

2012d

- (Kuznetsova et al., 2012) ⇒ Polina Kuznetsova, Vicente Ordonez, Alexander C. Berg, Tamara L. Berg, Yejin Choi. Collective Generation of Natural Image Descriptions. Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics: Long Papers, July 08-14, Jeju Island, Korea, 2012.

2012e

- (Mitchell et al., 2012) ⇒ Margaret Mitchell, Xufeng Han, Jesse Dodge, Alyssa Mensch, Amit Goyal, Alex Berg, Kota Yamaguchi, Tamara Berg, Karl Stratos, Hal Daumé, III. Midge: Generating Image Descriptions from Computer Vision Detections. Proceedings of the 13th Conference of the European Chapter of the Association for Computational Linguistics, April 23-27, Avignon, France, 2012.

2012f

- (Snoek et al., 2012) ⇒ Jasper Snoek, Hugo Larochelle, and Ryan P. Adams. (2012). “Practical Bayesian Optimization of Machine Learning Algorithms.” In: Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS-2012).

2011a

- (Li et al., 2011) ⇒ Siming Li, Girish Kulkarni, Tamara L. Berg, Alexander C. Berg, Yejin Choi. Composing Simple Image Descriptions Using Web-scale N-grams. Proceedings of the Fifteenth Conference on Computational Natural Language Learning, p.220-228, June 23-24, Portland, Oregon, 2011.

2011b

- (Yang et al., 2011) ⇒ Yezhou Yang, Ching Lik Teo, Hal Daumé, III, Yiannis Aloimonos. Corpus-guided Sentence Generation of Natural Images. Proceedings of the Conference on Empirical Methods in Natural Language Processing, July 27-31, Edinburgh, United Kingdom, 2011.

2010a

- (Bergstra et al., 2010) ⇒ Bergstra, James, Breuleux, Olivier, Bastien, Frédéric, Lamblin, Pascal, Pascanu, Razvan, Desjardins, Guillaume, Turian, Joseph, Warde-Farley, David, and Bengio, Yoshua. Theano: A CPU and GPU Math Expression Compiler. In: Proceedings of the Python for Scientific Computing Conference (SciPy), 2010.

2010b

- (Larochelle & Hinton, 2010) ⇒ Hugo Larochelle, Geoffrey Hinton. Learning to Combine Foveal Glimpses with a Third-order Boltzmann Machine. Proceedings of the 23rd International Conference on Neural Information Processing Systems, p.1243-1251, December 06-09, Vancouver, British Columbia, Canada, 2010.

2002

- (Corbetta & Shulman, 2002) ⇒ Corbetta, Maurizio and Shulman, Gordon L. Control of Goal-directed and Stimulus-driven Attention in the Brain. Nature Reviews Neuroscience, 3(3):201-215, 2002.

2001

- (Weaver & Tao, 2001) ⇒ Lex Weaver, Nigel Tao. The Optimal Reward Baseline for Gradient-based Reinforcement Learning. Proceedings of the Seventeenth Conference on Uncertainty in Artificial Intelligence, p.538-545, August 02-05, Seattle, Washington, 2001.

2000

- (Rensink, 2000) ⇒ Rensink, Ronald A. The Dynamic Representation of Scenes. Visual Cognition, 7(1-3):17-42, 2000.

1997

- (Hochreiter & Schmidhuber, 1997) ⇒ Sepp Hochreiter, and Jürgen Schmidhuber. (1997). “Long Short-term Memory.” In: Neural computation, 9(8). DOI:10.1162/neco.1997.9.8.1735.

1992

- (Williams, 1992) ⇒ Ronald J Williams. Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning, Machine Learning, v.8 n.3-4, p.229-256, May 1992.

BibTeX

@inproceedings{2015_ShowAttendandTellNeuralImageCa,
  author    = {Kelvin Xu and
               Jimmy Ba and
               Ryan Kiros and
               Kyunghyun Cho and
               Aaron C. Courville and
               Ruslan Salakhutdinov and
               Richard S. Zemel and
               Yoshua Bengio},
  editor    = {Francis R. Bach and
               David M. Blei},
  title     = {Show, Attend and Tell: Neural Image Caption Generation with Visual Attention},
  booktitle = {Proceedings of the 32nd International Conference on Machine Learning, (ICML 2015)},
  series    = {JMLR Workshop and Conference Proceedings},
  volume    = {37},
  pages     = {2048--2057},
  publisher = {JMLR.org},
  year      = {2015},
  month     = {July},
  url       = {http://proceedings.mlr.press/v37/xuc15.html},
}

| | Author | title | year |
|---|---|---|---|
| 2015 ShowAttendandTellNeuralImageCap | Yoshua Bengio, Aaron Courville, Ruslan Salakhutdinov, Richard S. Zemel, Kyunghyun Cho, Ryan Kiros, Kelvin Xu, Jimmy Lei Ba | Show, Attend and Tell: Neural Image Caption Generation with Visual Attention | 2015 |

- ↑ https://www.whetlab.com/

- ↑ https://github.com/kelvinxu/arctic-captions

- ↑ We verified that our BLEU evaluation code matches that of the authors of Vinyals et al. (2014), Karpathy & Li (2014) and Kiros et al. (2014b). For fairness, we only compare against results for which we have verified that our BLEU evaluation code is the same.

- ↑ BLEU-n is the geometric average of the n-gram precision. For instance, BLEU-1 is the unigram precision, and BLEU-2 is the geometric average of the unigram and bigram precision.

- ↑ http://cs.stanford.edu/people/karpathy/deepimagesent/
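
The BLEU-n definition in the footnote above (the geometric average of the 1-gram through n-gram precisions) can be illustrated with a short sketch. This is not the evaluation code used in the paper: the function names are hypothetical, the score is computed for a single sentence rather than a corpus, the precisions are the usual clipped ("modified") n-gram precisions, and no brevity penalty is applied. These are all assumptions made for illustration.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, references, n):
    """Clipped n-gram precision of a candidate against one or more references."""
    cand_counts = Counter(ngrams(candidate, n))
    if not cand_counts:
        return 0.0
    # For each n-gram, the largest count seen in any single reference.
    max_ref = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

def bleu_n(candidate, references, n):
    """Geometric mean of the 1..n-gram precisions (no brevity penalty here)."""
    precisions = [modified_precision(candidate, references, k)
                  for k in range(1, n + 1)]
    if min(precisions) == 0.0:
        return 0.0
    return math.exp(sum(math.log(p) for p in precisions) / n)

candidate = "a dog runs on the grass".split()
references = ["a dog runs on grass".split()]
bleu1 = bleu_n(candidate, references, 1)  # unigram precision: 5 of 6 match
bleu2 = bleu_n(candidate, references, 2)  # sqrt(5/6 * 3/5)
```

In this toy example five of the six candidate unigrams and three of the five candidate bigrams appear in the reference, so BLEU-1 is 5/6 and BLEU-2 is the square root of 5/6 × 3/5 = 0.5.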