2016 AReviewofRelationalMachineLearn

- (Nickel et al., 2016) ⇒ Maximilian Nickel, Kevin Murphy, Volker Tresp, and Evgeniy Gabrilovich. (2016). “A Review of Relational Machine Learning for Knowledge Graphs.” In: Proceedings of the IEEE (invited paper). 104(1). DOI:10.1109/JPROC.2015.2483592.

Subject Headings: Relational Machine Learning Algorithm, SPO Triple, Google Knowledge Vault.

Notes

- Other Version(s):

- (Nickel et al., 2015) ⇒ Maximilian Nickel, Kevin Murphy, Volker Tresp, and Evgeniy Gabrilovich. (2015). “A Review of Relational Machine Learning for Knowledge Graphs: From Multi-Relational Link Prediction to Automated Knowledge Graph Construction.” ArXiv:1503.00759.

- DSpace MIT Library: https://dspace.mit.edu/bitstream/handle/1721.1/100193/CBMM-Memo-028.pdf

- Link(s):

Cited By

- Google Scholar: ~ 749 Citations.

- Semantic Scholar: ~ 571 Citations.

- MS Academic: ~ 96 - 742 Citations.

Quotes

Abstract

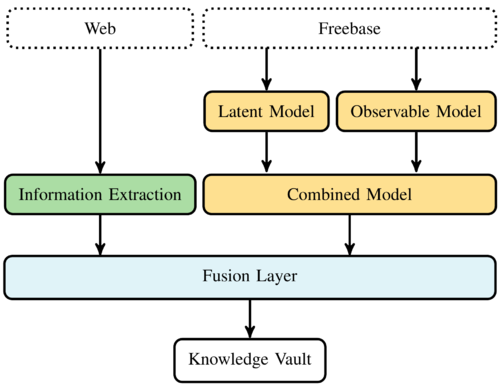

Relational machine learning studies methods for the statistical analysis of relational, or graph-structured data. In this paper, we provide a review of how such statistical models can be “trained” on large knowledge graphs, and then used to predict new facts about the world (which is equivalent to predicting new edges in the graph). In particular, we discuss two fundamentally different kinds of statistical relational models, both of which can scale to massive data sets. The first is based on latent feature models such as tensor factorization and multi-way neural networks. The second is based on mining observable patterns in the graph. We also show how to combine these latent and observable models to get improved modeling power at decreased computational cost. Finally, we discuss how such statistical models of graphs can be combined with text-based information extraction methods for automatically constructing knowledge graphs from the Web. To this end, we also discuss Google's knowledge vault project as an example of such combination.

I. INTRODUCTION

| “I am convinced that the crux of the problem of learning is recognizing relationships and being able to use them” |

Traditional machine learning algorithms take as input a feature vector, which represents an object in terms of numeric or categorical attributes. The main learning task is to learn a mapping from this feature vector to an output prediction of some form. This could be class labels, a regression score, or an unsupervised cluster id or latent vector (embedding). In statistical relational learning (SRL), the representation of an object can contain its relationships to other objects. Thus the data is in the form of a graph, consisting of nodes (entities) and labeled edges (relationships between entities). The main goals of SRL include prediction of missing edges, prediction of properties of nodes, and clustering nodes based on their connectivity patterns. These tasks arise in many settings such as analysis of social networks and biological pathways. For further information on SRL, see [1]–[3].

In this paper, we review a variety of techniques from the SRL community and explain how they can be applied to large-scale knowledge graphs (KGs), i.e., graph-structured knowledge bases (KBs) that store factual information in the form of relationships between entities. Recently, a large number of knowledge graphs have been created, including YAGO [4], DBpedia [5], NELL [6], Freebase [7], and the Google Knowledge Graph [8]. As we discuss in Section II, these graphs contain millions of nodes and billions of edges. This leads us to focus on scalable SRL techniques, which take time that is (at most) linear in the size of the graph.

We can apply SRL methods to existing KGs to learn a model that can predict new facts (edges) given existing facts. We can then combine this approach with information extraction methods that extract “noisy” facts from the Web (see, e.g., [9] and [10]). For example, suppose an information extraction method returns a fact claiming that Barack Obama was born in Kenya, and suppose (for illustration purposes) that the true place of birth of Obama was not already stored in the knowledge graph. An SRL model can use related facts about Obama (such as his profession being U.S. President) to infer that this new fact is unlikely to be true and should be discarded. This provides us with a way to “grow” a KG automatically, as we explain in more detail in Section IX.

The remainder of this paper is structured as follows. In Section II, we introduce knowledge graphs and some of their properties. Section III discusses SRL and how it can be applied to knowledge graphs. There are two main classes of SRL techniques: those that capture the correlation between the nodes/edges using latent variables, and those that capture the correlation directly using statistical models based on the observable properties of the graph. We discuss these two families in Sections IV and V, respectively. Section VI describes methods for combining these two approaches, in order to get the best of both worlds. Section VII discusses how such models can be trained on KGs. In Section VIII, we discuss relational learning using Markov Random Fields. In Section IX, we describe how SRL can be used in automated knowledge base construction projects. In Section X, we discuss extensions of the presented methods, and Section XI presents our conclusions.

II. KNOWLEDGE GRAPHS

In this section, we introduce knowledge graphs, and discuss how they are represented, constructed, and used.

A. Knowledge Representation

Knowledge graphs model information in the form of entities and relationships between them. This kind of relational knowledge representation has a long history in logic and artificial intelligence [11], for example, in semantic networks [12] and frames [13]. More recently, it has been used in the Semantic Web community with the purpose of creating a “web of data” that is readable by machines [14]. While this vision of the Semantic Web remains to be fully realized, parts of it have been achieved. In particular, the concept of linked data [15], [16] has gained traction, as it facilitates publishing and interlinking data on the Web in relational form using the W3C Resource Description Framework (RDF) [17], [18]. (For an introduction to knowledge representation, see, e.g., [11], [19], and [20].)

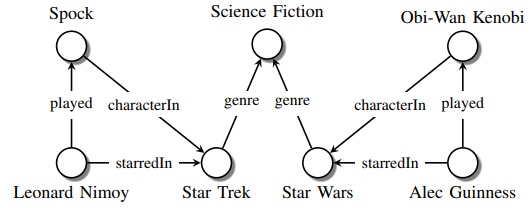

In this paper, we will loosely follow the RDF standard and represent facts in the form of binary relationships, in particular (subject, predicate, object) (SPO) triples, where subject and object are entities and predicate is the relation between them. (We discuss how to represent higher arity relations in Section X-A.) The existence of a particular SPO triple indicates an existing fact, i.e., that the respective entities are in a relationship of the given type. For instance, the information

{|style="border: 0px; text-align:center; border-spacing: 1px; margin: 1em auto; width: 80%"
|-
|“Leonard Nimoy was an actor who played the character Spock in the science-fiction movie Star Trek”
|}

can be expressed via the following set of SPO triples:

{|class="wikitable" style="border:1px; text-align:center; solid black; border-spacing:1px; margin: 1em auto; width: 80%"
|-
!subject!!predicate!!object
|-
|(LeonardNimoy,||profession,||Actor)
|-
|(LeonardNimoy,||starredIn,||StarTrek)
|-
|(LeonardNimoy,||played,||Spock)
|-
|(Spock,||characterIn,||StarTrek)
|-
|(StarTrek,||genre,||ScienceFiction)
|}
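A minimal sketch in Python (an illustrative choice; the paper prescribes no storage format, and the helper names are hypothetical) that stores the triples from the table above as tuples and groups them into a directed, edge-labeled multigraph keyed by subject:

```python
from collections import defaultdict

# The example facts as (subject, predicate, object) tuples.
TRIPLES = [
    ("LeonardNimoy", "profession", "Actor"),
    ("LeonardNimoy", "starredIn", "StarTrek"),
    ("LeonardNimoy", "played", "Spock"),
    ("Spock", "characterIn", "StarTrek"),
    ("StarTrek", "genre", "ScienceFiction"),
]


def build_multigraph(triples):
    """Map each subject to its outgoing (predicate, object) edges.

    Edges point from subject to object and the predicate serves as the
    edge label, so two nodes may be joined by several differently typed edges.
    """
    graph = defaultdict(list)
    for subj, pred, obj in triples:
        graph[subj].append((pred, obj))
    return dict(graph)


def entities(triples):
    """Nodes of the graph: every entity occurring as a subject or an object."""
    return {s for s, _, _ in triples} | {o for _, _, o in triples}


graph = build_multigraph(TRIPLES)
print(graph["LeonardNimoy"])
# [('profession', 'Actor'), ('starredIn', 'StarTrek'), ('played', 'Spock')]
```

Entities that only ever appear as objects (here Actor and ScienceFiction) are nodes without outgoing edges, which is why `entities` collects both positions.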

We can combine all the SPO triples together to form a multigraph, where nodes represent entities (all subjects and objects), and directed edges represent relationships. The direction of an edge indicates whether entities occur as subjects or objects, i.e., an edge points from the subject to the object. Different relations are represented via different types of edges (also called edge labels). This construction is called a knowledge graph (KG), or sometimes a heterogeneous information network [21]. See Fig. 1 for an example.

In addition to being a collection of facts, knowledge graphs often provide type hierarchies (Leonard Nimoy is an actor, which is a person, which is a living thing) and type constraints (e.g., a person can only marry another person, not a thing).


B. Open Versus Closed World Assumption

While existing triples always encode known true relationships (facts), there are different paradigms for the interpretation of nonexisting triples.

- Under the closed world assumption (CWA), nonexisting triples indicate false relationships. For example, the absence of a starredIn edge from Leonard Nimoy to Star Wars in Fig. 1 is interpreted to mean that Nimoy definitely did not star in this movie.

- Under the open world assumption (OWA), a nonexisting triple is interpreted as unknown, i.e., the corresponding relationship can be either true or false. Continuing with the above example, the missing edge is not interpreted to mean that Nimoy did not star in Star Wars. This more cautious approach is justified, since KGs are known to be very incomplete. For example, sometimes just the main actors in a movie are listed, not the complete cast. As another example, note that even the place of birth attribute, which one might expect to be known in most cases, is missing for 71% of all people included in Freebase [22].
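To make the difference concrete, here is a small sketch in Python (the function name `holds` and the toy fact set are hypothetical) that returns the truth value of a triple under each assumption, with `None` standing for “unknown”:

```python
from typing import Optional, Set, Tuple

Triple = Tuple[str, str, str]

# A toy, deliberately incomplete set of known facts.
KNOWN: Set[Triple] = {
    ("LeonardNimoy", "starredIn", "StarTrek"),
    ("AlecGuinness", "starredIn", "StarWars"),
}


def holds(triple: Triple, kb: Set[Triple], assumption: str = "open") -> Optional[bool]:
    """Truth value of `triple` given the known facts `kb`.

    Existing triples are always true. A nonexisting triple is false under
    the closed world assumption and unknown (None) under the open world
    assumption.
    """
    if triple in kb:
        return True
    if assumption == "closed":
        return False
    if assumption == "open":
        return None
    raise ValueError(f"unknown assumption: {assumption!r}")


query = ("LeonardNimoy", "starredIn", "StarWars")
print(holds(query, KNOWN, "closed"))  # False
print(holds(query, KNOWN, "open"))    # None
```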

RDF and the Semantic Web make the open-world assumption. In Section VII-B we also discuss the local closed world assumption (LCWA), which is often used for training relational models.

C. Knowledge Base Construction

Completeness, accuracy, and data quality are important parameters that determine the usefulness of knowledge bases and are influenced by the way knowledge bases are constructed. We can classify KB construction methods into four main groups:

- in curated approaches, triples are created manually by a closed group of experts;

- in collaborative approaches, triples are created manually by an open group of volunteers;

- in automated semistructured approaches, triples are extracted automatically from semistructured text (e.g., infoboxes in Wikipedia) via hand-crafted rules, learned rules, or regular expressions;

- in automated unstructured approaches, triples are extracted automatically from unstructured text via machine learning and natural language processing techniques (see, e.g., [9] for a review).

Construction of curated knowledge bases typically leads to highly accurate results, but this technique does not scale well due to its dependence on human experts. Collaborative knowledge base construction, which was used to build Wikipedia and Freebase, scales better but still has some limitations. For instance, as mentioned previously, the place of birth attribute is missing for 71% of all people included in Freebase, even though this is a mandatory property of the schema [22]. Also, a recent study [35] found that the growth of Wikipedia has been slowing down. Consequently, automatic knowledge base construction methods have been gaining more attention.

Such methods can be grouped into two main approaches. The first approach exploits semistructured data, such as Wikipedia infoboxes, which has led to large, highly accurate knowledge graphs such as YAGO [4], [27] and DBpedia [5]. The accuracy (trustworthiness) of facts in such automatically created KGs is often still very high. For instance, the accuracy of YAGO2 has been estimated[1] to be over 95% through manual inspection of sample facts [36], and the accuracy of Freebase [7] was estimated to be 99%.[2] However, semistructured text still covers only a small fraction of the information stored on the Web, and completeness (or coverage) is another important aspect of KGs. Hence the second approach tries to “read the Web,” extracting facts from the natural language text of Web pages. Example projects in this category include NELL [6] and the Knowledge Vault [28]. In Section IX, we show how we can reduce the level of “noise” in such automatically extracted facts by using the knowledge from existing, high-quality repositories.

KGs, and more generally KBs, can also be classified based on whether they employ a fixed or open lexicon of entities and relations. In particular, we distinguish two main types of KBs.

- In schema-based approaches, entities and relations are represented via globally unique identifiers, and all possible relations are predefined in a fixed vocabulary. For example, Freebase might represent the fact that Barack Obama was born in Hawaii using the triple (/m/02mjmr, /people/person/born-in, /m/03gh4), where /m/02mjmr is the unique machine ID for Barack Obama.

- In schema-free approaches, entities and relations are identified using open information extraction (OpenIE) techniques [37] and represented via normalized but not disambiguated strings (also referred to as surface names). For example, an OpenIE system may contain triples such as (“Obama”, “born in”, “Hawaii”), (“Barack Obama”, “place of birth”, “Honolulu”), etc. Note that it is not clear from this representation whether the first triple refers to the same person as the second triple, nor whether “born in” means the same thing as “place of birth”. This is the main disadvantage of OpenIE systems.

Table I lists current knowledge base construction projects classified by their creation method and data schema. In this paper, we will only focus on schema-based KBs. Table II shows a selection of such KBs and their sizes.

| Method | Schema | Examples |
|---|---|---|
| Curated | Yes | Cyc/OpenCyc [23], WordNet [24], UMLS [25] |
| Collaborative | Yes | Wikidata [26], Freebase [7] |
| Auto. Semi-Structured | Yes | YAGO [4], [27], DBpedia [5], Freebase [7] |
| Auto. Unstructured | Yes | Knowledge Vault [28], NELL [6], PATTY [29], PROSPERA [30], DeepDive/Elementary [31] |
| Auto. Unstructured | No | ReVerb [32], OLLIE [33], PRISMATIC [34] |

| Knowledge Graph | Number of Entities | Number of Relation Types | Number of Facts |
|---|---|---|---|
| Freebase[3] | 40 M | 35,000 | 637 M |
| Wikidata[4] | 18 M | 1,632 | 66 M |
| DBpedia (en)[5] | 4.6 M | 1,367 | 538 M |
| YAGO2[6] | 9.8 M | 114 | 447 M |
| Google Knowledge Graph[7] | 570 M | 35,000 | 18,000 M |

D. Uses of Knowledge Graphs

Knowledge graphs provide semantically structured information that is interpretable by computers, a property that is regarded as an important ingredient to build more intelligent machines [38]. Consequently, knowledge graphs are already powering multiple "Big Data" applications in a variety of commercial and scientific domains. A prime example is the integration of Google’s Knowledge Graph, which currently stores 18 billion facts about 570 million entities, into the results of Google’s search engine [8]. The Google Knowledge Graph is used to identify and disambiguate entities in text, to enrich search results with semantically structured summaries, and to provide links to related entities in exploratory search. (Microsoft has a similar KB, called Satori, integrated with its Bing search engine [39].)

Enhancing search results with semantic information from knowledge graphs can be seen as an important step to transform text-based search engines into semantically aware question answering services. Another prominent example demonstrating the value of knowledge graphs is IBM’s question answering system Watson, which was able to beat human experts in the game of Jeopardy!. Among others, this system used YAGO, DBpedia, and Freebase as its sources of information [40]. Repositories of structured knowledge are also an indispensable component of digital assistants such as Siri, Cortana, or Google Now.

Knowledge graphs are also used in several specialized domains. For instance, Bio2RDF [41], Neurocommons [42], and LinkedLifeData [43] are knowledge graphs that integrate multiple sources of biomedical information. These have been used for question answering and decision support in the life sciences.

E. Main Tasks in Knowledge Graph Construction and Curation

In this section, we review a number of typical KG tasks. Link prediction is concerned with predicting the existence (or probability of correctness) of (typed) edges in the graph (i.e., triples). This is important since existing knowledge graphs are often missing many facts, and some of the edges they contain are incorrect [44]. In the context of knowledge graphs, link prediction is also referred to as knowledge graph completion. For example, in Fig. 1, suppose the characterIn edge from Obi-Wan Kenobi to Star Wars were missing; we might be able to predict this missing edge based on the structural similarity between this part of the graph and the part involving Spock and Star Trek. It has been shown that relational models that take the relationships of entities into account can significantly outperform nonrelational machine learning methods for this task (e.g., see [45] and [46]).

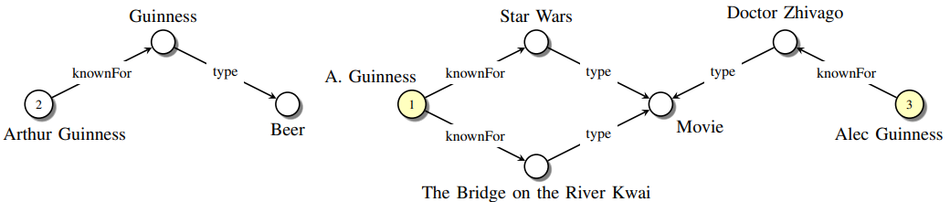

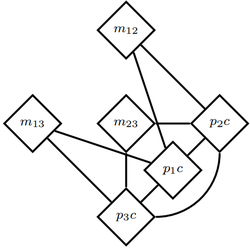

Entity resolution (also known as record linkage [47], object identification [48], instance matching [49], and deduplication [50]) is the problem of identifying which objects in relational data refer to the same underlying entities. See Fig. 2 for a small example. In a relational setting, the decisions about which objects are assumed to be identical can propagate through the graph, so that matching decisions are made collectively for all objects in a domain rather than independently for each object pair (see, for example, [51]–[53]). In schema-based automated knowledge base construction, entity resolution can be used to match the extracted surface names to entities stored in the knowledge graph.


Link-based clustering extends feature-based clustering to a relational learning setting and groups entities in relational data based on their similarity. In link-based clustering, however, entities are grouped not only by the similarity of their features but also by the similarity of their links. As in entity resolution, the similarity of entities can propagate through the knowledge graph, such that relational modeling can add important information for this task. In social network analysis, link-based clustering is also known as community detection [54].

III. STATISTICAL RELATIONAL LEARNING FOR KNOWLEDGE GRAPHS

Statistical Relational Learning is concerned with the creation of statistical models for relational data. In the following sections we discuss how statistical relational learning can be applied to knowledge graphs. We will assume that all the entities and (types of) relations in a knowledge graph are known. (We discuss extensions of this assumption in Section X-C.) However, triples are assumed to be incomplete and noisy, and entities and relation types may contain duplicates.

Notation: Before proceeding, let us define our mathematical notation. (Variable names will be introduced later in the appropriate sections.) We denote scalars by lower case letters, such as $a$; column vectors (of size $N$) by bold lower case letters, such as $\mathbf{a}$; matrices (of size $N_1\times N_2$) by bold upper case letters, such as $\mathbf{A}$; and tensors (of size $N_1 \times N_2 \times N_3$) by bold upper case letters with an underscore, such as $\mathbf{\underline{A}}$. We denote the $k$'th “frontal slice” of a tensor $\mathbf{\underline{A}}$ by $\mathbf{A}_k$ (which is a matrix of size $N_1 \times N_2$), and the $(i,j,k)$'th element by $a_{ijk}$ (which is a scalar). We use $[\mathbf{a}; \mathbf{b}]$ to denote the vertical stacking of vectors $\mathbf{a}$ and $\mathbf{b}$, i.e., $[\mathbf{a}; \mathbf{b}] = \begin{pmatrix} \mathbf{a} \\ \mathbf{b} \end{pmatrix}$. We can convert a matrix $\mathbf{A}$ of size $N_1\times N_2$ into a vector $\mathbf{a}$ of size $N_1N_2$ by stacking all columns of $\mathbf{A}$, denoted $\mathbf{a} = \operatorname{vec}(\mathbf{A})$. The inner (scalar) product of two vectors (both of size $N$) is defined by $\mathbf{a}^{\top} \mathbf{b} = \sum_{i=1}^N a_i b_i$. The tensor (Kronecker) product of two vectors (of size $N_1$ and $N_2$) is a vector of size $N_1N_2$ with entries

[math]\displaystyle{ \mathbf{a} \otimes \mathbf{b} = \begin{pmatrix} a_1\mathbf{b} \\ \vdots \\ a_{N_1}\mathbf{b} \end{pmatrix} }[/math].

Matrix multiplication is denoted by $\mathbf{AB}$ as usual. We denote the $L_2$ norm of a vector by $\parallel\mathbf{a}\parallel_2= \sqrt{\sum_ia_i^2}$, and the Frobenius norm of a matrix by $\parallel \mathbf{A}\parallel_F =\sqrt{\sum_i\sum_ja^2_{ij}}$. We denote the vector of all ones by $\mathbf{1}$, and the identity matrix by $\mathbf{I}$.
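The vector operations defined above can be sketched in plain Python (matrices as lists of rows; the helper names are hypothetical), which may help check the conventions, in particular that $\operatorname{vec}$ stacks columns rather than rows:

```python
import math


def vec(A):
    """Stack the columns of A (a list of rows) into a single vector."""
    n_rows, n_cols = len(A), len(A[0])
    return [A[i][j] for j in range(n_cols) for i in range(n_rows)]


def inner(a, b):
    """Inner product a^T b = sum_i a_i b_i of two equally sized vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same size")
    return sum(x * y for x, y in zip(a, b))


def kron(a, b):
    """Kronecker product: the blocks a_1 b, ..., a_N1 b stacked vertically."""
    return [x * y for x in a for y in b]


def l2_norm(a):
    """||a||_2 = sqrt(sum_i a_i^2)."""
    return math.sqrt(sum(x * x for x in a))


def frobenius_norm(A):
    """||A||_F = sqrt(sum_i sum_j a_ij^2)."""
    return math.sqrt(sum(x * x for row in A for x in row))


print(vec([[1, 2], [3, 4]]))        # [1, 3, 2, 4]
print(kron([1, 2], [3, 4]))         # [3, 4, 6, 8]
print(inner([1, 2, 3], [4, 5, 6]))  # 32
```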

A. Probabilistic Knowledge Graphs

We now introduce some mathematical background so we can more formally define statistical models for knowledge graphs.

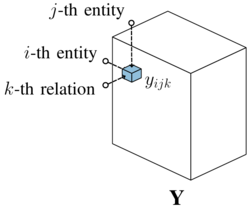

Let $\varepsilon = \{e_1,\cdots,e_{N_e}\}$ be the set of all entities and $\mathcal{R} = \{r_1,\cdots,r_{N_r}\}$ be the set of all relation types in a knowledge graph. We model each possible triple $x_{ijk} = \left(e_i, r_k, e_j \right)$ over this set of entities and relations as a binary random variable $y_{ijk} \in \{0,1\}$ that indicates its existence. All possible triples in $\varepsilon \times \mathcal{R} \times \varepsilon$ can be grouped naturally in a third-order tensor (three-way array) $\mathbf{\underline{Y}} \in \{0,1\}^{N_e \times N_e \times N_r}$, whose entries are set such that

[math]\displaystyle{ y_{ijk}= \begin{cases} 1, & \text{if the triple } \left(e_i,r_k,e_j\right) \text{ exists} \\ 0, & \text{otherwise.} \end{cases} }[/math]

We will refer to this construction as an adjacency tensor (cf. Figure 3). Each possible realization of $\mathbf{\underline{Y}}$ can be interpreted as a possible world. To derive a model for the entire knowledge graph, we are then interested in estimating the joint distribution $P\left(\mathbf{\underline{Y}}\right)$ from a subset $\mathcal{D} \subseteq \varepsilon \times \mathcal{R} \times \varepsilon$ of observed triples. In doing so, we are estimating a probability distribution over possible worlds, which allows us to predict the probability of triples based on the state of the entire knowledge graph. While $y_{ijk} = 1$ in adjacency tensors indicates the existence of a triple, the interpretation of $y_{ijk} = 0$ depends on whether the open world, closed world, or local-closed world assumption is made. For details, see Section II-B.
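A direct, dense construction of $\mathbf{\underline{Y}}$ from a list of triples can be sketched as follows (plain Python; the helper names and the sorted index assignment are hypothetical choices). It is only practical for toy graphs, since the tensor has $N_e^2 N_r$ entries:

```python
def adjacency_tensor(triples):
    """Build the N_e x N_e x N_r binary tensor Y with Y[i][j][k] = y_ijk.

    y_ijk = 1 iff the triple (e_i, r_k, e_j) is observed; all other entries
    are 0, and what a 0 means depends on the world assumption in use.
    """
    ents = sorted({s for s, _, _ in triples} | {o for _, _, o in triples})
    rels = sorted({p for _, p, _ in triples})
    ent_idx = {e: i for i, e in enumerate(ents)}
    rel_idx = {r: k for k, r in enumerate(rels)}
    n_e, n_r = len(ents), len(rels)
    Y = [[[0] * n_r for _ in range(n_e)] for _ in range(n_e)]
    for subj, pred, obj in triples:
        # Subject indexes the first mode, object the second, relation the third.
        Y[ent_idx[subj]][ent_idx[obj]][rel_idx[pred]] = 1
    return Y, ent_idx, rel_idx


def frontal_slice(Y, k):
    """Y_k: the N_e x N_e adjacency matrix of relation r_k."""
    n_e = len(Y)
    return [[Y[i][j][k] for j in range(n_e)] for i in range(n_e)]


triples = [
    ("LeonardNimoy", "played", "Spock"),
    ("Spock", "characterIn", "StarTrek"),
    ("LeonardNimoy", "starredIn", "StarTrek"),
]
Y, ent_idx, rel_idx = adjacency_tensor(triples)
```

Because the entries are indexed as (subject, object, relation), the tensor is not symmetric in its first two modes: `Y[Spock][StarTrek][characterIn]` is 1 while the reversed entry stays 0.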


Note that the size of $\mathbf{\underline{Y}}$ can be enormous for large knowledge graphs. For instance, in the case of Freebase, which currently consists of over 40 million entities and 35,000 relations, the number of possible triples $\vert \varepsilon \times \mathcal{R} \times \varepsilon\vert$ exceeds $10^{19}$. Of course, type constraints reduce this number considerably.

Even among the syntactically valid triples, only a tiny fraction are likely to be true. For example, there are over 450,000 actors and over 250,000 movies stored in Freebase, but each actor stars in only a small number of movies. Therefore, an important issue for SRL on knowledge graphs is how to deal with the large number of possible relationships while efficiently exploiting the sparsity of relationships. Ideally, a relational model for large-scale knowledge graphs should scale at most linearly with the data size, i.e., linearly in the number of entities $N_e$, linearly in the number of relations $N_r$, and linearly in the number of observed triples $\vert \mathcal{D}\vert = N_d$.
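A back-of-the-envelope check of these numbers, using the approximate Freebase figures from Table II (40 M entities, 35,000 relation types, 637 M facts) and ignoring type constraints; the variable names are illustrative:

```python
N_E = 40_000_000    # entities (approximate, Table II)
N_R = 35_000        # relation types
N_D = 637_000_000   # observed facts

possible = N_E * N_E * N_R       # |E x R x E|, number of entries in Y
density = N_D / possible         # fraction of entries that are observed facts
linear_size = N_E + N_R + N_D    # scale of a model linear in N_e, N_r, N_d

print(f"{possible:.1e} possible triples")  # 5.6e+19 possible triples
print(f"density {density:.1e}")            # density 1.1e-11
print(f"tensor size / linear size: {possible / linear_size:.0e}")
```

Roughly one entry in a hundred billion is an observed fact, which is why methods that touch every entry of $\mathbf{\underline{Y}}$ are ruled out at this scale.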

B. Statistical Properties of Knowledge Graphs

Knowledge graphs typically adhere to some deterministic rules, such as type constraints and transitivity (e.g., if Leonard Nimoy was born in Boston, and Boston is located in the USA, then we can infer that Leonard Nimoy was born in the USA). However, KGs typically also exhibit various “softer” statistical patterns or regularities, which are not universally true but nevertheless have useful predictive power.
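The transitivity example can be written as a deterministic rule, bornIn(x, y) and locatedIn(y, z) imply bornIn(x, z), and applied repeatedly until no new facts appear. A sketch in plain Python (the relation names follow the running example; the function name and the intermediate Massachusetts fact are illustrative additions):

```python
def apply_born_in_rule(triples):
    """Forward-chain bornIn(x, y) & locatedIn(y, z) => bornIn(x, z).

    Repeats until a fixed point, so chains of locatedIn edges are followed.
    Returns only the newly derived triples.
    """
    facts = set(triples)
    while True:
        located = {(s, o) for s, p, o in facts if p == "locatedIn"}
        new = {
            (x, "bornIn", z)
            for x, p, y in facts
            if p == "bornIn"
            for y2, z in located
            if y2 == y
        } - facts
        if not new:
            return facts - set(triples)
        facts |= new


kg = [
    ("LeonardNimoy", "bornIn", "Boston"),
    ("Boston", "locatedIn", "Massachusetts"),
    ("Massachusetts", "locatedIn", "USA"),
]
print(sorted(apply_born_in_rule(kg)))
# [('LeonardNimoy', 'bornIn', 'Massachusetts'), ('LeonardNimoy', 'bornIn', 'USA')]
```

Such a rule either fires or not; the statistical patterns discussed next have no such guarantee and are only useful in a probabilistic sense.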

One example of such a statistical pattern is known as homophily, that is, the tendency of entities to be related to other entities with similar characteristics. This has been widely observed in various social networks [55, 56]. For example, US-born actors are more likely to star in US-made movies. For multi-relational data (graphs with more than one kind of link), homophily has also been referred to as autocorrelation [57].

Another statistical pattern is known as block structure. This refers to the property where entities can be divided into distinct groups (blocks), such that all the members of a group have similar relationships to members of other groups [58, 59, 60]. For example, we can group some actors, such as Leonard Nimoy and Alec Guinness, into a science fiction actor block, and some movies, such as Star Trek and Star Wars, into a science fiction movie block, since there is a high density of links from the scifi actor block to the scifi movie block.

Graphs can also exhibit global and long-range statistical dependencies, i.e., dependencies that can span over chains of triples and involve different types of relations. For example, the citizenship of Leonard Nimoy (USA) depends statistically on the city where he was born (Boston), and this dependency involves a path over multiple entities (Leonard Nimoy, Boston, USA) and relations (bornIn, locatedIn, citizenOf). A distinctive feature of relational learning is that it is able to exploit such patterns to create richer and more accurate models of relational domains.

When applying statistical models to incomplete knowledge graphs, it should be noted that the distribution of facts in such KGs can be skewed. For instance, KGs that are derived from Wikipedia will inherit the skew that exists in the distribution of facts in Wikipedia itself.[8] Statistical models such as those discussed in the following sections can be affected by such biases in the input data and need to be interpreted accordingly.

C. Types of SRL Models

As we discussed, the presence or absence of certain triples in relational data is correlated with (i.e., predictive of) the presence or absence of certain other triples. In other words, the random variables $y_{ijk}$ are correlated with each other. We will discuss three main ways to model these correlations:

- M1) Assume all $y_{ijk}$ are conditionally independent given latent features associated with subject, object and relation type and additional parameters (latent feature models)

- M2) Assume all $y_{ijk}$ are conditionally independent given observed graph features and additional parameters (graph feature models)

- M3) Assume all $y_{ijk}$ have local interactions (Markov Random Fields)

In what follows we will mainly focus on M1 and M2 and their combination; M3 will be the topic of Section VIII.

The model classes M1 and M2 predict the existence of a triple $x_{ijk}$ via a score function $f\left(x_{ijk};\Theta\right)$ which represents the model’s confidence that a triple exists given the parameters $\Theta$. The conditional independence assumptions of M1 and M2 allow the probability model to be written as follows:

| [math]\displaystyle{ \displaystyle P\left(\mathbf{\underline{Y}}\vert \mathcal{D}, \Theta\right)=\prod_{i=1}^{N_e}\prod_{j=1}^{N_e}\prod_{k=1}^{N_r}\mathrm{Ber}\left(y_{ijk}\vert \sigma\left(f\left(x_{ijk}, \Theta \right)\right)\right) }[/math] | (1) |

where $\sigma(u) = 1/(1 +e^{-u})$ is the sigmoid (logistic) function, and

| [math]\displaystyle{ \mathrm{Ber}(y\vert p)= \begin{cases} p, & \text{if } y=1 \\ 1-p, & \text{if } y=0 \end{cases} }[/math] | (2) |

is the Bernoulli distribution. We will refer to models of the form Equation (1) as probabilistic models. In addition to probabilistic models, we will also discuss models which optimize $f(\cdot)$ under other criteria, for instance models which maximize the margin between existing and non-existing triples. We will refer to such models as score-based models. If desired, we can derive probabilities for score-based models via Platt scaling [61].
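Because of the conditional independence assumption, the logarithm of Equation (1) is a sum of per-triple Bernoulli log-likelihoods. A minimal sketch in plain Python, assuming the scores $f(x_{ijk};\Theta)$ are already available as numbers (how they are computed is the subject of the following sections) and using the identity $1-\sigma(u)=\sigma(-u)$ for numerical stability; the function names are hypothetical:

```python
import math


def log_sigmoid(u):
    """log(sigma(u)), computed without overflow for large |u|."""
    if u >= 0:
        return -math.log1p(math.exp(-u))
    return u - math.log1p(math.exp(u))


def sigmoid(u):
    """sigma(u) = 1 / (1 + exp(-u))."""
    return math.exp(log_sigmoid(u))


def log_likelihood(labels, scores):
    """Log of Equation (1), restricted to the triples in `labels`.

    labels: dict mapping triple -> y in {0, 1}
    scores: dict mapping triple -> real-valued score f(x; Theta)
    log Ber(y | sigma(f)) is log sigma(f) if y = 1 and log sigma(-f) if y = 0.
    """
    total = 0.0
    for triple, y in labels.items():
        f = scores[triple]
        total += log_sigmoid(f) if y == 1 else log_sigmoid(-f)
    return total


# Hypothetical placeholder triples and scores.
labels = {("e1", "r1", "e2"): 1, ("e1", "r1", "e3"): 0}
scores = {("e1", "r1", "e2"): 2.0, ("e1", "r1", "e3"): -1.0}
print(log_likelihood(labels, scores))
```

Maximizing this quantity over $\Theta$ pushes scores of existing triples up and scores of nonexisting ones down.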

There are many different methods for defining $f(\cdot)$. In Sections IV to VI and Section VIII, we will discuss different options for all model classes. In Section VII, we will furthermore discuss aspects of how to train these models on knowledge graphs.

IV. LATENT FEATURE MODELS

In this section, we assume that the variables $y_{ijk}$ are conditionally independent given a set of global latent features and parameters, as in Equation (1). We discuss various possible forms for the score function $f (x; \Theta)$ below. What all models have in common is that they explain triples via latent features of entities. (This is justified via various theoretical arguments [62].) For instance, a possible explanation for the fact that Alec Guinness received the Academy Award is that he is a good actor. This explanation uses latent features of entities (being a good actor) to explain observable facts (Guinness receiving the Academy Award). We call these features “latent” because they are not directly observed in the data. One task of all latent feature models is therefore to infer these features automatically from the data.

In the following, we will denote the latent feature representation of an entity $e_i$ by the vector $\mathbf{e}_i \in \mathbb{R}^{H_e}$, where $H_e$ denotes the number of latent features in the model. For instance, we could model that Alec Guinness is a good actor and that the Academy Award is a prestigious award via the vectors

[math]\displaystyle{ \mathbf{e}_{Guinness}=\begin{bmatrix}0.9\\0.2\end{bmatrix}, \quad \mathbf{e}_{Academy\;Award}=\begin{bmatrix}0.2\\0.8\end{bmatrix} }[/math]

where the component $e_{i1}$ corresponds to the latent feature Good Actor and $e_{i2}$ corresponds to Prestigious Award. (Note that, unlike this example, the latent features that are inferred by the following models are typically hard to interpret.)

The key intuition behind relational latent feature models is that the relationships between entities can be derived from interactions of their latent features. However, there are many possible ways to model these interactions, and many ways to derive the existence of a relationship from them. We discuss several possibilities below. See Table III for a summary of the notation.

| Symbol | Meaning | Size |
|---|---|---|
| Relational data | | |
| $N_e$ | Number of entities | |
| $N_r$ | Number of relations | |
| $N_d$ | Number of training examples | |
| $e_i$ | $i$-th entity in the dataset (e.g., LeonardNimoy) | |
| $r_k$ | $k$-th relation in the dataset (e.g., bornIn) | |
| $\mathcal{D}^{+}$ | Set of observed positive triples | |
| $\mathcal{D}^{-}$ | Set of observed negative triples | |
| Probabilistic Knowledge Graphs | | |
| $\mathbf{\underline{Y}}$ | (Partially observed) labels for all triples | $N_e \times N_e \times N_r$ |
| $\mathbf{\underline{F}}$ | Score for all possible triples | $N_e \times N_e \times N_r$ |
| $\mathbf{Y}_k$ | Slice of $\mathbf{\underline{Y}}$ for relation $r_k$ | $N_e \times N_e$ |
| $\mathbf{F}_k$ | Slice of $\mathbf{\underline{F}}$ for relation $r_k$ | $N_e \times N_e$ |
| Graph and Latent Feature Models | | |
| $\phi_{ijk}$ | Feature vector representation of triple $\left(e_i, r_k, e_j\right)$ | |
| $\mathbf{w}_k$ | Weight vector to derive scores for relation $k$ | |
| $\Theta$ | Set of all parameters of the model | |
| $\sigma(\cdot)$ | Sigmoid (logistic) function | |
| Latent Feature Models | | |
| $H_e$ | Number of latent features for entities | |
| $H_r$ | Number of latent features for relations | |
| $\mathbf{e}_i$ | Latent feature representation of entity $e_i$ | $H_e$ |
| $\mathbf{r}_k$ | Latent feature representation of relation $r_k$ | $H_r$ |
| $H_a$ | Size of $\mathbf{h}_a$ layer | |
| $H_b$ | Size of $\mathbf{h}_b$ layer | |
| $H_c$ | Size of $\mathbf{h}_c$ layer | |
| $\mathbf{E}$ | Entity embedding matrix | $N_e\times H_e$ |
| $\mathbf{W}_k$ | Bilinear weight matrix for relation $k$ | $H_e\times H_e$ |
| $\mathbf{A}_k$ | Linear feature map for pairs of entities for relation $r_k$ | $\left(2H_e\right)\times H_a$ |
| $\mathbf{C}$ | Linear feature map for triples | $\left(2H_e+H_r\right)\times H_c$ |

A. RESCAL: A Bilinear Model

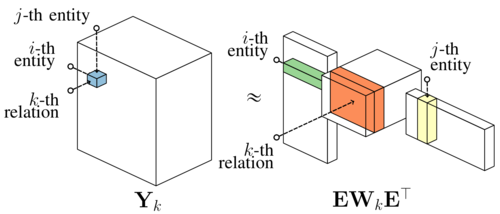

RESCAL [63, 64, 65] is a relational latent feature model which explains triples via pairwise interactions of latent features. In particular, we model the score of a triple $x_{ijk}$ as

| [math]\displaystyle{ f^{RESCAL}_{ijk}:=\mathbf{e}_i^{\top}\mathbf{W}_k\mathbf{e}_j =\displaystyle \sum_{a=1}^{H_e} \sum_{b=1}^{H_e} w_{abk}e_{ia}e_{jb} }[/math] | (3) |

where $\mathbf{W}_k \in \R^{H_e\times H_e}$ is a weight matrix whose entries $w_{abk}$ specify how much the latent features $a$ and $b$ interact in the $k$-th relation. We call this a bilinear model, since it captures the interactions between the two entity vectors using multiplicative terms. For instance, we could model the pattern that good actors are likely to receive prestigious awards via a weight matrix such as

[math]\displaystyle{ \mathbf{W}_{receiveAward}=\begin{bmatrix}0.1&0.9\\0.1&0.1\end{bmatrix}, \quad \mathbf{e}_{Academy\;Award}=\begin{bmatrix}0.2\\0.8\end{bmatrix} }[/math]
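To make Equation (3) concrete, here is a minimal Python sketch that evaluates the double sum for the $\mathbf{W}_{receiveAward}$ and $\mathbf{e}_{Academy\;Award}$ values given above. The two subject vectors, and the reading of latent feature 1 as "good actor" and feature 2 as "prestigious", are hypothetical assumptions added only for illustration.

```python
def rescal_score(e_i, W_k, e_j):
    """RESCAL score f_ijk = e_i^T W_k e_j, written as the explicit double sum of Eq. (3)."""
    return sum(
        W_k[a][b] * e_i[a] * e_j[b]
        for a in range(len(e_i))
        for b in range(len(e_j))
    )


# W_receiveAward and e_AcademyAward are the values from the text.
W_receive_award = [[0.1, 0.9],
                   [0.1, 0.1]]
e_academy_award = [0.2, 0.8]

# Hypothetical subjects; we *assume* feature 0 encodes "good actor".
e_good_actor = [0.9, 0.2]
e_other = [0.1, 0.1]

print(rescal_score(e_good_actor, W_receive_award, e_academy_award))  # about 0.686
print(rescal_score(e_other, W_receive_award, e_academy_award))       # about 0.084
```

The large entry $w_{12}=0.9$ couples a strong first subject feature with a strong second object feature, so the hypothetical good actor scores roughly eight times higher than the other subject.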

In general, we can model block structure patterns via the magnitude of entries in $\mathbf{W}_k$, while we can model homophily patterns via the magnitude of its diagonal entries. Anti-correlations in these patterns can be modeled via negative entries in $\mathbf{W}_k$.

Hence, in Equation (3) we compute the score of a triple $x_{ijk}$ via the weighted sum of all pairwise interactions between the latent features of the entities $e_i$ and $e_j$. The parameters of the model are $\Theta = \{\{\mathbf{e}_i\}_{i=1}^{N_e}, \{\mathbf{W}_k\}_{k=1}^{N_r}\}$. During training we jointly learn the latent representations of entities and how the latent features interact for particular relation types.

In the following, we will discuss further important properties of the model for learning from knowledge graphs.

Relational learning via shared representations: In Equation (3), entities have the same latent representation regardless of whether they occur as subjects or objects in a relationship. Furthermore, they have the same representation across all relation types. For instance, the $i$-th entity occurs in the triple $x_{ijk}$ as the subject of a relationship of type $k$, while it occurs in the triple $x_{piq}$ as the object of a relationship of type $q$. However, the predictions $f_{ijk} = \mathbf{e}_i^{\top} \mathbf{W}_k\mathbf{e}_j$ and $f_{piq} = \mathbf{e}_p^{\top} \mathbf{W}_q\mathbf{e}_i$ both use the same latent representation $\mathbf{e}_i$ of the $i$-th entity. Since all parameters are learned jointly, these shared representations allow information to propagate between triples via the latent representations of entities and the weights of relations. This allows the model to capture global dependencies in the data.

Semantic embeddings: The shared entity representations in RESCAL also capture the similarity of entities in the relational domain, i.e., that entities are similar if they are connected to similar entities via similar relations [65]. For instance, if the representations of $\mathbf{e}_i$ and $\mathbf{e}_p$ are similar, the predictions $f_{ijk}$ and $f_{pjk}$ will have similar values. In turn, entities with many similar observed relationships will have similar latent representations. This property can be exploited for entity resolution and has also enabled large-scale hierarchical clustering on relational data [63, 64]. Moreover, since relational similarity is expressed via the similarity of vectors, the latent representations $\mathbf{e}_i$ can act as proxies that give non-relational machine learning algorithms such as k-means or kernel methods access to the similarity of entities.

Connection to tensor factorization: RESCAL is similar to methods used in recommendation systems [66], and to traditional tensor factorization methods [67]. In matrix notation, Equation (3) can be written compactly as $\mathbf{F}_k = \mathbf{E}\mathbf{W}_k\mathbf{E}^{\top}$, where $\mathbf{F}_k \in \R^{N_e\times N_e}$ is the matrix holding all scores for the $k$-th relation and the $i$-th row of $\mathbf{E} \in \R^{N_e\times H_e}$ holds the latent representation $\mathbf{e}_i$. See Figure 4 for an illustration. In the following, we will use this tensor representation to derive a very efficient algorithm for parameter estimation.
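The matrix form can be checked against the per-triple formula with a small plain-Python sketch (toy values, no linear-algebra library assumed); `rescal_slice` is a hypothetical helper name, not part of any RESCAL package.

```python
def matmul(A, B):
    """Plain-Python product of two matrices stored as nested lists."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def rescal_slice(E, W_k):
    """All scores of relation k at once: F_k = E W_k E^T (an N_e x N_e matrix)."""
    return matmul(matmul(E, W_k), transpose(E))


# Toy sizes with arbitrary values: N_e = 3 entities, H_e = 2 latent features.
E = [[0.9, 0.2],
     [0.2, 0.8],
     [0.5, 0.5]]
W_k = [[0.1, 0.9],
       [0.1, 0.1]]
F_k = rescal_slice(E, W_k)

# Entry (i, j) of F_k equals the per-triple score e_i^T W_k e_j of Equation (3).
for i in range(3):
    for j in range(3):
        direct = sum(E[i][a] * W_k[a][b] * E[j][b]
                     for a in range(2) for b in range(2))
        assert abs(F_k[i][j] - direct) < 1e-12
```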


Fitting the model: If we want to compute a probabilistic model, the parameters of RESCAL can be estimated by minimizing the log-loss using gradient-based methods such as stochastic gradient descent [68]. RESCAL can also be computed as a score-based model, which has the main advantage that we can estimate the parameters $\Theta$ very efficiently: Due to its tensor structure and due to the sparsity of the data, it has been shown that the RESCAL model can be computed via a sequence of efficient closed-form updates when using the squared-loss [63, 64]. In this setting, it has been shown analytically that a single update of $\mathbf{E}$ and $\mathbf{W}_k$ scales linearly with the number of entities $N_e$, linearly with the number of relations $N_r$, and linearly with the number of observed triples, i.e., the number of non-zero entries in $\mathbf{\underline{Y}}$ [64]. We call this algorithm RESCAL-ALS[9]. In practice, a small number (say 30 to 50) of iterated updates is often sufficient for RESCAL-ALS to arrive at stable estimates of the parameters. Given a current estimate of $\mathbf{E}$, the updates for each $\mathbf{W}_k$ can be computed in parallel to improve the scalability on knowledge graphs with a large number of relations. Furthermore, by exploiting the special tensor structure of RESCAL, we can derive improved updates for RESCAL-ALS that compute the estimates for the parameters with a runtime complexity of $\mathcal{O}\left(H^3_e\right)$ for a single update (as opposed to a runtime complexity of $\mathcal{O}\left(H^5_e\right)$ for naive updates) [65, 69]. In summary, for relational domains that can be explained via a moderate number of latent features, RESCAL-ALS is highly scalable and very fast to compute. For more detail on RESCAL-ALS see also Equation (26) in Section VII.
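The closed-form idea can be illustrated for the $\mathbf{W}_k$ half of one alternating step. This Python sketch is not RESCAL-ALS as published: it assumes a plain, unregularized squared loss and a dense $\mathbf{Y}_k$, it skips the $\mathbf{E}$ update, and it does not use the sparsity or the $\mathcal{O}(H_e^3)$ trick described above. It only shows the least-squares solution for $\mathbf{W}_k$ given a fixed $\mathbf{E}$, which is independent for each $k$ and can therefore run in parallel.

```python
def matmul(A, B):
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def inverse(A):
    """Gauss-Jordan inversion with partial pivoting (fine for small H_e x H_e matrices)."""
    n = len(A)
    M = [[float(v) for v in row] + [1.0 if r == c else 0.0 for c in range(n)]
         for r, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                factor = M[r][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def update_W(E, Y_k):
    """Least-squares W_k for fixed E, no regularization (E must have full column rank):
    argmin_W ||Y_k - E W E^T||_F^2 = (E^T E)^{-1} E^T Y_k E (E^T E)^{-1}."""
    Et = transpose(E)
    G_inv = inverse(matmul(Et, E))                 # (E^T E)^{-1}, H_e x H_e
    return matmul(matmul(G_inv, matmul(matmul(Et, Y_k), E)), G_inv)


# If Y_k is exactly E W_true E^T, the update recovers W_true.
E = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_true = [[0.1, 0.9], [0.1, 0.1]]
Y_k = matmul(matmul(E, W_true), transpose(E))
W_est = update_W(E, Y_k)
```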

Decoupled Prediction: In Equation (3), the probability of a single relationship is computed via simple matrix-vector products in $\mathcal{O}\left(H^2_e\right)$ time. Hence, once the parameters have been estimated, the computational complexity to predict the score of a triple depends only on the number of latent features and is independent of the size of the graph. However, during parameter estimation, the model can capture global dependencies due to the shared latent representations.

Relational learning results: RESCAL has been shown to achieve state-of-the-art results on a number of relational learning tasks. For instance, [63] showed that RESCAL provides comparable or better relationship prediction results on a number of small benchmark datasets compared to Markov Logic Networks (with structure learning) [70], the Infinite (Hidden) Relational model [71, 72], and Bayesian Clustered Tensor Factorization [73]. Moreover, RESCAL has been used for link prediction on entire knowledge graphs such as YAGO and DBpedia [64, 74]. Aside from link prediction, RESCAL has also successfully been applied to SRL tasks such as entity resolution and link-based clustering. For instance, RESCAL has shown state-of-the-art results in predicting which authors, publications, or publication venues are likely to be identical in publication databases [63, 65]. Furthermore, the semantic embedding of entities computed by RESCAL has been exploited to create taxonomies for uncategorized data via hierarchical clusterings of entities in the embedding space [75].

B. Other Tensor Factorization Models

Various other tensor factorization methods have been explored for learning from knowledge graphs and multi-relational data. [76, 77] factorized adjacency tensors using the CP tensor decomposition to analyze the link structure of Web pages and Semantic Web data respectively. [78] applied pairwise interaction tensor factorization [79] to predict triples in knowledge graphs. [80] applied factorization machines to large uni-relational datasets in recommendation settings. [81] proposed a tensor factorization model for knowledge graphs with a very large number of different relations.

It is also possible to use discrete latent factors. [82] proposed Boolean tensor factorization to disambiguate facts extracted with OpenIE methods and applied it to large datasets [83]. In contrast to previously discussed factorizations, Boolean tensor factorizations are discrete models, where adjacency tensors are decomposed into binary factors based on Boolean algebra.
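To illustrate what decomposing into binary factors with Boolean algebra means, the following Python sketch reconstructs a binary tensor from Boolean CP-style factors, where the sum over factors becomes a logical OR and products become ANDs. This is only one Boolean decomposition; the method of [82] may use a different one (for example a Tucker-style core), and the factor values are made up.

```python
from itertools import product


def boolean_cp_reconstruct(A, B, C):
    """Boolean rank-R CP reconstruction of a binary adjacency tensor:
    y_ijk = OR over r of (A[i][r] AND B[j][r] AND C[k][r]), with 0/1 entries.
    A: subjects x R, B: objects x R, C: relations x R.
    Returns the set of (i, j, k) whose reconstructed value is 1."""
    R = len(A[0])
    return {
        (i, j, k)
        for i, j, k in product(range(len(A)), range(len(B)), range(len(C)))
        if any(A[i][r] and B[j][r] and C[k][r] for r in range(R))
    }


# Factor 0 links subjects {0, 1} to object 0 via relation 0;
# factor 1 links subject 2 to object 1 via relation 1.
A = [[1, 0], [1, 0], [0, 1]]
B = [[1, 0], [0, 1], [0, 0]]
C = [[1, 0], [0, 1]]
print(sorted(boolean_cp_reconstruct(A, B, C)))  # [(0, 0, 0), (1, 0, 0), (2, 1, 1)]
```

Because factors are combined with OR, an entry covered by several factors is still just 1, unlike a real-valued CP sum.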

C. Matrix Factorization Methods

Another approach for learning from knowledge graphs is based on matrix factorization, where, prior to the factorization, the adjacency tensor $\mathbf{\underline{Y}} \in \R^{N_e\times N_e\times N_r}$ is reshaped into a matrix $\mathbf{Y} \in \R^{N_e^2\times N_r}$ by associating rows with subject-object pairs $\left(e_i,e_j\right)$ and columns with relations $r_k$ (cf. [84, 85]), or into a matrix $\mathbf{Y} \in \R^{N_e\times N_eN_r}$ by associating rows with subjects $e_i$ and columns with relation/object pairs $(r_k, e_j)$ (cf. [86, 87]). Unfortunately, both of these formulations lose information compared to tensor factorization. For instance, if each subject-object pair is modeled via a different latent representation, the information that the relationships $y_{ijk}$ and $y_{pjq}$ share the same object is lost. It also leads to an increased memory complexity, since a separate latent representation is computed for each pair of entities, requiring $\mathcal{O}\left(N_e^2 H_e + N_r H_e\right)$ parameters (compared to $\mathcal{O}\left(N_eH_e + N_r H_e^2\right)$ parameters for RESCAL).
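To see the size of the memory gap, the following sketch plugs hypothetical sizes into the two parameter counts above, treating the $\mathcal{O}(\cdot)$ terms as exact counts (constant factors dropped).

```python
def params_pair_mf(N_e, N_r, H_e):
    """One latent vector per subject-object pair plus one per relation."""
    return N_e ** 2 * H_e + N_r * H_e


def params_rescal(N_e, N_r, H_e):
    """One latent vector per entity plus one H_e x H_e matrix per relation."""
    return N_e * H_e + N_r * H_e ** 2


# Hypothetical sizes, chosen only for illustration.
N_e, N_r, H_e = 10_000, 100, 50
print(params_pair_mf(N_e, N_r, H_e))   # 5000005000
print(params_rescal(N_e, N_r, H_e))    # 750000
```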

D. Multi-Layer Perceptrons

We can interpret RESCAL as creating composite representations of triples and predicting their existence from this representation. In particular, we can rewrite RESCAL as

| [math]\displaystyle{ f^{RESCAL}_{ijk}:=\mathbf{w}^{\top}_k\phi_{ij}^{RESCAL} }[/math] | (4) |

| [math]\displaystyle{ \phi^{RESCAL}_{ij}:=\mathbf{e}_j\otimes \mathbf{e}_i }[/math] | (5) |

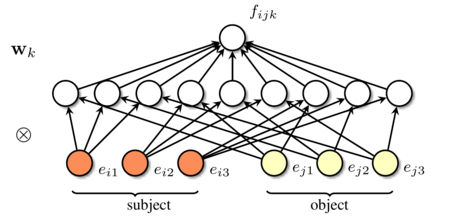

where $\mathbf{w}_k = \mathrm{vec}\left(\mathbf{W}_k\right)$. Equation (4) follows from Equation (3) via the equality $\mathrm{vec}\left(\mathbf{AXB}\right) = \left(\mathbf{B}^{\top} \otimes \mathbf{A}\right) \mathrm{vec}(\mathbf{X})$. Hence, RESCAL represents pairs of entities $\left(e_i,e_j\right)$ via the tensor product of their latent feature representations (Equation (5)) and predicts the existence of the triple $x_{ijk}$ from $\phi_{ij}$ via $\mathbf{w}_k$ (Equation (4)). See also Figure 5a. For a further discussion of the tensor product to create composite latent representations, please see [88, 89, 90].
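The identity behind Equation (4) can be checked numerically. This sketch assumes column-major $\mathrm{vec}$ (stacking columns), the convention under which $\mathrm{vec}(\mathbf{AXB}) = (\mathbf{B}^{\top}\otimes\mathbf{A})\,\mathrm{vec}(\mathbf{X})$ holds; the vectors reuse the toy values of the receiveAward example.

```python
def vec(W):
    """Column-major vectorization: stack the columns of W."""
    return [W[r][c] for c in range(len(W[0])) for r in range(len(W))]


def kron(u, v):
    """Kronecker (tensor) product of two vectors: [u_0 * v, u_1 * v, ...]."""
    return [a * b for a in u for b in v]


e_i = [0.9, 0.2]
e_j = [0.2, 0.8]
W_k = [[0.1, 0.9],
       [0.1, 0.1]]

# Equation (3): bilinear form e_i^T W_k e_j.
bilinear = sum(e_i[a] * W_k[a][b] * e_j[b] for a in range(2) for b in range(2))
# Equations (4)-(5): linear model w_k^T phi_ij with phi_ij = e_j (x) e_i.
phi_ij = kron(e_j, e_i)
linear = sum(w * p for w, p in zip(vec(W_k), phi_ij))
print(bilinear, linear)   # both approximately 0.686
```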


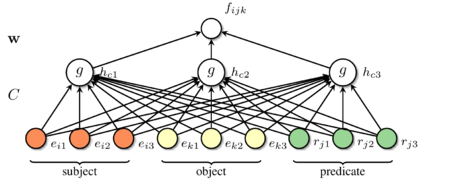

(a) RESCAL; (b) ER-MLP

Since the tensor product explicitly models all pairwise interactions, RESCAL can require a lot of parameters when the number of latent features is large (each matrix $\mathbf{W}_k$ has $H_e^2$ entries). This can, for instance, lead to scalability problems on knowledge graphs with a large number of relations.

In the following we will discuss models based on multilayer perceptrons (MLPs), also known as feedforward neural networks. In the context of multidimensional data they can be referred to as multiway neural networks. This approach allows us to consider alternative ways to create composite triple representations and to use nonlinear functions to predict their existence.

In particular, let us define the following E-MLP model (E for entity):

| [math]\displaystyle{ f^{E-MLP}_{ijk}:=\mathbf{w}^{\top}_k\mathbf{g}\left(\mathbf{h}^a_{ijk}\right) }[/math] | (6) |

| [math]\displaystyle{ \mathbf{h}^a_{ijk}:=\mathbf{A}_k^{\top} \phi_{ij}^{E-MLP} }[/math] | (7) |

| [math]\displaystyle{ \phi_{ij}^{E-MLP}:=\big[ \mathbf{e}_i; \mathbf{e}_j\big] }[/math] | (8) |

where $\mathbf{g}(\mathbf{u}) = \big[g(u_1),g(u_2),\ldots\big]$ is the function $g$ applied element-wise to vector $\mathbf{u}$; one often uses the nonlinear function $g(u) = \tanh(u)$.

Here $\mathbf{h}_a$ is an additive hidden layer, which is derived by adding together differently weighted components of the entity representations. In particular, we create a composite representation $\phi^{E-MLP}_{ij}=\big[ \mathbf{e}_i;\mathbf{e}_j\big] \in \R^{2H_e}$ via the concatenation of $\mathbf{e}_i$ and $\mathbf{e}_j$. However, concatenation alone does not consider any interactions between the latent features of $e_i$ and $e_j$. For this reason, we add a (vector-valued) hidden layer $\mathbf{h}_a$ of size $H_a$, from which the final prediction is derived via $\mathbf{w}_k^{\top} \mathbf{g}(\mathbf{h}_a)$. The important difference to tensor-product models like RESCAL is that we learn the interactions of latent features via the matrix $\mathbf{A}_k$ (Equation (7)), while the tensor product always considers all possible interactions between latent features. This adaptive approach can reduce the number of required parameters significantly, especially on datasets with a large number of relations.
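A minimal forward pass for Equations (6)-(8), assuming $g = \tanh$ and small hand-picked weights (all values hypothetical); it also counts the E-MLP's per-relation parameters $H_a + H_a \times 2H_e$.

```python
import math


def e_mlp_score(e_i, e_j, A_k, w_k):
    """E-MLP score f_ijk = w_k^T tanh(A_k^T [e_i; e_j]).
    A_k is a (2*H_e) x H_a matrix (nested lists), w_k has length H_a."""
    phi = list(e_i) + list(e_j)                       # concatenation [e_i; e_j]
    H_a = len(w_k)
    h_a = [sum(A_k[r][c] * phi[r] for r in range(len(phi)))   # (A_k^T phi)_c
           for c in range(H_a)]
    return sum(w * math.tanh(h) for w, h in zip(w_k, h_a))


def e_mlp_params_per_relation(H_e, H_a):
    """w_k (H_a entries) plus A_k (2*H_e x H_a entries)."""
    return H_a + H_a * 2 * H_e


# Toy example with H_e = 2 and H_a = 3 (arbitrary values).
e_i, e_j = [0.9, 0.2], [0.2, 0.8]
A_k = [[0.5, -0.3, 0.0],
       [0.1, 0.2, 0.4],
       [-0.2, 0.6, 0.1],
       [0.3, 0.0, -0.5]]
w_k = [1.0, 0.5, -0.7]
print(e_mlp_score(e_i, e_j, A_k, w_k))
print(e_mlp_params_per_relation(H_e=2, H_a=3))   # 15
```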

One disadvantage of the E-MLP is that it has to define a vector $\mathbf{w}_k$ and a matrix $\mathbf{A}_k$ for every possible relation, which requires $H_a + (H_a \times 2H_e)$ parameters per relation. An alternative is to embed the relation itself, using an $H_r$-dimensional vector $\mathbf{r}_k$. We can then define

| [math]\displaystyle{ f^{ER-MLP}_{ijk}:=\mathbf{w}^{\top}\mathbf{g}\left(\mathbf{h}^c_{ijk}\right) }[/math] | (9) |

| [math]\displaystyle{ \mathbf{h}^c_{ijk}:=\mathbf{C}^{\top} \phi_{ijk}^{ER-MLP} }[/math] | (10) |

| [math]\displaystyle{ \phi_{ijk}^{ER-MLP}:=\big[ \mathbf{e}_i; \mathbf{e}_j;\mathbf{r}_k\big] }[/math] | (11) |

We call this model the ER-MLP, since it applies an MLP to an embedding of the entities and relations. Please note that ER-MLP uses a global weight vector for all relations. This model was used in the KV project (see Section IX), since it has many fewer parameters than the E-MLP (see Table V); the reason is that $\mathbf{C}$ is independent of the relation $k$.
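The following sketch implements the ER-MLP forward pass of Equations (9)-(11) with $g=\tanh$, and compares the E-MLP and ER-MLP parameter counts from Table V for hypothetical sizes (the 60-dimensional embeddings echo the Freebase model mentioned below; the other sizes are made up).

```python
import math


def er_mlp_score(e_i, e_j, r_k, C, w):
    """ER-MLP score f_ijk = w^T tanh(C^T [e_i; e_j; r_k]).
    C ((2*H_e + H_r) x H_c) and w (length H_c) are shared by all relations."""
    phi = list(e_i) + list(e_j) + list(r_k)
    h_c = [sum(C[r][c] * phi[r] for r in range(len(phi))) for c in range(len(w))]
    return sum(wc * math.tanh(h) for wc, h in zip(w, h_c))


def n_params_e_mlp(N_e, N_r, H_e, H_a):
    """Table V: N_r (H_a + H_a * 2 H_e) + N_e H_e."""
    return N_r * (H_a + H_a * 2 * H_e) + N_e * H_e


def n_params_er_mlp(N_e, N_r, H_e, H_r, H_c):
    """Table V: H_c + H_c (2 H_e + H_r) + N_r H_r + N_e H_e."""
    return H_c + H_c * (2 * H_e + H_r) + N_r * H_r + N_e * H_e


# Hypothetical sizes: 10k entities, 1k relations, 60-dim embeddings, 100 hidden units.
print(n_params_e_mlp(N_e=10_000, N_r=1_000, H_e=60, H_a=100))            # 12700000
print(n_params_er_mlp(N_e=10_000, N_r=1_000, H_e=60, H_r=60, H_c=100))   # 678100
```

Most of the E-MLP's count comes from the per-relation $\mathbf{A}_k$, which the shared $\mathbf{C}$ replaces.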

It has been shown in […] that MLPs can learn to put “semantically similar” words close together in the embedding space, even if they are not explicitly trained to do so. In [28], the authors show a similar result for the semantic embedding of relations using ER-MLP. For example, Table IV shows the nearest neighbors of latent representations of selected relations that have been computed with a 60-dimensional model on Freebase. Numbers in parentheses represent squared Euclidean distances. It can be seen that ER-MLP puts semantically related relations near each other. For instance, the closest relations to the children relation are parents, spouse, and birthplace.

| Relation | Nearest Neighbors | | |
|---|---|---|---|
| children | parents (0.4) | spouse (0.5) | birth-place (0.8) |
| birth-date | children (1.24) | gender (1.25) | parents (1.29) |
| edu-end[10] | job-start (1.41) | edu-start (1.61) | job-end (1.74) |

E. Neural Tensor Networks

We can combine traditional MLPs with bilinear models, resulting in what [92] calls a “neural tensor network” (NTN). More precisely, we can define the NTN model as follows:

| [math]\displaystyle{ f^{NTN}_{ijk}:=\mathbf{w}^{\top}_k\mathbf{g}\left(\big[\mathbf{h}^a_{ijk};\mathbf{h}^b_{ijk}\big]\right) }[/math] | (12) |

| [math]\displaystyle{ \mathbf{h}^a_{ijk}:=\mathbf{A}_k^{\top}\big[\mathbf{e}_i; \mathbf{e}_j\big] }[/math] | (13) |

| [math]\displaystyle{ \mathbf{h}^b_{ijk}:=\big[\mathbf{e}_i^{\top}\mathbf{B}_k^{1}\mathbf{e}_j, \ldots, \mathbf{e}_i^{\top}\mathbf{B}_k^{H_b}\mathbf{e}_j\big] }[/math] | (14) |

Here $\mathbf{\underline{B}}_k$ is a tensor, where the $\ell$-th slice $\mathbf{B}_k^{\ell}$ has size $H_e \times H_e$, and there are $H_b$ slices. We call $\mathbf{h}_{ijk}^b$ a bilinear hidden layer, since it is derived from a weighted combination of multiplicative terms.
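A minimal NTN forward pass following Equations (12)-(14) as written here (concatenated additive and bilinear layers, $g=\tanh$, no bias terms); the original NTN of [92] may include further terms. Zeroing the additive part and using a single slice equal to the toy $\mathbf{W}_k$ shows how the bilinear layer contains the RESCAL score. All weights are hypothetical.

```python
import math


def bilinear(x, M, y):
    """x^T M y for nested-list matrices."""
    return sum(x[a] * M[a][b] * y[b] for a in range(len(x)) for b in range(len(y)))


def ntn_score(e_i, e_j, A_k, B_k, w_k):
    """f_ijk = w_k^T tanh([h^a; h^b]) with
    h^a = A_k^T [e_i; e_j]                  (additive layer, length H_a)
    h^b = [e_i^T B_k^l e_j for each slice]   (bilinear layer, length H_b)."""
    phi = list(e_i) + list(e_j)
    H_a = len(A_k[0])
    h_a = [sum(A_k[r][c] * phi[r] for r in range(len(phi))) for c in range(H_a)]
    h_b = [bilinear(e_i, B_l, e_j) for B_l in B_k]
    hidden = h_a + h_b                         # concatenation [h^a; h^b]
    return sum(w * math.tanh(h) for w, h in zip(w_k, hidden))


e_i, e_j = [0.9, 0.2], [0.2, 0.8]
W_k = [[0.1, 0.9], [0.1, 0.1]]
A_k = [[0.0], [0.0], [0.0], [0.0]]             # (2*H_e) x H_a with H_a = 1
print(ntn_score(e_i, e_j, A_k, [W_k], [0.0, 1.0]))   # approximately tanh(0.686)
```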

NTN is a generalization of the RESCAL approach, as we explain in Section XII-A. Also, it uses the additive layer from the E-MLP model. However, it has many more parameters than the E-MLP or RESCAL models. Indeed, the results in [95] and [28] both show that it tends to overfit, at least on the (relatively small) datasets used in those papers.

F. Latent Distance Models

Another class of models are latent distance models (also known as latent space models in social network analysis), which derive the probability of relationships from the distance between latent representations of entities: entities are likely to be in a relationship if their latent representations are close according to some distance measure. For uni-relational data, [96] first proposed this approach in the context of social networks by modeling the probability of a relationship $x_{ij}$ via the score function $f(e_i,e_j) = -d(\mathbf{e}_i,\mathbf{e}_j)$, where $d(\cdot,\cdot)$ refers to an arbitrary distance measure such as the Euclidean distance.

The structured embedding (SE) model [93] extends this idea to multi-relational data by modeling the score of a triple $x_{ijk}$ as:

| [math]\displaystyle{ f^{SE}_{ijk}:= -\parallel\mathbf{A}_k^{s}\mathbf{e}_i -\mathbf{A}_k^{o}\mathbf{e}_j\parallel_1=-\parallel\mathbf{h}^a_{ijk}\parallel_1 }[/math] | (15) |

where $\mathbf{A}_k = \big[\mathbf{A}_k^{s}; -\mathbf{A}_k^{o}\big]$. In Equation (15) the matrices $\mathbf{A}_k^{s}$ and $\mathbf{A}_k^{o}$ transform the global latent feature representations of entities to model relationships specifically for the $k$-th relation. The transformations are learned using a ranking loss such that pairs of entities in existing relationships are closer to each other than entities in non-existing relationships.
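The SE score of Equation (15) takes only a few lines of Python. The two relation-specific maps below are hypothetical and chosen so that one entity pair is mapped onto exactly the same point; the ranking-loss training is not shown.

```python
def matvec(M, x):
    """M x for a matrix stored as nested lists."""
    return [sum(M[r][c] * x[c] for c in range(len(x))) for r in range(len(M))]


def se_score(e_i, e_j, A_s, A_o):
    """Structured Embedding score f_ijk = -|| A_k^s e_i - A_k^o e_j ||_1."""
    return -sum(abs(a - b) for a, b in zip(matvec(A_s, e_i), matvec(A_o, e_j)))


# Hypothetical 2x2 maps for a single relation k.
A_s = [[1.0, 0.0], [0.0, 1.0]]
A_o = [[0.0, 1.0], [1.0, 0.0]]       # swaps the object's two features
print(se_score([0.2, 0.7], [0.7, 0.2], A_s, A_o))   # zero distance: highest possible score
print(se_score([0.2, 0.7], [0.2, 0.7], A_s, A_o))   # about -1.0
```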

To reduce the number of parameters over the SE model, the TransE model [94] translates the latent feature representations via a relation-specific offset instead of transforming them via matrix multiplications. In particular, the score of a triple $x_{ijk}$ is defined as:

| [math]\displaystyle{ f^{TransE}_{ijk}:= -d \left(\mathbf{e}_i+\mathbf{r}_k, \mathbf{e}_j\right) }[/math] | (16) |

This model is inspired by the results in [91], which showed that some relationships between words could be computed by their vector difference in the embedding space. As noted in [95], under unit-norm constraints on $\mathbf{e}_i$ and $\mathbf{e}_j$ and using the squared Euclidean distance, we can rewrite Equation (16) as follows (dropping the constant $\parallel\mathbf{e}_i\parallel_2^2 + \parallel\mathbf{e}_j\parallel_2^2 = 2$, which does not affect the ranking of triples):

| [math]\displaystyle{ f^{TransE}_{ijk}:= - \left(2\mathbf{r}_k^{\top} \left(\mathbf{e}_i - \mathbf{e}_j\right)- 2\mathbf{e}_i^{\top}\mathbf{e}_j + \parallel \mathbf{r}_k\parallel_2^2 \right) }[/math] | (17) |

Furthermore, if we assume $\mathbf{A}_k=[\mathbf{r}_k; - \mathbf{r}_k]$, so that $h^a_{ijk}=[\mathbf{r}_k; - \mathbf{r}_k]^{\top}[\mathbf{e}_i ; \mathbf{e}_j]=\mathbf{r}_k^{\top}\left(\mathbf{e}_i - \mathbf{e}_j\right)$, and $\mathbf{\underline{B}}_k = \mathbf{I}$, so that $h^b_{ijk}=\mathbf{e}_i^{\top}\mathbf{e}_j$, then we can rewrite this model as follows:

| [math]\displaystyle{ f^{TransE}_{ijk}:= - \left(2h_{ijk}^a - 2 h_{ijk}^b + \parallel\mathbf{r}_k\parallel_2^2\right) }[/math] | (18) |
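The rewrite from Equation (16) to Equation (18) can be checked numerically: for unit-norm entity vectors, $-\parallel\mathbf{e}_i+\mathbf{r}_k-\mathbf{e}_j\parallel_2^2$ equals the Equation (18) score minus the constant 2. All vectors in this sketch are arbitrary.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def transe_score(e_i, r_k, e_j):
    """Equation (16) with squared Euclidean distance: -||e_i + r_k - e_j||_2^2."""
    return -sum((a + r - b) ** 2 for a, r, b in zip(e_i, r_k, e_j))


def transe_via_layers(e_i, r_k, e_j):
    """Equation (18): -(2 h^a - 2 h^b + ||r_k||^2) with
    h^a = r_k^T (e_i - e_j) and h^b = e_i^T e_j."""
    h_a = dot(r_k, [a - b for a, b in zip(e_i, e_j)])
    h_b = dot(e_i, e_j)
    return -(2 * h_a - 2 * h_b + dot(r_k, r_k))


# Arbitrary unit-norm entity vectors and an arbitrary relation offset.
e_i = normalize([0.3, 0.4, 0.5])
e_j = normalize([0.1, -0.2, 0.7])
r_k = [0.05, 0.1, -0.2]

# The two forms differ only by the dropped constant ||e_i||^2 + ||e_j||^2 = 2.
print(transe_score(e_i, r_k, e_j), transe_via_layers(e_i, r_k, e_j) - 2)
```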

G. Comparison of Models

Table V summarizes the different models we have discussed. A natural question is: which model is best? [28] showed that the ER-MLP model outperformed the NTN model on their particular dataset. [95] performed a more extensive experimental comparison of these models, and found that RESCAL (called the bilinear model) worked best on two link prediction tasks. However, the best model will clearly be dataset dependent.

| Method | $f_{ijk}$ | $\mathbf{A}_k$ | $\mathbf{C}$ | $\mathbf{\underline{B}}_k$ | Num. Parameters |
|---|---|---|---|---|---|
| RESCAL [64] | $\mathbf{w}_k^{\top} \mathbf{h}^b_{ijk}$ | - | - | $[\delta_{1,1},\cdots, \delta_{H_e,H_e}]$ | $N_rH^2_e+ N_eH_e$ |
| E-MLP [92] | $\mathbf{w}_k^{\top}\mathbf{g} \left(\mathbf{h}^a_{ijk}\right)$ | $\big[\mathbf{A}^s_k ; \mathbf{A}^o_k \big]$ | - | - | $N_r\left(H_a + H_a \times 2H_e\right)+N_eH_e$ |
| ER-MLP [28] | $\mathbf{w}^{\top}\mathbf{g} \left(\mathbf{h}^c_{ijk}\right)$ | - | $\mathbf{C}$ | - | $H_c + H_c \times \left(2H_e + H_r\right) + N_rH_r + N_eH_e$ |
| NTN [92] | $\mathbf{w}_k^{\top}\mathbf{g} \left(\big[\mathbf{h}^a_{ijk}; \mathbf{h}^b_{ijk}\big]\right)$ | $\big[\mathbf{A}^s_k ; \mathbf{A}^o_k \big]$ | - | $\big[\mathbf{B}^1_k ,\cdots,\mathbf{B}^{H_b}_k \big]$ | $N_rH_e^2H_b + N_r\left(H_b + H_a\right) + 2N_rH_eH_a + N_eH_e$ |
| Structured Embeddings [93] | $-\parallel\mathbf{h}^a_{ijk}\parallel_1$ | $\big[\mathbf{A}^s_k ;- \mathbf{A}^o_k \big]$ | - | - | $2N_rH_eH_a + N_eH_e$ |
| TransE [94] | $-\left(2h^a_{ijk}-2h^b_{ijk}+\parallel\mathbf{r}_k\parallel_2^2\right)$ | $\big[\mathbf{r}_k;-\mathbf{r}_k\big]$ | - | $\mathbf{I}$ | $N_rH_e + N_eH_e$ |

V. GRAPH FEATURE MODELS

In this section, we assume that the existence of an edge can be predicted by extracting features from the observed edges in the graph. For example, due to social conventions, parents of a person are often married, so we could predict the triple $(John,\; marriedTo,\;Mary)$ from the existence of the path $John \xrightarrow{ParentOf}Anne \xleftarrow{ParentOf}Mary$, representing a common child. In contrast to latent feature models, this kind of reasoning explains triples directly from the observed triples in the knowledge graph. We will now discuss some models of this kind.

A. Similarity Measures For Uni-Relational Data

Observable graph feature models are widely used for link prediction in graphs that consist only of a single relation, e.g., social network analysis (friendships between people), biology (interactions of proteins), and Web mining (hyperlinks between Web sites). The intuition behind these methods is that similar entities are likely to be related (homophily) and that the similarity of entities can be derived from the neighborhood of nodes or from the existence of paths between nodes. For this purpose, various indices have been proposed to measure the similarity of entities, which can be classified into local, global, and quasi-local approaches [97].

Local similarity indices such as Common Neighbors, the Adamic-Adar index [98] or Preferential Attachment [99] derive the similarity of entities from their number of common neighbors or their absolute number of neighbors. Local similarity indices are fast to compute for single relationships and scale well to large knowledge graphs as their computation depends only on the direct neighborhood of the involved entities. However, they can be too localized to capture important patterns in relational data and cannot model long-range or global dependencies.
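A sketch of the three local indices on a small hypothetical undirected graph, using their usual definitions: Common Neighbors counts shared neighbors, Adamic-Adar weights each shared neighbor $z$ by $1/\log|\mathcal{N}(z)|$, and Preferential Attachment multiplies the two degrees. Each score touches only the direct neighborhoods of the two entities.

```python
import math


def neighbors(edges):
    """Undirected adjacency sets built from (u, v) edge pairs."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def common_neighbors(adj, x, y):
    return len(adj[x] & adj[y])


def adamic_adar(adj, x, y):
    """Each shared neighbor z contributes 1 / log(degree(z)); assumes x != y,
    so every shared neighbor has degree >= 2."""
    return sum(1.0 / math.log(len(adj[z])) for z in adj[x] & adj[y])


def preferential_attachment(adj, x, y):
    return len(adj[x]) * len(adj[y])


# Hypothetical friendship graph; score the unobserved pair (ann, dave).
adj = neighbors([("ann", "bob"), ("ann", "carl"), ("bob", "dave"),
                 ("carl", "dave"), ("dave", "eve")])
print(common_neighbors(adj, "ann", "dave"))          # 2
print(adamic_adar(adj, "ann", "dave"))               # 2 / ln(2), about 2.885
print(preferential_attachment(adj, "ann", "dave"))   # 6
```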

Global similarity indices such as the Katz index [100] and the Leicht-Holme-Newman index [101] derive the similarity of entities from the ensemble of all paths between entities, while indices like Hitting Time, Commute Time, and PageRank [102] derive the similarity of entities from random walks on the graph. Global similarity indices often provide significantly better predictions than local indices, but are also computationally more expensive [97, 56].

Quasi-local similarity indices like the Local Katz index [56] or Local Random Walks [103] try to balance predictive accuracy and computational complexity by deriving the similarity of entities from paths and random walks of bounded length.
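One simple way to bound the Katz index is to truncate its walk sum $\sum_{\ell}\beta^{\ell}\,(\mathbf{A}^{\ell})_{xy}$ at a maximum length. The sketch below does this by counting walks level by level; it is a generic truncated Katz score under that assumption, not necessarily the exact Local Katz definition of [56], and $\beta$ and the graph are hypothetical.

```python
def neighbors(edges):
    """Undirected adjacency sets built from (u, v) edge pairs."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def truncated_katz(adj, x, y, beta=0.1, max_len=3):
    """sum_{l=1..max_len} beta^l * (number of length-l walks from x to y).
    The full Katz index sums over all lengths; stopping at max_len keeps the
    computation inside the max_len-hop neighborhood of x."""
    walks = {x: 1}        # walks[v] = number of length-l walks from x ending at v
    score = 0.0
    for length in range(1, max_len + 1):
        nxt = {}
        for v, count in walks.items():
            for w in adj.get(v, ()):
                nxt[w] = nxt.get(w, 0) + count
        walks = nxt
        score += beta ** length * walks.get(y, 0)
    return score


adj = neighbors([("ann", "bob"), ("ann", "carl"), ("bob", "dave"),
                 ("carl", "dave"), ("dave", "eve")])
print(truncated_katz(adj, "ann", "dave", beta=0.1, max_len=2))  # two length-2 walks
print(truncated_katz(adj, "ann", "dave", beta=0.1, max_len=4))  # adds ten length-4 walks
```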

In Section V-C, we will discuss an approach that extends this idea of quasi-local similarity indices for uni-relational networks to learn from large multi-relational knowledge graphs.

B. Rule Mining and Inductive Logic Programming

Another class of models that works on the observed variables of a knowledge graph extracts rules via mining methods and uses these extracted rules to infer new links. The extracted rules can also be used as a basis for Markov Logic as discussed in Section VIII. For instance, ALEPH is an Inductive Logic Programming (ILP) system that attempts to learn rules from relational data via inverse entailment [104] (for more information on ILP, see e.g., [105, 3, 106]). AMIE is a rule mining system that extracts logical rules (in particular Horn clauses) based on their support in a knowledge graph [107, 108]. In contrast to ALEPH, AMIE can handle the open-world assumption of knowledge graphs and has been shown to be up to three orders of magnitude faster on large knowledge graphs [108]. The basis for the Semantic Web is Description Logic, and [109, 110, 111] describe logic-oriented machine learning approaches in this context. Data mining approaches for knowledge graphs are described in [112, 113, 114]. An advantage of rule-based systems is that they are easily interpretable, as the model is given as a set of logical rules. However, rules over observed variables usually cover only a subset of the patterns in knowledge graphs (or relational data), and useful rules can be challenging to learn.

C. Path Ranking Algorithm

The Path Ranking Algorithm (PRA) [115, 116] extends the idea of using random walks of bounded lengths for predicting links in multi-relational knowledge graphs. In particular, let $\pi_L(i,j,k,t)$ denote a path of length $L$ of the form $e_i \xrightarrow{r_1} e_2\xrightarrow{r_2} e_3\cdots\xrightarrow{r_L}e_j$, where $t$ represents the sequence of edge types $t = (r_1,r_2,\cdots,r_L)$. We also require there to be a direct arc $e_i \xrightarrow{r_k}e_j$, representing the existence of a relationship of type $k$ from $e_i$ to $e_j$. Let $\Pi_L (i, j,k)$ represent the set of all such paths of length $L$, ranging over path types $t$. (We can discover such paths by enumerating all (type-consistent) paths from entities of type $e_i$ to entities of type $e_j$. If there are too many relations to make this feasible, we can perform random sampling.)

We can compute the probability of following such a path by assuming that at each step, we follow an outgoing link uniformly at random. Let $P\left(\pi_L(i,j,k,t)\right)$ be the probability of this particular path; this can be computed recursively by a sampling procedure, similar to PageRank (see [116] for details). The key idea in PRA is to use these path probabilities as features for predicting the probability of missing edges. More precisely, define the feature vector

| [math]\displaystyle{ \phi^{PRA}_{ijk}=\big[P(\pi):\pi\in\Pi_L(i,j,k) \big] }[/math] | (19) |

We can then predict the edge probabilities using logistic regression:


| [math]\displaystyle{ f_{ijk}^{PRA}:=\mathbf{w}_k^{\top}\phi^{PRA}_{ijk} }[/math] | (20) |
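The following Python sketch computes PRA features for a toy graph. It assumes the walk picks uniformly among outgoing edges whose type matches the next relation in $t$, and it propagates the walk distribution exactly instead of sampling as in [116]. The graph and entity names are hypothetical; only the path type (draftedBy, school) and the weight 2.62 are borrowed from Table VI. Applying the sigmoid to Equation (20) gives the logistic-regression probability.

```python
import math
from collections import defaultdict


def build_index(triples):
    """out[(s, r)] lists the objects o of all triples (s, r, o)."""
    out = defaultdict(list)
    for s, r, o in triples:
        out[(s, r)].append(o)
    return out


def path_probability(out, start, end, path_type):
    """P(pi): probability that a walk from `start` following the relation types in
    `path_type`, choosing uniformly among matching outgoing edges, ends at `end`.
    Computed exactly by propagating the walk distribution (no sampling)."""
    dist = {start: 1.0}
    for rel in path_type:
        nxt = defaultdict(float)
        for node, p in dist.items():
            targets = out.get((node, rel), [])
            for t in targets:
                nxt[t] += p / len(targets)
        dist = nxt
    return dist.get(end, 0.0)


def pra_probability(out, s, o, path_types, w_k):
    """Equations (19)-(20) followed by a logistic link: sigma(w_k^T phi_ijk)."""
    phi = [path_probability(out, s, o, t) for t in path_types]
    f = sum(w * x for w, x in zip(w_k, phi))
    return 1.0 / (1.0 + math.exp(-f)), phi


# Hypothetical toy graph for the (draftedBy, school) path.
triples = [("alice", "draftedBy", "teamA"), ("teamA", "school", "collegeX"),
           ("bob", "draftedBy", "teamA"), ("bob", "draftedBy", "teamB"),
           ("teamB", "school", "collegeY")]
out = build_index(triples)
paths = [("draftedBy", "school")]
print(pra_probability(out, "alice", "collegeX", paths, w_k=[2.62]))
print(pra_probability(out, "bob", "collegeX", paths, w_k=[2.62]))
```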

Interpretability: A useful property of PRA is that its model is easily interpretable. In particular, relation paths can be regarded as bodies of weighted rules — more precisely Horn clauses — where the weight specifies how predictive the body of the rule is for the head. For instance, Table VI shows some relation paths along with their weights that have been learned by PRA in the KV project (see Section IX) to predict which college a person attended, i.e., to predict triples of the form $(p,\; college,\; c)$. The first relation path in Table VI can be interpreted as follows: it is likely that a person attended a college if the sports team that drafted the person is from the same college. This can be written in the form of a Horn clause as follows:

[math]\displaystyle{ (p,\; college,\; c) \;\leftarrow\; (p,\; draftedBy,\; t) \;\wedge\; (t,\; school,\; c) }[/math]

By using a sparsity promoting prior on $\mathbf{w}_k$, we can perform feature selection, which is equivalent to rule learning.

Relational learning results: PRA has been shown to outperform the ILP method FOIL [106] for link prediction in NELL [116]. It has also been shown to have comparable performance to ER-MLP on link prediction in KV: PRA obtained a result of 0.884 for the area under the ROC curve, as compared to 0.882 for ER-MLP [28].

| Relation Path | F1 | Prec | Rec | Weight |
|---|---|---|---|---|
| (draftedBy, school) | 0.03 | 1.0 | 0.01 | 2.62 |
| (sibling(s), sibling, education, institution) | 0.05 | 0.55 | 0.02 | 1.88 |
| (spouse(s), spouse, education, institution) | 0.06 | 0.41 | 0.02 | 1.87 |
| (parents, education, institution) | 0.04 | 0.29 | 0.02 | 1.37 |
| (children, education, institution) | 0.05 | 0.21 | 0.02 | 1.85 |
| (placeOfBirth, peopleBornHere, education) | 0.13 | 0.1 | 0.38 | 6.4 |
| (type, instance, education, institution) | 0.05 | 0.04 | 0.34 | 1.74 |
| (profession, peopleWithProf., edu., inst.) | 0.04 | 0.03 | 0.33 | 2.19 |

VI. COMBINING LATENT AND GRAPH FEATURE MODELS

It has been observed experimentally (see, e.g., [28]) that neither state-of-the-art relational latent feature models (RLFMs) nor state-of-the-art graph feature models are superior for learning from knowledge graphs. Instead, the strengths of latent and graph-based models are often complementary (see e.g., [117]), as both families focus on different aspects of relational data:

- Latent feature models are well-suited for modeling global relational patterns via newly introduced latent variables. They are computationally efficient if triples can be explained with a small number of latent variables.

- Graph feature models are well-suited for modeling local and quasi-local graph patterns. They are computationally efficient if triples can be explained from the neighborhood of entities or from short paths in the graph.

There has also been some theoretical work comparing these two approaches [118]. In particular, it has been shown that tensor factorization can be inefficient when relational data consists of a large number of strongly connected components. Fortunately, such “problematic” relations can often be handled efficiently via graph-based models. A good example is the $marriedTo$ relation: One marriage corresponds to a single strongly connected component, so data with a large number of marriages would be difficult to model with RLFMs. However, predicting $marriedTo$ links via graph-based models is easy: the existence of the triple $(John,\; marriedTo,\; Mary)$ can be simply predicted from the existence of $(Mary,\; marriedTo,\; John)$ by exploiting the symmetry of the relation. If the $(Mary,\; marriedTo,\; John)$ edge is unknown, we can use statistical patterns, such as the existence of shared children.

Combining the strengths of latent and graph-based models is therefore a promising approach to increase the predictive performance of graph models. It typically also speeds up the training. We now discuss some ways of combining these two kinds of models.

A. Additive Relational Effects Model

[118] proposed the additive relational effects (ARE) model, which is a way to combine RLFMs with observable graph models. In particular, if we combine RESCAL with PRA, we get

| [math]\displaystyle{ f^{RESCAL+PRA}_{ijk}=\mathbf{w}_k^{(1)\top}\phi_{ij}^{RESCAL}+\mathbf{w}_k^{(2)\top}\phi_{ijk}^{PRA} }[/math] | (21) |

ARE models can be trained by alternately optimizing the RESCAL parameters and the PRA parameters. The key benefit is that RESCAL now only has to model the “residual errors” that cannot be modeled by the observable graph patterns. This allows the method to use a much lower latent dimensionality, which significantly speeds up training. The resulting combined model also has increased accuracy [118].

B. Other Combined Models

In addition to ARE, further models have been explored to learn jointly from latent and observable patterns on relational data. [84, 85] combined a latent feature model with an additive term to learn from latent and neighborhood-based information on multi-relational data, as follows[11]:

| [math]\displaystyle{ f^{ADD}_{ijk}:=\mathbf{w}_{k,j}^{(1)\top}\phi_{i}^{SUB}+\mathbf{w}_{k,i}^{(2)\top}\phi_{j}^{OBJ}+\mathbf{w}_{k}^{(3)\top}\phi_{ijk}^{N} }[/math] | (22) |

| [math]\displaystyle{ \phi_{ijk}^{N}:=\big[y_{ijk'}:k'\ne k \big] }[/math] | (23) |

Here, $\phi_i^{SUB}$ is the latent representation of entity $e_i$ as a subject and $\phi_j^{OBJ}$ is the latent representation of entity $e_j$ as an object. The term $\phi_{ijk}^N$ efficiently captures patterns where the existence of a triple $y_{ijk'}$ is predictive of another triple $y_{ijk}$ between the same pair of entities (but of a different relation type). For instance, if Leonard Nimoy was born in Boston, it is also likely that he lived in Boston. This dependency between the relation types $bornIn$ and $livedIn$ can be modeled via the neighborhood term of Equations (22) and (23) by assigning a large weight to $w_{bornIn,\;livedIn}$.
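The neighborhood term $\mathbf{w}_k^{(3)\top}\phi_{ijk}^{N}$ can be sketched as follows. This covers only the third term of Equation (22); the two latent terms are omitted, and the weight values are hypothetical stand-ins for learned parameters.

```python
def neighborhood_term(kg, subj, rel, obj, relations, weights):
    """w_k^(3)T phi^N_ijk from Eqs. (22)-(23).

    phi^N_ijk holds y_ijk' for every other relation k' between the same
    (subj, obj) pair; weights[rel][k'] is a hypothetical learned weight of
    relation k' for predicting rel.
    """
    phi = {r: 1.0 if (subj, r, obj) in kg else 0.0 for r in relations if r != rel}
    return sum(weights[rel].get(r, 0.0) * y for r, y in phi.items())


relations = ["bornIn", "livedIn", "diedIn"]
kg = {("LeonardNimoy", "bornIn", "Boston")}
# A large weight on bornIn encodes "born in X => likely lived in X" (made-up values).
weights = {"livedIn": {"bornIn": 2.0, "diedIn": 0.5}}
print(neighborhood_term(kg, "LeonardNimoy", "livedIn", "Boston", relations, weights))   # 2.0
print(neighborhood_term(kg, "LeonardNimoy", "livedIn", "NewYork", relations, weights))  # 0.0
```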

ARE and the models of [84] and [85] are similar in spirit to the model of [119], which augments SVD (i.e., matrix factorization) of a rating matrix with additive terms to include local neighborhood information. Similarly, factorization machines [120] allow one to combine latent and observable patterns by modeling higher-order interactions between input variables via low-rank factorizations [78].

An alternative way to combine different prediction systems is to fit them separately and use their outputs as inputs to another “fusion” system. This is called stacking [121]. For instance, [28] used the outputs of PRA and ER-MLP as scalar features and learned a final “fusion” layer by training a binary classifier. Stacking has the advantage that it is very flexible in the kinds of models that can be combined. However, it has the disadvantage that the individual models cannot cooperate, and thus each individual model needs to be more complex than in a combined model that is trained jointly. For example, if we fit RESCAL separately from PRA, we will need a larger number of latent features than if we fit them jointly.
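A stacking fusion layer can be sketched as a logistic-regression classifier over two scalar base-model scores. The score values below are hypothetical, and plain full-batch gradient descent is our choice for a dependency-free sketch, not necessarily the classifier used in [28].

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_fusion(rows, lr=0.5, epochs=2000):
    """Logistic regression on (score_pra, score_ermlp, label) rows via gradient descent."""
    w1 = w2 = b = 0.0
    n = len(rows)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for s1, s2, y in rows:
            err = sigmoid(w1 * s1 + w2 * s2 + b) - y  # gradient of log loss w.r.t. the logit
            g1 += err * s1
            g2 += err * s2
            gb += err
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return w1, w2, b


def fuse(params, s_pra, s_ermlp):
    """Fused probability that a triple is true, given the two base-model scores."""
    w1, w2, b = params
    return sigmoid(w1 * s_pra + w2 * s_ermlp + b)


# Hypothetical base-model scores for labeled triples.
rows = [(0.9, 0.8, 1), (0.8, 0.7, 1), (0.7, 0.9, 1),
        (0.2, 0.1, 0), (0.1, 0.3, 0), (0.3, 0.2, 0)]
params = train_fusion(rows)
print(fuse(params, 0.85, 0.85) > 0.5, fuse(params, 0.15, 0.15) < 0.5)  # True True
```

As is usual for stacking, the fusion classifier would normally be fit on scores for triples not used to train the base models.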

VII. TRAINING SRL MODELS ON KNOWLEDGE GRAPHS

In this section we discuss aspects of training the previously discussed models that are specific to knowledge graphs, such as how to handle the open-world assumption of knowledge graphs, how to exploit sparsity, and how to perform model selection.

A. Penalized Maximum Likelihood Training

Let us assume we have a set of $N_d$ observed triples and let the $n$-th triple be denoted by $x^n$. Each observed triple is either true (denoted $y^n = 1$) or false (denoted $y^n = 0$). Let this labeled dataset be denoted by $\mathcal{D} = \{\left(x^n, y^n\right) \vert\; n =1,\cdots, N_d\}$. Given this, a natural way to estimate the parameters $\Theta$ is to compute the maximum a posteriori (MAP) estimate:

| [math]\displaystyle{ \displaystyle \underset{\Theta}{\mathrm{max}}\sum_{n=1}^{N_d}\log\mathrm{Ber}\left(y^n\vert\;\sigma\left(f\left(x^n;\Theta\right)\right)\right)+\log p\left(\boldsymbol{\Theta}\vert\lambda\right) }[/math] | (24) |

where $\lambda$ controls the strength of the prior. (If the prior is uniform, this is equivalent to maximum likelihood training.) We can equivalently state this as a regularized loss minimization problem:

| [math]\displaystyle{ \displaystyle \underset{\Theta}{\mathrm{min}}\sum_{n=1}^{N_d}\mathcal{L}\left(\sigma\left(f\left(x^n;\Theta\right)\right), y^n\right)+\lambda\;\mathrm{reg}\left(\boldsymbol{\Theta}\right) }[/math] | (25) |

where $\mathcal{L}(p,y) = -\log\mathrm{Ber}(y\vert p)$ is the log loss function. Another possible loss function is the squared loss, $\mathcal{L}(p, y) = (p - y)^2$. Using the squared loss can be especially efficient in combination with a closed-world assumption (CWA). For instance, using the squared loss and the CWA, the minimization problem for RESCAL becomes

| [math]\displaystyle{ \displaystyle \underset{\mathbf{E},\{\mathbf{W}_k\}}{\mathrm{min}}\sum_k\parallel\mathbf{Y}_k-\mathbf{EW}_k\mathbf{E}^{\top}\parallel^2_F+\lambda_1\parallel\mathbf{E}\parallel^2_F+\lambda_2\sum_k\parallel\mathbf{W}_k\parallel^2_F }[/math] | (26) |

where $\lambda_1, \lambda_2 \ge 0$ control the degree of regularization. The main advantage of Equation (26) is that it can be optimized via RESCAL-ALS, which consists of a sequence of very efficient, closed-form updates whose computational complexity depends only on the non-zero entries in $\mathbf{Y}$ [63, 64]. We discuss some other loss functions below.
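The value of the objective in Equation (26) can be evaluated directly, which is a useful sanity check when implementing a solver. This dense sketch uses nested lists to stay dependency-free and costs $O(N_e^2)$ per relation; it does not implement RESCAL-ALS or exploit sparsity, and the toy matrices are made up.

```python
def matmul(A, B):
    """Product of two matrices given as nested lists."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def frob_sq(A):
    """Squared Frobenius norm."""
    return sum(x * x for row in A for x in row)


def rescal_objective(Y, E, W, lam1, lam2):
    """Eq. (26): sum_k ||Y_k - E W_k E^T||_F^2 + lam1 ||E||_F^2 + lam2 sum_k ||W_k||_F^2."""
    Et = transpose(E)
    loss = 0.0
    for Y_k, W_k in zip(Y, W):
        R = matmul(matmul(E, W_k), Et)  # reconstruction of slice k
        loss += sum((y - r) ** 2 for y_row, r_row in zip(Y_k, R) for y, r in zip(y_row, r_row))
    return loss + lam1 * frob_sq(E) + lam2 * sum(frob_sq(W_k) for W_k in W)


# Toy example: 2 entities, rank-1 embeddings, a single relation slice.
Y = [[[1, 0], [0, 1]]]
E = [[1.0], [1.0]]
W = [[[1.0]]]
print(round(rescal_objective(Y, E, W, lam1=0.1, lam2=0.1), 6))  # 2.3
```

Here the reconstruction error contributes 2 (two off-diagonal misses), and the regularizers add $0.1 \cdot 2 + 0.1 \cdot 1$.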

B. Where Do The Negative Examples Come From?

One important question is where the labels $y^n$ come from. The problem is that most knowledge graphs only contain positive training examples since, usually, they do not encode false facts. Hence $y^n = 1$ for all $\left(x^n, y^n\right) \in \mathcal{D}$. To emphasize this, we shall use the notation $\mathcal{D}^+$ to represent the observed positive (true) triples: $\mathcal{D}^+ = \{x^n \in \mathcal{D} \vert\; y^n = 1\}$. Training on all-positive data is tricky because the model might easily overgeneralize.

One way around this is to make a closed-world assumption and assume that all (type-consistent) triples that are not in $\mathcal{D}^+$ are false. We will denote this negative set as $\mathcal{D}^- = \{x^n \in \mathcal{D} \vert\; y^n = 0\}$. However, for incomplete knowledge graphs this assumption will be violated. Moreover, $\mathcal{D}^-$ might be very large, since the number of false facts is much larger than the number of true facts. This can lead to scalability issues in training methods that have to consider all negative examples.
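Under the CWA, the negative set is every type-consistent triple that is not a known positive. The sketch below enumerates it for a toy version of the Figure 1 movie example; the type names and the `signature` mapping (relation to subject/object types) are hypothetical illustration choices.

```python
from itertools import product


def cwa_negatives(positives, entities_by_type, signature):
    """All type-consistent triples not in positives (closed-world assumption).

    signature maps each relation to its (subject_type, object_type) pair.
    """
    negatives = set()
    for rel, (s_type, o_type) in signature.items():
        for s, o in product(entities_by_type[s_type], entities_by_type[o_type]):
            if (s, rel, o) not in positives:
                negatives.add((s, rel, o))
    return negatives


positives = {("LeonardNimoy", "starredIn", "StarTrek"),
             ("AlecGuinness", "starredIn", "StarWars")}
entities_by_type = {"Person": ["LeonardNimoy", "AlecGuinness", "BarackObama"],
                    "Movie": ["StarTrek", "StarWars"]}
signature = {"starredIn": ("Person", "Movie")}
negatives = cwa_negatives(positives, entities_by_type, signature)
print(len(negatives))  # 4: 3 persons x 2 movies, minus 2 positives
```

The set grows with the product of the type sizes, which is the scalability problem noted above, and it includes "irrelevant" negatives such as $(BarackObama,\; starredIn,\; StarTrek)$.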

An alternative approach to generate negative examples is to exploit known constraints on the structure of a knowledge graph: type constraints for predicates (persons are only married to persons), valid value ranges for attributes (the height of humans is below 3 meters), or functional constraints such as mutual exclusion (a person is born in exactly one city) can all be used for this purpose. Since such examples are based on the violation of hard constraints, it is certain that they are indeed negative examples. Unfortunately, functional constraints are scarce, and negative examples based on type constraints and valid value ranges are usually not sufficient to train useful models: while it is relatively easy to predict that a person is married to another person, it is difficult to predict to which person in particular. For the latter, examples based on type constraints alone are not very informative. A better way to generate negative examples is to “perturb” true triples. In particular, let us define

| [math]\displaystyle{ \mathcal{D}^- = \{\left(e_{\ell}, r_k, e_j\right) \mid e_i \ne e_{\ell} \wedge \left(e_i,r_k,e_j\right) \in \mathcal{D}^+\} \cup \{\left(e_i, r_k, e_{\ell}\right) \mid e_j \ne e_{\ell} \wedge \left(e_i,r_k,e_j\right) \in \mathcal{D}^+\} }[/math] |

To understand the difference between this approach and the CWA (where we assumed all valid unknown triples were false), let us consider the example in Figure 1. The CWA would generate “good” negative triples such as $(LeonardNimoy,\; starredIn,\; StarWars)$, $(AlecGuinness,\; starredIn,\; StarTrek)$, etc., but also type-consistent but “irrelevant” negative triples such as $(BarackObama,\; starredIn,\; StarTrek)$, etc. (For the sake of this example, we assume there is a type $Person$ but not a type $Actor$.) The second approach (based on perturbation) would not generate negative triples such as $(BarackObama,\; starredIn,\; StarTrek)$, since $BarackObama$ does not participate in any $starredIn$ events. This reduces the size of $\mathcal{D}^-$ and encourages it to focus on “plausible” negatives. (An even better method, used in Section IX, is to generate the candidate triples from text extraction methods run on the Web. Many of these triples will be false due to extraction errors, but they define a good set of “plausible” negatives.)
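Perturbation-based negatives can be sketched by corrupting the subject or the object of each positive triple. Two assumptions go beyond the formula for $\mathcal{D}^-$ given above: replacement entities are drawn only from entities already seen in the same position of the same relation (our reading of the $BarackObama$ example), and perturbed triples that happen to be known positives are filtered out.

```python
def perturbation_negatives(positives):
    """Corrupt the subject or object of each positive (s, r, o) triple.

    Assumptions: replacements come only from entities seen in the same slot of
    the same relation, and corrupted triples that are known positives are dropped.
    """
    subjects, objects = {}, {}
    for s, r, o in positives:
        subjects.setdefault(r, set()).add(s)
        objects.setdefault(r, set()).add(o)
    negatives = set()
    for s, r, o in positives:
        for s2 in subjects[r] - {s}:  # (e_l, r_k, e_j) with e_l != e_i
            negatives.add((s2, r, o))
        for o2 in objects[r] - {o}:   # (e_i, r_k, e_l) with e_l != e_j
            negatives.add((s, r, o2))
    return negatives - positives


positives = {("LeonardNimoy", "starredIn", "StarTrek"),
             ("AlecGuinness", "starredIn", "StarWars")}
print(sorted(perturbation_negatives(positives)))
# [('AlecGuinness', 'starredIn', 'StarTrek'), ('LeonardNimoy', 'starredIn', 'StarWars')]
```

Because $BarackObama$ never appears as a subject of $starredIn$, no negative involving him is produced.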

Another option to generate negative examples for training is to make a local closed-world assumption (LCWA) [107, 28], in which we assume that a KG is only locally complete. More precisely, if we have observed any triple for a particular subject-predicate pair $e_i$, $r_k$, then we will assume that any non-existing triple $\left(e_i,r_k,\cdot\right)$ is indeed false and include it in $\mathcal{D}^-$. (The assumption is valid for functional relations, such as $bornIn$, but not for set-valued relations, such as $starredIn$.) However, if we have not observed any triple at all for the pair $e_i$, $r_k$, we will assume that all triples $\left(e_i,r_k,\cdot\right)$ are unknown and not include them in $\mathcal{D}^-$.
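The LCWA can be sketched as follows. The `candidate_objects` mapping (relation to the type-consistent objects to consider) is a hypothetical input; relations without candidates simply produce no negatives.

```python
def lcwa_negatives(positives, candidate_objects):
    """Local closed-world assumption.

    For every (subject, relation) pair with at least one observed triple,
    unobserved candidate objects are treated as false; pairs with no observed
    triple stay unknown and contribute nothing.
    """
    observed_pairs = {(s, r) for s, r, _ in positives}
    return {
        (s, r, o)
        for s, r in observed_pairs
        for o in candidate_objects.get(r, ())
        if (s, r, o) not in positives
    }


positives = {("LeonardNimoy", "bornIn", "Boston"),
             ("AlecGuinness", "starredIn", "StarWars")}
candidate_objects = {"bornIn": {"Boston", "London"}}
print(lcwa_negatives(positives, candidate_objects))
# {('LeonardNimoy', 'bornIn', 'London')}
```

No $bornIn$ negatives are generated for $AlecGuinness$, because no $bornIn$ triple has been observed for him.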

C. Pairwise Loss Training

Given that the negative training examples are not always truly negative, an alternative to likelihood training is to make the probability (or, more generally, some scoring function) larger for true triples than for assumed-to-be-false triples. That is, we can define the following objective function:

| [math]\displaystyle{ \displaystyle \underset{\Theta}{\mathrm{min}}\sum_{x^+\in\mathcal{D}^+}\sum_{x^-\in\mathcal{D}^-}\mathcal{L}\left(f\left(x^+;\Theta\right), f\left(x^-;\Theta\right)\right)+\lambda\;\mathrm{reg}\left(\boldsymbol{\Theta}\right) }[/math] | (27) |

where $\mathcal{L}(f, f')$ is a margin-based ranking loss function such as

| [math]\displaystyle{ \mathcal{L}\left(f,f'\right)=\mathrm{max}\left(1+f'-f,\; 0\right) }[/math] | (28) |

This approach has several advantages. First, it does not assume that negative examples are necessarily negative, just that they are “more negative” than the positive ones. Second, it allows $f(\cdot)$ to be any function, not just a probability (but we do assume that larger $f$ values mean the triple is more likely to be correct).

This kind of objective function is easily optimized by stochastic gradient descent (SGD) [122]: at each iteration, we just sample one positive and one negative example. SGD also scales well to large datasets. However, it can take a long time to converge. On the other hand, as discussed previously, some models, when combined with the squared loss objective, can be optimized using alternating least squares (ALS), which is typically much faster.
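SGD on the pairwise objective of Equations (27) and (28) can be sketched with a linear scoring function $f(x) = \mathbf{w}^\top\phi(x)$ over fixed feature vectors. The linear model, the L2 regularizer, the step size, and the toy features are all assumptions for illustration; a latent model such as RESCAL would instead update its embeddings through the same hinge subgradient.

```python
import random


def score(w, phi):
    """Linear scoring function f(x) = w^T phi(x); larger means more plausible."""
    return sum(wi * xi for wi, xi in zip(w, phi))


def margin_loss(f_pos, f_neg):
    """Eq. (28): max(1 + f' - f, 0)."""
    return max(1.0 + f_neg - f_pos, 0.0)


def sgd_pairwise(pos_feats, neg_feats, lr=0.1, lam=0.01, steps=2000, seed=0):
    """Approximately minimize Eq. (27) with lam * ||w||^2, one (x+, x-) pair per step."""
    rng = random.Random(seed)
    w = [0.0] * len(pos_feats[0])
    for _ in range(steps):
        phi_p, phi_n = rng.choice(pos_feats), rng.choice(neg_feats)
        violated = margin_loss(score(w, phi_p), score(w, phi_n)) > 0.0
        # Hinge subgradient is (phi_n - phi_p) when the margin is violated;
        # 2 * lam * w is the gradient of the L2 regularizer.
        w = [wi - lr * (((xn - xp) if violated else 0.0) + 2.0 * lam * wi)
             for wi, xp, xn in zip(w, phi_p, phi_n)]
    return w


# Made-up feature vectors for assumed-true and assumed-false triples.
pos = [[1.0, 0.2], [0.9, 0.5]]
neg = [[0.1, 0.4], [0.0, 0.6]]
w = sgd_pairwise(pos, neg)
print(all(score(w, p) > score(w, n) for p in pos for n in neg))  # True
```

Note that the learned scores are not probabilities; only their ordering matters, which is the second advantage listed above.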

D. Model Selection

Almost all models discussed in previous sections include one or more user-given parameters that are influential for the model’s performance (e.g., dimensionality of latent feature models, length of relation paths for PRA, regularization parameter for penalized maximum likelihood training). Typically, cross-validation over random splits of $\mathcal{D}$ into training, validation, and test sets is used to find good values for such parameters without overfitting (for more information on model selection in machine learning, see e.g. [123]). For link prediction and entity resolution, the area under the ROC curve (AUC-ROC) or the area under the precision-recall curve (AUC-PR) are good evaluation criteria. For data with a large number of negative examples (as is typically the case for knowledge graphs), it has been shown that AUC-PR can give a clearer picture of an algorithm’s performance than AUC-ROC [124]. For entity resolution, the mean reciprocal rank (MRR) of the correct entity is an alternative evaluation measure.
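The two evaluation measures can be sketched in a few lines. We use average precision, a common step-wise estimate of AUC-PR, rather than an interpolated curve; tied scores are not handled specially, and the example rankings and scores are made up.

```python
def mean_reciprocal_rank(ranked_lists, correct):
    """ranked_lists[q] is ordered by decreasing score; correct[q] is the true entity."""
    total = 0.0
    for candidates, gold in zip(ranked_lists, correct):
        total += 1.0 / (candidates.index(gold) + 1)  # ranks are 1-based
    return total / len(ranked_lists)


def average_precision(scores, labels):
    """Step-wise AUC-PR estimate: mean precision at the rank of each positive."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, precisions = 0, []
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions)


# Correct entity ranked 1st for the first query and 2nd for the second.
print(mean_reciprocal_rank([["Boston", "Paris"], ["Paris", "Boston"]], ["Boston", "Boston"]))  # 0.75
# Positives at ranks 1 and 3: precisions 1/1 and 2/3.
print(round(average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]), 4))  # 0.8333
```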

VIII. MARKOV RANDOM FIELDS

In this section we drop the assumption that the random variables $y_{ijk}$ in $\mathbf{\underline{Y}}$ are conditionally independent. However, in the case of relational data and without the conditional independence assumption, each $y_{ijk}$ can depend on any of the other $N_e \times N_e \times N_r - 1$ random variables in $\mathbf{\underline{Y}}$. Due to this enormous number of possible dependencies, it quickly becomes intractable to estimate the joint distribution $P(\mathbf{\underline{Y}})$ without further constraints, even for very small knowledge graphs. To reduce the number of potential dependencies and arrive at tractable models, in this section we develop template-based graphical models that only consider a small fraction of all possible dependencies.

(See [125] for an introduction to graphical models.)
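To make the scale concrete, consider a hypothetical toy graph with only $N_e = 10$ entities and $N_r = 2$ relations (numbers chosen purely for illustration). Treating every possible triple as a binary random variable gives

| [math]\displaystyle{ N_e^2 N_r = 10 \cdot 10 \cdot 2 = 200 \text{ variables}, \qquad 200 - 1 = 199 \text{ potential dependencies per variable}, \qquad 2^{200} \approx 1.6\times 10^{60} \text{ joint states} }[/math] |

Even at this size, representing or normalizing an unrestricted joint distribution over all joint states is infeasible.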

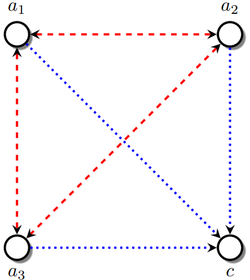

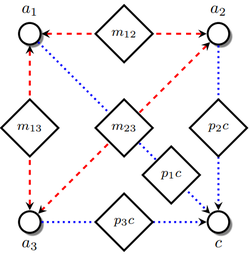

A. Representation

Graphical models use graphs to encode dependencies between random variables. Each random variable (in our case, a possible fact $y_{ijk}$) is represented as a node in the graph, while each dependency between random variables is represented as an edge. To distinguish such graphs from knowledge graphs, we will refer to them as dependency graphs. It is important to be aware of their key difference: while knowledge graphs encode the existence of facts, dependency graphs encode statistical dependencies between random variables.

To avoid problems with cyclical dependencies, it is common to use undirected graphical models, also called Markov Random Fields (MRFs)[12]. An MRF has the following form:

| [math]\displaystyle{ P\left(\mathbf{\underline{Y}}\vert\boldsymbol{\theta}\right)=\displaystyle \dfrac{1}{Z}\prod_c \psi\left(\mathbf{y}_c\vert\boldsymbol{\theta}\right) }[/math] | (29) |

where $\psi\left(\mathbf{y}_c\vert\boldsymbol{\theta}\right) \ge 0$ is a potential function on the $c$-th subset of variables, in particular the $c$-th clique in the dependency graph, and $Z = \displaystyle\sum_{\mathbf{y}}\prod_c\psi\left(\mathbf{y}_c\vert\boldsymbol{\theta}\right)$ is the partition function, which ensures that the distribution sums to one. The potential functions capture local correlations between variables in each clique $c$ in the dependency graph. (Note that in undirected graphical models, the local potentials do not have any probabilistic interpretation, unlike in directed graphical models.) This equation again defines a probability distribution over “possible worlds”, i.e., over joint assignments to the random variables $\mathbf{\underline{Y}}$.
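For a handful of binary variables, Equation (29) and its partition function can be computed by brute-force enumeration of all possible worlds. This sketch is only feasible for tiny models, since the enumeration is exponential in the number of variables; the single pairwise potential below, which favors agreement between the two directions of a $marriedTo$ edge, has made-up values.

```python
from itertools import product


def mrf_distribution(num_vars, cliques):
    """Brute-force Eq. (29) for binary variables.

    cliques is a list of (variable_indices, psi) pairs, where psi maps the tuple
    of values of those variables to a non-negative potential.
    """
    unnormalized = {}
    for y in product((0, 1), repeat=num_vars):  # every joint assignment ("possible world")
        weight = 1.0
        for idx, psi in cliques:
            weight *= psi(tuple(y[i] for i in idx))
        unnormalized[y] = weight
    Z = sum(unnormalized.values())  # partition function
    return {y: w / Z for y, w in unnormalized.items()}, Z


def agree(vals):
    """Hypothetical potential: favor (Mary, marriedTo, John) and its reverse agreeing."""
    return 3.0 if vals[0] == vals[1] else 1.0


# y0 = (Mary, marriedTo, John), y1 = (John, marriedTo, Mary)
probs, Z = mrf_distribution(2, [((0, 1), agree)])
print(Z, probs[(1, 1)])  # 8.0 0.375
```

The potential itself is not a probability; only after dividing by $Z$ do the four possible worlds receive probabilities that sum to one.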

The structure of the dependency graph (which defines the cliques in Equation (29)) is derived from a template mechanism that can be defined in a number of ways. A common approach is to use Markov logic [126], which is a template language based on logical formulae:

Given a set of formulae $\mathcal{F} = \{F_i\}_{i=1}^L$, we create an edge between nodes in the dependency graph if the corresponding facts occur in at least one grounded formula. A grounding of a formula $F_i$ is given by the (type-consistent) assignment of entities to the variables in $F_i$. Furthermore, we define $\psi\left(\mathbf{y}_c\vert\boldsymbol{\theta}\right)$ such that